System of linear equations

In linear algebra, a system of linear equations (LGS for short) is a set of linear equations with one or more unknowns that are all supposed to be satisfied simultaneously.

For example, a corresponding system for three unknowns

For

In general, a linear system of equations with

Linear systems of equations are called homogeneous if all

Geometric interpretation

With the help of the scalar product of vectors, each of the m equations of a linear system of equations can be interpreted geometrically as the normal form of a hyperplane in an n-dimensional vector space, where n is the number of variables or unknowns. Special cases of hyperplanes are straight lines in the plane and planes in space.

The i-th equation of a linear system of equations (i = 1,...,m)

can be represented as

Thus the solvability of a linear system of equations can be traced back to an intersection problem of hyperplanes: The set of common points of all hyperplanes is sought.

Example

Linear systems of equations often arise as models of practical tasks. A typical example from school mathematics is as follows:

"A father and a son are 62 years old together. Six years ago, the father was four times as old as the son was then. How old is each?"

It can also be described by the following system of linear equations:

The variable

To solve this system of equations, a variety of solution methods can be used (see solution methods). The addition method is used here as an example. To first eliminate the variable

The resulting equation is

By solving the equation according to the variable

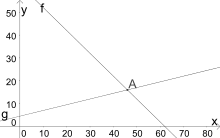

The task can also be solved geometrically by interpreting the two lines of the linear equation system as straight line equations. In doing so, the variable v is designated as x and the variable s as y and both equations are solved for y:

The intersection of the two lines is the point

The graphs of the question intersecting at point A(46;16).

Matrix form

For the treatment of linear systems of equations it is useful to combine all coefficients

Furthermore, all unknowns and the right-hand side of the equation system can also be combined into single-column matrices (these are column vectors):

Thus, a linear system of equations using matrix-vector multiplication can be written as follows

Both the coefficients

To define a linear system of equations, it is not necessary to specify the unknowns. It is sufficient to specify the expanded coefficient matrix, which is created when a column with the right-hand side

Solubility

A vector

- The linear system of equations has no solution, i.e. the solution set is the empty set.

- The linear system of equations has exactly one solution, i.e. the solution set contains exactly one element.

- The linear system of equations has infinitely many solutions. In this case, the solution set contains an infinite number of n-tuples that satisfy all equations of the system.

Over a finite body, the number of solutions is a power of the thickness of

Examples of solvability with geometric interpretation (intersection of two straight lines in the plane)

The two equations of the linear system of equations are each interpreted as the normal form of a straight line equation in the plane.

- Clear solution:

The two equations of the straight line are:

The two normal vectors are not collinear, so the two straight lines are neither parallel nor identical and therefore intersect at a point.

The intersection

- No solution:

The corresponding straight line equations are

The normal vectors are collinear. Since the two straight lines are not identical, they are parallel.

There are therefore no intersection points and therefore no solution. The solution set is empty:

- An infinite number of solutions:

The two equations of the straight line are:

The normal vectors are collinear, the two straight lines are identical.

There are therefore infinitely many intersections and thus also infinitely many solutions. The solution set is

Corresponding considerations can also be applied to planes in space or hyperplanes in n-dimensional vector space.

Solvability criteria

A linear system of equations is solvable if the rank of the coefficient matrix is

For a quadratic system of equations, i.e. in the case

In particular, systems of equations with more equations than unknowns, so-called overdetermined systems of equations, often have no solution. For example, the following system of equations has no solution because

Approximate solutions of overdetermined equation systems are then usually defined and determined via the balancing calculation.

That a linear system of equations has infinitely many solutions can only occur if there are fewer linearly independent equations as unknowns and the underlying body

Solution set

The solution set of a linear system of equations consists of all vectors

If there is a homogeneous linear system of equations, its solution set

If the solution set of an inhomogeneous linear system of equations is non-empty, then it is an affine subspace of

The solution set of a linear system of equations does not change when one of the three elementary row transformations is performed:

- Swap two lines

- Multiplying a row by a non-zero number

- Adding a row (or the multiple of a row) to another row

The solution set of a quadratic linear system of equations does not change even if the system of equations is multiplied by a regular matrix.

Determination via the extended coefficient matrix

The form of the solution set can basically be determined with the help of the extended coefficient matrix by putting it into step form with the help of elementary row transformations (see Gaussian method):

In order to always obtain exactly this form, one must sometimes also carry out column swaps. Column swaps change the order of the variables, which must be taken into account at the end. Furthermore, it is also assumed here that the coefficients

The number of solutions can then be read from the

- If at least one of the

non-zero, there is no solution.

- If all are

equal to zero (or

), then holds:

- If

then the system of equations is uniquely solvable.

- If

there are infinitely many solutions. The solution space has the dimension

.

By further elementary row transformations (see Gauss-Jordan method) the matrix can be brought into the following form:

If there is a solution at all (

Here

Forms of systems of equations

Linear systems of equations can exist in forms in which they can be easily solved. In many cases, arbitrary systems of equations are given an appropriate form by means of an algorithm in order to subsequently find a solution.

Square

We speak of a quadratic system of equations when the number of unknowns is equal to the number of equations. A system of equations of this form can be solved uniquely if the rows or columns are linearly independent (solution methods are discussed below).

Step shape, stair shape

In the step form (also row step form, row normal form, step form, stair step form or stair normal form), the number of unknowns is reduced by at least one in each row, which then also no longer occurs in the following rows. By applying the Gaussian elimination method, any system of equations can be brought into this form.

Example (the coefficients of omitted elements are

Linear systems of equations in step form can be solved by inserting them backwards (back substitution). Starting with the last line, the unknown is calculated and the result obtained is inserted into the line above in order to calculate the next unknown.

Solution of the above example:

- Resolve the second line to

- Insert

into the first line:

- Resolve the first line to

- With

all vectors of the form are

solutions of the system of equations.

Triangular shape

The triangular form is a special case of the step form, where each line has exactly one less unknown than the previous one. This means that all coefficients

Example (the coefficients of omitted elements are

Like linear systems of equations in step form, those in triangular form can also be solved by substituting backwards.

Reduced step form

The reduced step form (also normalised row step form) is a special case of the step form. In this form, the first unknowns of each row occur only once and have the coefficient

Example (the coefficients of omitted elements are

The solution of the linear system of equations can now be read off directly: Provided

Other forms

Relevant in practice are the special cases of sparse matrices (very large matrices with relatively few non-zero elements) and band matrices (likewise large matrices whose non-vanishing elements are concentrated around the main diagonal), which can be handled with specially adapted solution methods (see below).

Solution procedure

The methods for solving systems of linear equations are divided into iterative and direct methods. Examples of direct methods are the substitution method, the equation method and the addition method for simple systems of equations as well as the Gaussian elimination method based on the addition method, which brings a system of equations to step form. A variant of the Gaussian method is the Cholesky decomposition, which only works for symmetrical, positive definite matrices. The QR decomposition requires twice as much effort as the Gaussian method, but is more stable. Cramer's rule uses determinants to generate formulae for solving a quadratic linear system of equations if it is uniquely solvable. However, it is not suitable for numerical calculation due to the high computational effort involved.

Iterative methods are, for example, the Gauss-Seidel and Jacobi methods, which belong to the class of splitting methods. These do not converge for every matrix and are very slow for many practical problems. More modern methods are, for example, preconditioned Krylov subspace methods, which are very fast especially for large sparse matrices, as well as multigrid methods for solving systems that originate from the discretisation of certain partial differential equations.

In applications (e.g. geodesy), measurements of different types are often made and, to reduce the effect of measurement errors, more measurements are made than unknowns to be determined. Each measurement provides an equation for determining the unknowns. If these equations are not all linear, the system of equations is linearised using known approximations of the unknowns. Then, instead of the actual unknowns, their small deviations from the approximate values are to be determined. As a rule, the equations contradict each other if there are more equations than unknowns, so that there is no strict solution. As a way out, a solution is then usually determined by balancing using the method ofleast squares, which typically does not fulfil any equation exactly, but gives an optimal approximation of the "true" measured quantities under reasonable assumptions about the measurement errors.

The best currently known asymptotic upper bound on arithmetic operations to solve an arbitrary system of linear equations is provided by a practically inapplicable algorithm by Don Coppersmith and Shmuel Winograd in 1990 that solves an

Almost singular linear systems of equations can be solved passably in a numerical way by singular value decomposition.

Search within the encyclopedia