Normal distribution

The normal or Gaussian distribution (after Carl Friedrich Gauss) is an important type of continuous probability distribution in stochastics. Its probability density function is also called Gaussian function, Gaussian normal distribution, Gaussian distribution curve, Gaussian curve, Gaussian bell curve, Gaussian bell function, Gaussian bell or simply bell curve.

The special significance of the normal distribution is based, among other things, on the central limit theorem, according to which distributions that result from additive superposition of a large number of independent influences are approximately normally distributed under weak conditions. The family of normal distributions forms a location-scale family.

For many natural, economic and engineering processes, the deviations of measured values from the expected value are described to a very good approximation by the normal distribution (for biological processes often by the log-normal distribution). This holds especially for processes in which several factors act independently of one another in different directions.

Random variables with normal distribution are used to describe random processes such as:

- random scattering of measured values,

- random deviations from the nominal dimension during the production of workpieces,

- Brownian molecular motion.

In actuarial science, the normal distribution is suitable for modeling loss data in the range of medium loss amounts.

In measurement technology, a normal distribution is often used to describe the scatter of measured values.

The standard deviation σ describes the width of the normal distribution. Approximately:

- In the interval of deviation $\pm\sigma$ from the expected value, 68.27 % of all measured values are found,

- In the interval of deviation $\pm 2\sigma$ from the expected value, 95.45 % of all measured values are found,

- In the interval of deviation $\pm 3\sigma$ from the expected value, 99.73 % of all measured values are found.

Conversely, the maximum deviations from the expected value can be given for specified probabilities:

- 50 % of all measured values have a deviation of at most $0.675\sigma$ from the expected value,

- 90 % of all measured values have a deviation of at most $1.645\sigma$ from the expected value,

- 95 % of all measured values have a deviation of at most $1.960\sigma$ from the expected value,

- 99 % of all measured values have a deviation of at most $2.576\sigma$ from the expected value.

Thus, in addition to the expected value, which can be interpreted as the center of gravity of the distribution, the standard deviation can also be assigned a simple meaning with respect to the magnitudes of the occurring probabilities or frequencies.

Definition

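A continuous random variable $X$ has a (Gaussian or) normal distribution with expected value $\mu$ and variance $\sigma^2$ ($-\infty < \mu < \infty$, $\sigma^2 > 0$), often written $X \sim \mathcal{N}(\mu, \sigma^2)$, if $X$ has the probability density

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}, \quad x \in \mathbb{R}.$$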

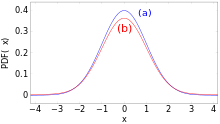

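The graph of this density function has a "bell shape" and is symmetric about the parameter $\mu$, which is at the same time the expected value, the median and the mode of the distribution. The variance of $X$ is the parameter $\sigma^2$. The density has inflection points at $x = \mu \pm \sigma$.

The probability density of a normally distributed random variable has no antiderivative that can be written in closed form with elementary functions, so probabilities must be calculated numerically. They can be read from a standard normal distribution table, which uses a standard form. To see this, use the fact that a linear function of a normally distributed random variable is itself normally distributed. Specifically, if $X \sim \mathcal{N}(\mu, \sigma^2)$ and $Y = aX + b$ with constants $a \neq 0$ and $b$, then $Y \sim \mathcal{N}(a\mu + b, a^2\sigma^2)$. As a consequence, the random variable

$$Z = \frac{X - \mu}{\sigma} \sim \mathcal{N}(0, 1),$$

which is also called a standard normally distributed random variable. The standard normal distribution is thus the normal distribution with parameters $\mu = 0$ and $\sigma^2 = 1$. Its density function is

$$\varphi(x) = \frac{1}{\sqrt{2\pi}}\, e^{-\frac{1}{2}x^2}.$$

Its graph is shown in the accompanying figure.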

The multidimensional generalization can be found in the article on the multivariate normal distribution.

Properties

Distribution function

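The distribution function of the normal distribution is given by

$$F(x) = \frac{1}{\sigma\sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{1}{2}\left(\frac{t-\mu}{\sigma}\right)^2}\,dt.$$

If the substitution $z = \frac{t-\mu}{\sigma}$ is used to introduce a new integration variable in place of $t$, the result is

$$F(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{(x-\mu)/\sigma} e^{-\frac{1}{2}z^2}\,dz = \Phi\!\left(\frac{x-\mu}{\sigma}\right).$$

Here $\Phi$ is the distribution function of the standard normal distribution,

$$\Phi(x) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{x} e^{-\frac{1}{2}t^2}\,dt.$$

Using the error function $\operatorname{erf}$, $\Phi$ can be written as

$$\Phi(x) = \frac{1}{2}\left(1 + \operatorname{erf}\!\left(\frac{x}{\sqrt{2}}\right)\right).$$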
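The relation between the distribution function and the error function can be tried out directly. The following is a minimal Python sketch, assuming the standard identity $\Phi(x) = \tfrac12(1 + \operatorname{erf}(x/\sqrt2))$; the function names are chosen here for illustration only.

```python
import math


def standard_normal_cdf(x: float) -> float:
    """Phi(x) expressed through the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def normal_cdf(x: float, mu: float = 0.0, sigma: float = 1.0) -> float:
    """F(x) = Phi((x - mu) / sigma) for a normal distribution with sigma > 0."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return standard_normal_cdf((x - mu) / sigma)


# Half of the probability mass lies below the expected value.
print(standard_normal_cdf(0.0))
# One standard deviation above the mean, for any mu and sigma.
print(normal_cdf(110.0, mu=100.0, sigma=10.0))
```

Because `normal_cdf` only rescales its argument, a single implementation of $\Phi$ is enough for every choice of $\mu$ and $\sigma$.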

Symmetry

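The graph of the probability density $f\colon \mathbb{R} \to \mathbb{R}$ is a Gaussian bell curve whose height and width depend on $\sigma$. It is axially symmetric about the line $x = \mu$. The graph of the distribution function $F$ is point symmetric about the point $(\mu, 0.5)$. For $\mu = 0$ in particular, $\varphi(-x) = \varphi(x)$ and $\Phi(-x) = 1 - \Phi(x)$ for all $x \in \mathbb{R}$.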

Maximum value and inflection points of the density function

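The first and second derivatives can be used to determine the maximum value and the inflection points. The first derivative is

$$f'(x) = -\frac{x-\mu}{\sigma^2}\, f(x).$$

Thus, the maximum of the density function of the normal distribution is at $x_{\max} = \mu$, where it has the value $f_{\max} = \frac{1}{\sigma\sqrt{2\pi}}$.

The second derivative is

$$f''(x) = \frac{1}{\sigma^2}\left(\frac{(x-\mu)^2}{\sigma^2} - 1\right) f(x).$$

Thus, the inflection points of the density function are at $x = \mu \pm \sigma$. There the density function has the value $\frac{1}{\sigma\sqrt{2\pi e}}$.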
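The location of the maximum and of the inflection points can be checked numerically. The sketch below is only an illustration: the parameter values are arbitrary, and the second derivative is approximated by a central finite difference rather than computed exactly.

```python
import math


def normal_pdf(x: float, mu: float, sigma: float) -> float:
    """Density of the normal distribution with parameters mu and sigma."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def second_derivative(f, x: float, h: float = 1e-4) -> float:
    """Central finite-difference approximation of f''(x)."""
    return (f(x + h) - 2.0 * f(x) + f(x - h)) / (h * h)


mu, sigma = 2.0, 1.5  # illustrative values


def f(x: float) -> float:
    return normal_pdf(x, mu, sigma)


peak = f(mu)                                  # height at the maximum
inflection_height = f(mu + sigma)             # height at an inflection point
curvature_right = second_derivative(f, mu + sigma)
curvature_left = second_derivative(f, mu - sigma)

print(peak, 1.0 / (sigma * math.sqrt(2.0 * math.pi)))
print(inflection_height, 1.0 / (sigma * math.sqrt(2.0 * math.pi * math.e)))
print(curvature_left, curvature_right)        # both close to zero
```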

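Normalization

It is important that the total area under the curve is equal to $1$, i.e., the probability of the certain event.

Any normal distribution is in fact normalized, because the linear substitution $z = \frac{x-\mu}{\sigma}$ gives

$$\int_{-\infty}^{\infty} \frac{1}{\sigma\sqrt{2\pi}}\, e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}\,dx = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} e^{-\frac{1}{2}z^2}\,dz = 1.$$

For the normalization of the latter integral, see error integral.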

Calculation

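Since the integral $\int e^{-\frac{1}{2}x^2}\,dx$ cannot be reduced to an elementary antiderivative, values of the distribution function were traditionally taken from tables (see standard normal distribution table). Today they are computed numerically by statistical software, which also handles the transformation to arbitrary $\mu$ and $\sigma$.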

Expected value

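The expected value of the standard normal distribution is $0$. For $Z \sim \mathcal{N}(0, 1)$,

$$E(Z) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{\infty} x\, e^{-\frac{1}{2}x^2}\,dx = 0,$$

since the integrand is integrable and point symmetric.

Now if $X \sim \mathcal{N}(\mu, \sigma^2)$, then $Z = \frac{X-\mu}{\sigma}$ is standard normally distributed, and therefore

$$E(X) = E(\sigma Z + \mu) = \sigma\, E(Z) + \mu = \mu.$$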

Variance and other measures of dispersion

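The variance of the $\mathcal{N}(\mu, \sigma^2)$-distributed random variable equals the parameter $\sigma^2$:

$$\operatorname{Var}(X) = \frac{1}{\sigma\sqrt{2\pi}} \int_{-\infty}^{\infty} (x-\mu)^2\, e^{-\frac{(x-\mu)^2}{2\sigma^2}}\,dx = \sigma^2.$$

An elementary proof is attributed to Poisson.

The mean absolute deviation is $\sqrt{\frac{2}{\pi}}\,\sigma \approx 0.80\,\sigma$ and the interquartile range is approximately $1.349\,\sigma$.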

Standard deviation of the normal distribution

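One-dimensional normal distributions are completely described by specifying the expected value $\mu$ and the variance $\sigma^2$. If both are known, the distribution is fully determined; the standard deviation $\sigma$ then serves as the natural measure of its width.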

Scattering intervals

From the standard normal distribution table, it can be seen that for normally distributed random variables approximately

- 68.3 % of realizations lie in the interval $\mu \pm \sigma$,

- 95.4 % lie in the interval $\mu \pm 2\sigma$,

- 99.7 % lie in the interval $\mu \pm 3\sigma$.

Since in practice many random variables are approximately normally distributed, these values from the normal distribution are often used as a rule of thumb. For example, σ is often taken to be half the width of the interval that contains the middle two thirds of the values in a sample.

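However, this practice is not recommended because it can lead to very large errors. For example, the distribution $P = 0.9\,\mathcal{N}(\mu, \sigma^2) + 0.1\,\mathcal{N}(\mu, (10\sigma)^2)$ is visually hardly distinguishable from a normal distribution, yet 92.5 % of its values lie in the interval $\mu \pm \bar\sigma$, where $\bar\sigma$ denotes the standard deviation of $P$. Such contaminated normal distributions are common in practice; the example describes ten precision machines producing a part, one of which is badly adjusted and produces deviations ten times as large as those of the other nine.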

Values outside of two to three times the standard deviation are often treated as outliers. Outliers can be an indication of gross errors in data collection. However, the data may also come from a highly skewed distribution. On the other hand, even with a normal distribution, on average about every 22nd measured value lies outside two standard deviations and about every 370th measured value lies outside three standard deviations.

Since the proportion of values outside six standard deviations becomes vanishingly small at around 2 ppb, such an interval is considered a good measure of almost complete coverage of all values. This is used in quality management by the Six Sigma method, in that the process requirements prescribe tolerance limits of at least $6\sigma$.

Expected proportions of the values of a normally distributed random variable within or outside the scatter intervals $[\mu - z\sigma;\ \mu + z\sigma]$

| Interval half-width | Percent within | Percent outside | ppb outside | Fraction outside |
| --- | --- | --- | --- | --- |
| 0.674490 σ | 50 % | 50 % | 500,000,000 | 1 / 2 |
| 0.994458 σ | 68 % | 32 % | 320,000,000 | 1 / 3.125 |
| 1 σ | 68.2689492 % | 31.7310508 % | 317,310,508 | 1 / 3.1514872 |
| 1.281552 σ | 80 % | 20 % | 200,000,000 | 1 / 5 |
| 1.644854 σ | 90 % | 10 % | 100,000,000 | 1 / 10 |
| 1.959964 σ | 95 % | 5 % | 50,000,000 | 1 / 20 |
| 2 σ | 95.4499736 % | 4.5500264 % | 45,500,264 | 1 / 21.977895 |
| 2.354820 σ | 98.1468322 % | 1.8531678 % | 18,531,678 | 1 / 54 |
| 2.575829 σ | 99 % | 1 % | 10,000,000 | 1 / 100 |
| 3 σ | 99.7300204 % | 0.2699796 % | 2,699,796 | 1 / 370.398 |
| 3.290527 σ | 99.9 % | 0.1 % | 1,000,000 | 1 / 1,000 |
| 3.890592 σ | 99.99 % | 0.01 % | 100,000 | 1 / 10,000 |
| 4 σ | 99.993666 % | 0.006334 % | 63,340 | 1 / 15,787 |
| 4.417173 σ | 99.999 % | 0.001 % | 10,000 | 1 / 100,000 |
| 4.891638 σ | 99.9999 % | 0.0001 % | 1,000 | 1 / 1,000,000 |
| 5 σ | 99.9999426697 % | 0.0000573303 % | 573.3303 | 1 / 1,744,278 |
| 5.326724 σ | 99.99999 % | 0.00001 % | 100 | 1 / 10,000,000 |
| 5.730729 σ | 99.999999 % | 0.000001 % | 10 | 1 / 100,000,000 |
| 6 σ | 99.9999998027 % | 0.0000001973 % | 1.973 | 1 / 506,797,346 |
| 6.109410 σ | 99.9999999 % | 0.0000001 % | 1 | 1 / 1,000,000,000 |
| 6.466951 σ | 99.99999999 % | 0.00000001 % | 0.1 | 1 / 10,000,000,000 |
| 6.806502 σ | 99.999999999 % | 0.000000001 % | 0.01 | 1 / 100,000,000,000 |
| 7 σ | 99.9999999997440 % | 0.000000000256 % | 0.00256 | 1 / 390,682,215,445 |

The probabilities $p$ for given scatter intervals $[\mu - z\sigma;\ \mu + z\sigma]$ can be calculated as

$$p = 2\Phi(z) - 1,$$

where $\Phi(z) = \frac{1}{\sqrt{2\pi}} \int_{-\infty}^{z} e^{-\frac{1}{2}x^2}\,dx$ is the distribution function of the standard normal distribution.

Conversely, for a given $p \in (0, 1)$,

$$z = \Phi^{-1}\!\left(\frac{p+1}{2}\right)$$

gives the limits of the associated scatter interval $[\mu - z\sigma;\ \mu + z\sigma]$ with probability $p$.
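Both directions of this calculation can be reproduced with Python's standard library. The sketch below assumes the relations $p = 2\Phi(z) - 1$ and $z = \Phi^{-1}((p+1)/2)$; the helper names `coverage` and `half_width` are introduced here for illustration.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist(mu=0.0, sigma=1.0)


def coverage(z: float) -> float:
    """Probability p that a normal variable lies within z standard deviations."""
    return 2.0 * STANDARD_NORMAL.cdf(z) - 1.0


def half_width(p: float) -> float:
    """Multiple z of sigma whose symmetric interval has probability p (0 < p < 1)."""
    return STANDARD_NORMAL.inv_cdf((p + 1.0) / 2.0)


for z in (1, 2, 3):
    print(f"{z} sigma: {100 * coverage(z):.4f} % within")

for p in (0.50, 0.90, 0.95, 0.99):
    print(f"{100 * p:.0f} %: z = {half_width(p):.6f}")
```

The printed values can be compared with the corresponding rows of the table of scatter intervals.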

An example (with variation range)

Human height is approximately normally distributed. In a sample of 1,284 girls and 1,063 boys between the ages of 14 and 18, the average height of girls was measured to be 166.3 cm (standard deviation 6.39 cm) and the average height of boys was measured to be 176.8 cm (standard deviation 7.46 cm).

Accordingly, the scatter intervals above suggest that 68.3 % of girls have a height in the range 166.3 cm ± 6.39 cm and 95.4 % in the range 166.3 cm ± 12.78 cm, so that

- 16 % [≈ (100 % - 68.3 %)/2] of girls are shorter than 160 cm (and correspondingly 16 % taller than 173 cm), and

- 2.5 % [≈ (100 % - 95.4 %)/2] of girls are shorter than 154 cm (and correspondingly 2.5 % taller than 179 cm).

For boys, 68 % can be expected to have a height in the range 176.8 cm ± 7.46 cm and 95 % in the range 176.8 cm ± 14.92 cm, so that

- 16 % of boys are shorter than 169 cm (and 16 % taller than 184 cm), and

- 2.5 % of boys are shorter than 162 cm (and 2.5 % taller than 192 cm).
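The rounded percentages in this example can be compared with values computed from the stated means and standard deviations. The following sketch assumes that heights are exactly normally distributed with those parameters, which is only an approximation of the sample.

```python
from statistics import NormalDist

# Parameters taken from the example above (heights in cm).
girls = NormalDist(mu=166.3, sigma=6.39)
boys = NormalDist(mu=176.8, sigma=7.46)


def share_between(dist: NormalDist, low: float, high: float) -> float:
    """Proportion of the distribution lying between low and high."""
    return dist.cdf(high) - dist.cdf(low)


girls_within_1sd = share_between(girls, 166.3 - 6.39, 166.3 + 6.39)
girls_below_160 = girls.cdf(160.0)
boys_within_2sd = share_between(boys, 176.8 - 14.92, 176.8 + 14.92)
boys_above_192 = 1.0 - boys.cdf(192.0)

print(f"girls within one sigma:  {100 * girls_within_1sd:.1f} %")
print(f"girls shorter than 160:  {100 * girls_below_160:.1f} %")
print(f"boys within two sigma:   {100 * boys_within_2sd:.1f} %")
print(f"boys taller than 192:    {100 * boys_above_192:.1f} %")
```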

Coefficient of variation

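From the expected value $\mu$ and the standard deviation $\sigma$ of the $\mathcal{N}(\mu, \sigma^2)$ distribution, the coefficient of variation is obtained directly as

$$\operatorname{VarK} = \frac{\sigma}{\mu}.$$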

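Skewness

The skewness is zero regardless of the parameters $\mu$ and $\sigma$.

Kurtosis

The kurtosis is also independent of $\mu$ and $\sigma$ and equals $3$. To make the kurtosis of other distributions easier to judge, it is often compared with that of the normal distribution: the kurtosis of the normal distribution is shifted to $0$ by subtracting $3$, and the resulting quantity is called excess.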

Cumulants

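The cumulant generating function is

$$g_X(t) = \mu t + \frac{\sigma^2 t^2}{2}.$$

Thus the first cumulant is $\kappa_1 = \mu$, the second is $\kappa_2 = \sigma^2$, and all further cumulants vanish.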

Characteristic function

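The characteristic function for a standard normally distributed random variable $Z \sim \mathcal{N}(0, 1)$ is

$$\varphi_Z(t) = e^{-\frac{1}{2}t^2}.$$

For a random variable $X \sim \mathcal{N}(\mu, \sigma^2)$, writing $X = \sigma Z + \mu$ gives

$$\varphi_X(t) = E\!\left(e^{it(\sigma Z + \mu)}\right) = e^{it\mu}\,\varphi_Z(\sigma t) = \exp\!\left(it\mu - \frac{1}{2}\sigma^2 t^2\right).$$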

Moment generating function

The moment generating function of the normal distribution is

$$m_X(t) = \exp\!\left(\mu t + \frac{\sigma^2 t^2}{2}\right).$$

Moments

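Let the random variable $X$ be $\mathcal{N}(\mu, \sigma^2)$-distributed. Then its first moments are as follows:

| Order $k$ | Moment $E(X^k)$ | Central moment $E((X-\mu)^k)$ |
| --- | --- | --- |
| 0 | $1$ | $1$ |
| 1 | $\mu$ | $0$ |
| 2 | $\mu^2 + \sigma^2$ | $\sigma^2$ |
| 3 | $\mu^3 + 3\mu\sigma^2$ | $0$ |
| 4 | $\mu^4 + 6\mu^2\sigma^2 + 3\sigma^4$ | $3\sigma^4$ |
| 5 | $\mu^5 + 10\mu^3\sigma^2 + 15\mu\sigma^4$ | $0$ |
| 6 | $\mu^6 + 15\mu^4\sigma^2 + 45\mu^2\sigma^4 + 15\sigma^6$ | $15\sigma^6$ |
| 7 | $\mu^7 + 21\mu^5\sigma^2 + 105\mu^3\sigma^4 + 105\mu\sigma^6$ | $0$ |
| 8 | $\mu^8 + 28\mu^6\sigma^2 + 210\mu^4\sigma^4 + 420\mu^2\sigma^6 + 105\sigma^8$ | $105\sigma^8$ |

All central moments $\mu_n$ can be expressed through the standard deviation $\sigma$:

$$\mu_n = \begin{cases} 0 & \text{if } n \text{ is odd,} \\ (n-1)!!\cdot\sigma^n & \text{if } n \text{ is even.} \end{cases}$$

The double factorial was used here:

$$(n-1)!! = (n-1)\cdot(n-3)\cdots 3\cdot 1.$$

A formula can also be given for the non-central moments of $X \sim \mathcal{N}(\mu, \sigma^2)$:

$$E(X^n) = \sum_{j=0}^{\lfloor n/2 \rfloor} \binom{n}{2j}\,(2j-1)!!\;\sigma^{2j}\,\mu^{n-2j}.$$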
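The moment formulas can be evaluated mechanically. The sketch below assumes the standard closed forms, namely $(n-1)!!\,\sigma^n$ for even central moments and $\sum_j \binom{n}{2j}(2j-1)!!\,\sigma^{2j}\mu^{n-2j}$ for non-central moments; the function names are illustrative.

```python
import math


def double_factorial(n: int) -> int:
    """n!! = n (n-2) (n-4) ...; by convention (-1)!! = 0!! = 1."""
    result = 1
    while n > 1:
        result *= n
        n -= 2
    return result


def central_moment(n: int, sigma: float) -> float:
    """E((X - mu)^n) for X ~ N(mu, sigma^2); zero for odd n."""
    if n % 2 == 1:
        return 0.0
    return double_factorial(n - 1) * sigma ** n


def raw_moment(n: int, mu: float, sigma: float) -> float:
    """E(X^n) for X ~ N(mu, sigma^2)."""
    return sum(
        math.comb(n, 2 * j)
        * double_factorial(2 * j - 1)
        * sigma ** (2 * j)
        * mu ** (n - 2 * j)
        for j in range(n // 2 + 1)
    )


for k in range(0, 9):
    print(k, raw_moment(k, mu=1.0, sigma=2.0), central_moment(k, sigma=2.0))
```

For $\mu = 0$ the two functions agree, since the non-central and central moments then coincide.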

Invariance to convolution

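The normal distribution is invariant under convolution, i.e., the sum of independent normally distributed random variables is again normally distributed (see also stable distributions and infinitely divisible distributions). Thus, the normal distribution forms a convolution semigroup in its two parameters. An illustrative formulation of this fact is: the convolution of a Gaussian curve of half-width $\Gamma_a$ with a Gaussian curve of half-width $\Gamma_b$ is again a Gaussian curve, with half-width

$$\Gamma_c = \sqrt{\Gamma_a^2 + \Gamma_b^2}.$$

So if $X$ and $Y$ are two independent random variables with

$$X \sim \mathcal{N}(\mu_X, \sigma_X^2) \quad\text{and}\quad Y \sim \mathcal{N}(\mu_Y, \sigma_Y^2),$$

then their sum is also normally distributed:

$$X + Y \sim \mathcal{N}(\mu_X + \mu_Y,\ \sigma_X^2 + \sigma_Y^2).$$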

This can be shown, for example, by using characteristic functions, using that the characteristic function of the sum is the product of the characteristic functions of the summands (cf. convolution theorem of the Fourier transform).

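More generally, let $n$ independent normally distributed random variables $X_i \sim \mathcal{N}(\mu_i, \sigma_i^2)$ be given. Then every linear combination is again normally distributed:

$$\sum_{i=1}^{n} c_i X_i \sim \mathcal{N}\!\left(\sum_{i=1}^{n} c_i\mu_i,\ \sum_{i=1}^{n} c_i^2\sigma_i^2\right);$$

in particular, the sum of the random variables is again normally distributed,

$$\sum_{i=1}^{n} X_i \sim \mathcal{N}\!\left(\sum_{i=1}^{n} \mu_i,\ \sum_{i=1}^{n} \sigma_i^2\right),$$

and so is the arithmetic mean:

$$\frac{1}{n}\sum_{i=1}^{n} X_i \sim \mathcal{N}\!\left(\frac{1}{n}\sum_{i=1}^{n} \mu_i,\ \frac{1}{n^2}\sum_{i=1}^{n} \sigma_i^2\right).$$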

According to Cramér's theorem, even the reverse is true: If a normally distributed random variable is the sum of independent random variables, then the summands are also normally distributed.
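The closure of the normal family under sums of independent variables is built into Python's `statistics.NormalDist`, whose `+` operator between two instances models the sum of independent variables and whose division by a constant rescales the variable. The sketch below uses illustrative parameters and assumes independence throughout.

```python
from statistics import NormalDist

# Two independent normal variables (parameters are illustrative).
x = NormalDist(mu=1.0, sigma=3.0)
y = NormalDist(mu=2.0, sigma=4.0)

# Means add and variances add, so sigma = sqrt(3**2 + 4**2).
total = x + y
print(total.mean, total.stdev)

# Arithmetic mean of n independent N(10, 2^2) variables.
n = 4
running_sum = NormalDist(mu=10.0, sigma=2.0)
for _ in range(n - 1):
    running_sum = running_sum + NormalDist(mu=10.0, sigma=2.0)
average = running_sum / n
print(average.mean, average.stdev)  # stdev shrinks by a factor sqrt(n)
```

Note that `x + x` in this API also denotes the sum of two independent copies, not the variable $2X$; the latter would be written `x * 2`.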

The density function of the normal distribution is a fixed point of the Fourier transform, i.e., the Fourier transform of a Gaussian curve is again a Gaussian curve. The product of the standard deviations of these corresponding Gaussian curves is constant; the Heisenberg uncertainty principle applies.

Entropy

The normal distribution has entropy

$$\log\!\left(\sigma\sqrt{2\pi e}\right).$$

Since, for a given expected value and a given variance, it has the largest entropy among all distributions, it is often used as the prior distribution in the maximum entropy method.

Normal distribution (a) and contaminated normal distribution (b)

Relationships with other distribution functions

Transformation to standard normal distribution

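A normal distribution with arbitrary $\mu$ and $\sigma$ and distribution function $F$ is related to the $\mathcal{N}(0, 1)$ distribution as follows:

$$F(x) = \Phi\!\left(\frac{x-\mu}{\sigma}\right).$$

Here $\Phi$ is the distribution function of the standard normal distribution.

If $X \sim \mathcal{N}(\mu, \sigma^2)$, then the standardization

$$Z = \frac{X-\mu}{\sigma}$$

leads to a standard normally distributed random variable $Z$, because

$$P(Z \leq z) = P\!\left(\frac{X-\mu}{\sigma} \leq z\right) = P(X \leq \sigma z + \mu) = F(\sigma z + \mu) = \Phi(z).$$

Geometrically, the substitution corresponds to an area-preserving transformation of the bell curve of $\mathcal{N}(\mu, \sigma^2)$ into the bell curve of $\mathcal{N}(0, 1)$.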

Approximation of the binomial distribution by the normal distribution

→ Main article: Normal approximation

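The normal distribution can be used to approximate the binomial distribution if the sample size is sufficiently large and the proportion of the sought property in the population is neither too large nor too small (de Moivre-Laplace theorem, central limit theorem; for experimental confirmation see also Galton board).

Consider a Bernoulli experiment with $n$ mutually independent stages (random trials) and success probability $p$. The probability of $k$ successes is in general

$$P(X = k) = \binom{n}{k}\, p^k (1-p)^{n-k}, \quad k = 0, 1, \dots, n$$

(binomial distribution).

This binomial distribution can be approximated by a normal distribution if $n$ is sufficiently large and $p$ is neither too large nor too small. The rule of thumb is $np(1-p) \geq 9$. The expected value and the standard deviation are then

$$\mu = np \quad\text{and}\quad \sigma = \sqrt{np(1-p)}.$$

Thus, for the standard deviation, $\sigma \geq 3$.

If this condition is not satisfied, the inaccuracy of the approximation is still acceptable if $np \geq 4$ and at the same time $n(1-p) \geq 4$.

The following approximation is then useful:

$$P(x_1 \leq X \leq x_2) = \sum_{k=x_1}^{x_2} \binom{n}{k}\, p^k (1-p)^{n-k} \approx \Phi\!\left(\frac{x_2 + 0.5 - \mu}{\sigma}\right) - \Phi\!\left(\frac{x_1 - 0.5 - \mu}{\sigma}\right).$$

In the normal distribution, the lower limit is reduced by 0.5 and the upper limit is increased by 0.5 to ensure a better approximation. This is also called "continuity correction". It can be omitted only if $\sigma$ is very large.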

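Since the binomial distribution is discrete, some points must be taken into account:

- The difference between $<$ and $\leq$ (as well as between greater than and greater than or equal to) must be taken into account, which is not the case for the normal distribution. Therefore, for $P(X_{\text{Bin}} < x)$ the next smaller natural number must be chosen, i.e.

$$P(X_{\text{Bin}} < x) = P(X_{\text{Bin}} \leq x - 1) \quad\text{or}\quad P(X_{\text{Bin}} > x) = P(X_{\text{Bin}} \geq x + 1),$$

so that the normal distribution can be used for further calculations. For example, $P(X_{\text{Bin}} < 70) = P(X_{\text{Bin}} \leq 69)$.

- In addition,

$$P(X_{\text{Bin}} \leq x) = P(0 \leq X_{\text{Bin}} \leq x), \quad P(X_{\text{Bin}} \geq x) = P(x \leq X_{\text{Bin}} \leq n), \quad P(X_{\text{Bin}} = x) = P(x \leq X_{\text{Bin}} \leq x)$$

(the last necessarily with continuity correction), so all of these can be calculated by the formula given above.

The great advantage of the approximation is that very many stages of a binomial distribution can be handled quickly and easily.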
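The quality of the approximation can be checked against the exact binomial sum. The following sketch assumes the usual continuity-corrected formula with $\mu = np$ and $\sigma = \sqrt{np(1-p)}$; the function names and the example values $n = 100$, $p = 0.5$ are chosen here for illustration.

```python
import math
from statistics import NormalDist


def binomial_interval_exact(n: int, p: float, x1: int, x2: int) -> float:
    """Exact P(x1 <= X <= x2) for X ~ Bin(n, p)."""
    return sum(
        math.comb(n, k) * p ** k * (1.0 - p) ** (n - k)
        for k in range(x1, x2 + 1)
    )


def binomial_interval_normal(n: int, p: float, x1: int, x2: int) -> float:
    """Normal approximation of P(x1 <= X <= x2) with continuity correction."""
    mu = n * p
    sigma = math.sqrt(n * p * (1.0 - p))
    standard = NormalDist()
    upper = standard.cdf((x2 + 0.5 - mu) / sigma)
    lower = standard.cdf((x1 - 0.5 - mu) / sigma)
    return upper - lower


n, p = 100, 0.5            # n p (1 - p) = 25, so the rule of thumb holds
exact = binomial_interval_exact(n, p, 45, 55)
approx = binomial_interval_normal(n, p, 45, 55)
print(f"exact  = {exact:.6f}")
print(f"approx = {approx:.6f}")
```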

Relationship to Cauchy distribution

The quotient of two stochastically independent $\mathcal{N}(0, 1)$ standard normally distributed random variables is Cauchy distributed.

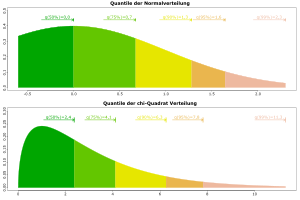

Relationship to Chi-Square Distribution

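The square of a standard normally distributed random variable has a chi-squared distribution with one degree of freedom. Thus, if $Z \sim \mathcal{N}(0, 1)$, then $Z^2 \sim \chi^2(1)$. Furthermore, if $\chi^2(r_1), \chi^2(r_2), \dots, \chi^2(r_n)$ are stochastically independent chi-squared distributed random variables, then

$$Y = \chi^2(r_1) + \chi^2(r_2) + \dots + \chi^2(r_n) \sim \chi^2(r_1 + \dots + r_n).$$

From this it follows, for independent and standard normally distributed random variables $Z_1, Z_2, \dots, Z_n$, that

$$Y = Z_1^2 + \dots + Z_n^2 \sim \chi^2(n).$$

Other relationships are:

- The sum $X_{n-1} = \frac{1}{\sigma^2} \sum_{i=1}^{n} (Z_i - \bar{Z})^2$ with $\bar{Z} := \frac{1}{n} \sum_{i=1}^{n} Z_i$ and $n$ independent normally distributed random variables $Z_i \sim \mathcal{N}(\mu, \sigma^2)$, $i = 1, \dots, n$, satisfies a chi-squared distribution $X_{n-1} \sim \chi^2_{n-1}$ with $n - 1$ degrees of freedom.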

- As the number of degrees of freedom increases (df ≫ 100), the chi-square distribution approaches the normal distribution.

- The chi-square distribution is used for confidence estimation for the variance of a normally distributed population.
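The connection between squared standard normal variables and the chi-squared distribution can be illustrated by simulation. The sketch below relies on the known facts that a chi-squared distribution with $n$ degrees of freedom has mean $n$ and variance $2n$; the sample size, the seed and the function name are arbitrary choices made for this illustration.

```python
import random


def sum_of_squares_sample(df: int, size: int, seed: int = 0) -> list[float]:
    """Draw `size` values of Z_1^2 + ... + Z_df^2 with independent Z_i ~ N(0, 1)."""
    rng = random.Random(seed)
    return [
        sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(df))
        for _ in range(size)
    ]


df = 3
sample = sum_of_squares_sample(df=df, size=20000)
sample_mean = sum(sample) / len(sample)
sample_variance = sum((v - sample_mean) ** 2 for v in sample) / (len(sample) - 1)

print(f"sample mean     = {sample_mean:.3f} (theory: {df})")
print(f"sample variance = {sample_variance:.3f} (theory: {2 * df})")
```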

Relationship to Rayleigh distribution

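The magnitude $Z = \sqrt{X^2 + Y^2}$ of two independent normally distributed random variables $X$ and $Y$, each with mean $\mu_X = \mu_Y = 0$ and the same variance $\sigma_X^2 = \sigma_Y^2 = \sigma^2$, is Rayleigh distributed with parameter $\sigma > 0$.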

Relationship to log normal distribution

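If the random variable $X$ is normally distributed with $\mathcal{N}(\mu, \sigma^2)$, then the random variable $Y = e^X$ is log-normally distributed, i.e. $Y \sim \mathcal{LN}(\mu, \sigma^2)$.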

The emergence of a logarithmic normal distribution is due to multiplicative, that of a normal distribution to additive interaction of many random variables.

Relationship to F-Distribution

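If the stochastically independent and identically normally distributed random variables $X_1^{(1)}, X_2^{(1)}, \dots, X_{n_1}^{(1)}$ and $X_1^{(2)}, X_2^{(2)}, \dots, X_{n_2}^{(2)}$ have the parameters

$$E(X_i^{(1)}) = \mu_1,\ \sqrt{\operatorname{Var}(X_i^{(1)})} = \sigma_1 \quad\text{and}\quad E(X_i^{(2)}) = \mu_2,\ \sqrt{\operatorname{Var}(X_i^{(2)})} = \sigma_2$$

with $\sigma_1 = \sigma_2 = \sigma$, then the random variable

$$Y_{n_1-1,\,n_2-1} := \frac{(n_2-1)\sum_{i=1}^{n_1}\left(X_i^{(1)} - \bar{X}^{(1)}\right)^2}{(n_1-1)\sum_{j=1}^{n_2}\left(X_j^{(2)} - \bar{X}^{(2)}\right)^2}$$

follows an F-distribution with $(n_1 - 1,\ n_2 - 1)$ degrees of freedom, where $\bar{X}^{(1)}$ and $\bar{X}^{(2)}$ are the two sample means.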

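Relationship to Student's t-distribution

If the independent random variables $X_1, X_2, \dots, X_n$ are identically normally distributed with parameters $\mu$ and $\sigma$, then the continuous random variable

$$Y_{n-1} = \frac{\bar{X} - \mu}{S/\sqrt{n}} = \sqrt{n}\,\frac{\bar{X} - \mu}{S}$$

with sample mean $\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$ and sample variance $S^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$ follows a Student's t-distribution with $n - 1$ degrees of freedom.

For an increasing number of degrees of freedom, the Student's t-distribution approaches the normal distribution more and more closely. As a rule of thumb, from approximately $df > 30$ the Student's t-distribution can be approximated by the normal distribution if necessary.

The Student's t-distribution is used for confidence estimation for the expected value of a normally distributed random variable with unknown variance.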

Calculating with the standard normal distribution

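In tasks where the probability for $\mathcal{N}(\mu, \sigma^2)$-distributed random variables $X$ is to be determined using the standard normal distribution, it is not necessary to carry out the full transformation each time. Instead, the transformation

$$Z = \frac{X - \mu}{\sigma}$$

is simply used to generate an $\mathcal{N}(0, 1)$-distributed random variable $Z$.

The probability for the event that, for example, $X$ lies in the interval $[x, y]$ is then equal to a probability of the standard normal distribution:

$$P(x \leq X \leq y) = P\!\left(\frac{x-\mu}{\sigma} \leq Z \leq \frac{y-\mu}{\sigma}\right) = \Phi\!\left(\frac{y-\mu}{\sigma}\right) - \Phi\!\left(\frac{x-\mu}{\sigma}\right).$$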
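That standardization gives the same interval probability as working with the original distribution can be confirmed numerically. In the sketch below the parameters $\mu = 100$, $\sigma = 15$ and the interval $[85, 130]$ are hypothetical example values.

```python
from statistics import NormalDist


def interval_probability(x: float, y: float, mu: float, sigma: float) -> float:
    """P(x <= X <= y) for X ~ N(mu, sigma^2), computed via Z = (X - mu) / sigma."""
    standard = NormalDist()  # N(0, 1)
    return standard.cdf((y - mu) / sigma) - standard.cdf((x - mu) / sigma)


mu, sigma = 100.0, 15.0
via_standardization = interval_probability(85.0, 130.0, mu, sigma)

# The same probability computed directly from N(mu, sigma^2).
original = NormalDist(mu, sigma)
direct = original.cdf(130.0) - original.cdf(85.0)

print(f"via standardization: {via_standardization:.6f}")
print(f"direct:              {direct:.6f}")
```

Here the bounds correspond to $z = -1$ and $z = 2$, so the result equals $\Phi(2) - \Phi(-1)$.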

Basic questions

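In general, the distribution function gives the area under the bell curve up to the value $x$, i.e., the definite integral from $-\infty$ to $x$.

In exercises this corresponds to the probability that the random variable $X$ is smaller than, or not larger than, a given number $x$. Because the normal distribution is continuous, it makes no difference whether $<$ or $\leq$ is required, since for example $P(X = 3) = \int_3^3 f(x)\,dx = 0$ and therefore $P(X < 3) = P(X \leq 3)$.

The same applies to "larger" and "not smaller".

Because $X$ can only be smaller or larger than a bound (or lie inside or outside two bounds), two basic questions arise in probability calculations for normal distributions:

- What is the probability that, in a random experiment, the standard normally distributed random variable $Z$ takes at most the value $z$?

$$P(Z \leq z) = \Phi(z)$$

In school mathematics, the term left tail is occasionally used for this statement, since the area under the Gaussian curve runs from the left up to the boundary. Negative values of $z$ are also allowed. Many tables of the standard normal distribution contain only positive entries; because of the symmetry of the curve and the negativity rule $\Phi(-z) = 1 - \Phi(z)$ of the "left tail", however, this is not a restriction.

- What is the probability that, in a random experiment, the standard normally distributed random variable $Z$ takes at least the value $z$?

$$P(Z \geq z) = 1 - \Phi(z)$$

Here the term right tail is occasionally used; with $P(Z \geq -z) = 1 - \Phi(-z) = 1 - (1 - \Phi(z)) = \Phi(z)$ there is also a negativity rule here.

Since any random variable $X$ with a general normal distribution can be converted into the random variable $Z = \frac{X - \mu}{\sigma}$ with the standard normal distribution, the questions apply equally to both quantities.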

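Scatter range and anti-scatter range

Often the probability for a scatter range is of interest, i.e. the probability that the standard normally distributed random variable $Z$ takes values between $z_1$ and $z_2$:

$$P(z_1 \leq Z \leq z_2) = \Phi(z_2) - \Phi(z_1).$$

In the special case of the symmetric scatter range ($z_1 = -z_2$, with $z_2 > 0$):

$$P(-z \leq Z \leq z) = P(|Z| \leq z) = \Phi(z) - \Phi(-z) = \Phi(z) - (1 - \Phi(z)) = 2\Phi(z) - 1.$$

For the corresponding anti-scatter range, the probability that the standard normally distributed random variable $Z$ takes values outside the range between $z_1$ and $z_2$ is

$$P(Z \leq z_1 \text{ or } Z \geq z_2) = \Phi(z_1) + (1 - \Phi(z_2)).$$

Thus, for a symmetric anti-scatter range,

$$P(Z \leq -z \text{ or } Z \geq z) = P(|Z| \geq z) = \Phi(-z) + 1 - \Phi(z) = 2 - 2\Phi(z).$$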

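Scatter ranges using the example of quality assurance

Both scatter ranges are of particular importance, for example, in the quality assurance of technical or economic production processes. Here there are tolerance limits $x_1$ and $x_2$ to be observed, and usually a largest still acceptable deviation $\epsilon$ from the expected value $\mu$ (the optimal target value). The standard deviation $\sigma$, on the other hand, can be obtained empirically from the production process.

If $[x_1; x_2] = [\mu - \epsilon;\ \mu + \epsilon]$ is specified as the tolerance interval to be observed, then, depending on the question, a symmetric scatter range or anti-scatter range is present.

In the case of the scatter range:

$$P(x_1 \leq X \leq x_2) = P(|X - \mu| \leq \epsilon) = P\!\left(-\frac{\epsilon}{\sigma} \leq Z \leq \frac{\epsilon}{\sigma}\right) = \Phi\!\left(\frac{\epsilon}{\sigma}\right) - \Phi\!\left(-\frac{\epsilon}{\sigma}\right) = 2\Phi\!\left(\frac{\epsilon}{\sigma}\right) - 1 = \gamma.$$

The anti-scatter range is then given by

$$P(|X - \mu| \geq \epsilon) = 1 - \gamma,$$

or, if no scatter range was calculated, by

$$P(|X - \mu| \geq \epsilon) = 2\left(1 - \Phi\!\left(\frac{\epsilon}{\sigma}\right)\right) = \alpha.$$

Thus, the result $\gamma$ is the probability for salable products, while $\alpha$ is the probability for rejects, both depending on the specifications of $\mu$, $\sigma$ and $\epsilon$.

If it is known that the maximum deviation $\epsilon$ is symmetric about the expected value, then questions are also possible in which the probability is given and one of the other quantities is to be calculated.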
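The quality-assurance calculation, including the inverse question in which the probability is given and the tolerance is sought, can be sketched as follows. The numerical values (a standard deviation of 0.02 mm and a tolerance of 0.05 mm) are hypothetical, and the function names are introduced here for illustration.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def acceptance_probability(epsilon: float, sigma: float) -> float:
    """gamma = P(|X - mu| <= epsilon) = 2 * Phi(epsilon / sigma) - 1."""
    return 2.0 * STANDARD_NORMAL.cdf(epsilon / sigma) - 1.0


def reject_probability(epsilon: float, sigma: float) -> float:
    """alpha = P(|X - mu| >= epsilon) = 2 * (1 - Phi(epsilon / sigma))."""
    return 2.0 * (1.0 - STANDARD_NORMAL.cdf(epsilon / sigma))


def tolerance_for(gamma: float, sigma: float) -> float:
    """Inverse question: the epsilon that yields acceptance probability gamma."""
    return sigma * STANDARD_NORMAL.inv_cdf((gamma + 1.0) / 2.0)


sigma = 0.02    # hypothetical process standard deviation in mm
epsilon = 0.05  # hypothetical largest acceptable deviation in mm

gamma = acceptance_probability(epsilon, sigma)
alpha = reject_probability(epsilon, sigma)
print(f"salable: {100 * gamma:.3f} %, rejects: {100 * alpha:.3f} %")
print(f"tolerance needed for 99 % salable parts: {tolerance_for(0.99, sigma):.4f} mm")
```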

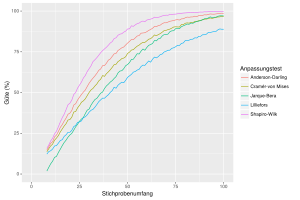

Testing for normal distribution

To check whether data are normally distributed, the following methods and tests can be used, among others:

- Chi-square test

- Kolmogorov-Smirnov test

- Anderson-Darling test (modification of the Kolmogorov-Smirnov test)

- Lilliefors test (modification of the Kolmogorov-Smirnov test)

- Cramér-von Mises test

- Shapiro-Wilk test

- Jarque-Bera test

- Q-Q plot (descriptive review)

- Maximum likelihood method (descriptive test)

The tests differ in the kinds of deviation from the normal distribution that they detect. For example, the Kolmogorov-Smirnov test is more likely to detect deviations in the center of the distribution than deviations in the tails, while the Jarque-Bera test is quite sensitive to strongly deviating individual values in the tails ("heavy tails").
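As an illustration of one of the listed tests, the Jarque-Bera statistic can be computed with the standard library alone. The sketch below assumes the usual definition $JB = \frac{n}{6}\left(S^2 + \frac{(K-3)^2}{4}\right)$ with sample skewness $S$ and sample kurtosis $K$, and uses the asymptotic chi-squared distribution with two degrees of freedom for the p-value, which can be inaccurate for small samples. The two data sets are deterministic quantile grids chosen for this example, not real measurements.

```python
import math
from statistics import NormalDist


def jarque_bera(data: list[float]) -> tuple[float, float]:
    """Return the Jarque-Bera statistic and its asymptotic p-value."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n
    m3 = sum((x - mean) ** 3 for x in data) / n
    m4 = sum((x - mean) ** 4 for x in data) / n
    skewness = m3 / m2 ** 1.5
    kurtosis = m4 / m2 ** 2
    statistic = n / 6.0 * (skewness ** 2 + (kurtosis - 3.0) ** 2 / 4.0)
    # Survival function of the chi-squared distribution with 2 degrees of freedom.
    p_value = math.exp(-statistic / 2.0)
    return statistic, p_value


n = 200
probabilities = [(i + 0.5) / n for i in range(n)]
normal_like = [NormalDist().inv_cdf(u) for u in probabilities]  # normal quantiles
skewed = [-math.log(1.0 - u) for u in probabilities]            # exponential quantiles

print("normal-like:", jarque_bera(normal_like))
print("skewed:     ", jarque_bera(skewed))
```

The skewed data set produces a large statistic driven by its skewness, whereas the grid of normal quantiles does not lead to rejection at the 5 % level.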

In contrast to the Kolmogorov-Smirnov test, the Lilliefors test does not require standardization, i.e., $\mu$ and $\sigma$ of the assumed normal distribution may be unknown.

With the help of quantile-quantile diagrams or normal-quantile diagrams, a simple graphical check for normal distribution is possible.

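With the maximum likelihood method, the parameters $\mu$ and $\sigma$ of the normal distribution can be estimated, and the empirical data can be compared graphically with the fitted normal distribution.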

A χ²-distributed random variable with 5 degrees of freedom is tested for normal distribution. For each sample size, 10,000 samples are simulated, and 5 goodness-of-fit tests are then each performed at the 5 % level.

Quantiles of a normal distribution and a chi-square distribution

Parameter estimation, confidence intervals and tests

→ Main article: Normal distribution model

Many of the statistical problems in which the normal distribution occurs have been well studied. The most important case is the so-called normal distribution model, in which one assumes that $n$ independent and normally distributed experiments are carried out. Three cases arise:

- the expected value is unknown and the variance is known,

- the variance is unknown and the expected value is known,

- the expected value and the variance are both unknown.

Depending on which of these cases occurs, different estimators, confidence intervals or tests result. These are summarized in detail in the main article Normal Distribution Model.

The following estimators are of particular importance:

- The sample mean

$$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$$

is an unbiased estimator for the unknown expected value, both when the variance is known and when it is unknown. It is even the best unbiased estimator, i.e., the unbiased estimator with the smallest variance. Both the maximum likelihood method and the method of moments yield the sample mean as an estimator.

- The uncorrected sample variance

$$V = \frac{1}{n}\sum_{i=1}^{n} (X_i - \mu_0)^2$$

is an unbiased estimator for the unknown variance when the expected value $\mu_0$ is known. It, too, can be obtained from both the maximum likelihood method and the method of moments.

- The corrected sample variance

$$S^2 = \frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2$$

is an unbiased estimator for the unknown variance when the expected value is unknown.
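The three estimators can be written out in a few lines. The sketch below assumes the standard definitions (division by $n$ when the expected value is known, division by $n - 1$ when it is estimated from the data); the data values and the known expected value `mu0` are made up for illustration.

```python
def sample_mean(data: list[float]) -> float:
    """Estimator for the expected value."""
    return sum(data) / len(data)


def uncorrected_variance(data: list[float], mu0: float) -> float:
    """Variance estimator for a known expected value mu0 (divides by n)."""
    return sum((x - mu0) ** 2 for x in data) / len(data)


def corrected_variance(data: list[float]) -> float:
    """Variance estimator for an unknown expected value (divides by n - 1)."""
    n = len(data)
    if n < 2:
        raise ValueError("at least two observations are required")
    mean = sample_mean(data)
    return sum((x - mean) ** 2 for x in data) / (n - 1)


data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # illustrative observations
print(sample_mean(data))
print(uncorrected_variance(data, mu0=5.0))
print(corrected_variance(data))
```

The corrected estimator is larger than the uncorrected one evaluated at the sample mean by the factor $n/(n-1)$, which compensates for estimating the expected value from the same data.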

Applications outside the probability calculus

The normal distribution can also be used to describe facts that are not directly stochastic, for example in physics for the amplitude profile of Gaussian beams and other distribution profiles.

In addition, it is used in the Gabor transformation.

See also

- Additive white Gaussian noise

- Linear regression

Questions and Answers

Q: What is the normal distribution?

A: The normal distribution is a probability distribution that is very important in many fields of science.

Q: Who discovered the normal distribution?

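A: The normal distribution is named after Carl Friedrich Gauss, who applied it to the analysis of measurement errors. Abraham de Moivre had already used it earlier as an approximation to the binomial distribution.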

Q: What do location and scale parameters in normal distributions represent?

A: The mean ("average") of the distribution defines its location, and the standard deviation ("variability") defines the scale of normal distributions.

Q: How are the location and scale parameters of normal distributions represented?

A: The mean and standard deviation of normal distributions are represented by the symbols μ and σ, respectively.

Q: What is the standard normal distribution?

A: The standard normal distribution (also known as the Z distribution) is the normal distribution with a mean of zero and a standard deviation of one.

Q: Why is the standard normal distribution often called the bell curve?

A: The standard normal distribution is often called the bell curve because the graph of its probability density looks like a bell.

Q: Why do many values follow a normal distribution?

A: Many values follow a normal distribution because of the central limit theorem, which says that a quantity formed as the sum of a large number of independent random influences is approximately normally distributed under weak conditions.
