Newton's method

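The Newton method, also Newton-Raphson method (named after Sir Isaac Newton, 1669, and Joseph Raphson, 1690), is a commonly used approximation algorithm in mathematics for the numerical solution of nonlinear equations and systems of equations. In the case of an equation with one variable, given a continuously differentiable function $f\colon \mathbb{R} \to \mathbb{R}$, it yields approximate solutions of the equation $f(x) = 0$, i.e. approximations of the zeros of this function. The basic idea is to linearize the function at a starting point, i.e. to determine its tangent, and to use the zero of the tangent as an improved approximation of the zero of the function.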

Formally expressed, starting from an initial value $x_0 \in \mathbb{R}$, the iteration

$$x_{n+1} := x_n - \frac{f(x_n)}{f'(x_n)}$$

is repeated until sufficient accuracy is achieved.
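The iteration can be sketched in Python as follows. This is a minimal illustration, not a robust solver: it assumes the derivative is supplied as a separate function and uses a relative step-size test as the "sufficient accuracy" criterion; the names `newton`, `tol` and `max_iter` are illustrative choices.

```python
import math

def newton(f, df, x0, tol=1e-12, max_iter=50):
    """Iterate x_{n+1} = x_n - f(x_n) / f'(x_n) starting from x0.

    Stops when the Newton step is small relative to |x|; raises if the
    derivative vanishes or if max_iter steps are not enough.
    """
    x = x0
    for _ in range(max_iter):
        d = df(x)
        if d == 0.0:
            raise ZeroDivisionError(f"f'(x) = 0 at x = {x}")
        step = f(x) / d
        x -= step
        if abs(step) <= tol * max(1.0, abs(x)):
            return x
    raise RuntimeError("no convergence within max_iter steps")

# Zero of f(x) = cos(x) - x, starting from x0 = 1
root = newton(lambda x: math.cos(x) - x, lambda x: -math.sin(x) - 1.0, 1.0)
print(root)
```

The step-size test is only one possible stopping rule; checking $|f(x_n)|$ instead (or in addition) is equally common.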

Newton method for real functions of one variable

Historical facts about the Newton method

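Isaac Newton wrote the work "Methodus fluxionum et serierum infinitarum" (Latin for: Of the method of fluxions and infinite series) in the period 1664 to 1671. In it he explains a new algorithm for solving a polynomial equation using the example $y^3 - 2y - 5 = 0$. The point $y = 2$ is easily guessed as a first approximation. Newton set $y = 2 + p$ with a $p$ assumed to be small and substituted this into the equation.

According to the binomial formulae, $(2 + p)^3 = 8 + 12p + 6p^2 + p^3$, so the equation becomes $p^3 + 6p^2 + 10p - 1 = 0$.

Since $p$ is supposed to be small, the higher-order terms can be neglected compared to the linear and constant terms, which leaves $10p - 1 = 0$, i.e. $p = 0.1$. The procedure can then be repeated with $p = 0.1 + q$, and so on.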
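Newton's substitution can be replayed mechanically: shift the polynomial to the current approximation, keep only the constant and linear terms, and solve. The sketch below is a modern reconstruction under that reading, not Newton's own notation; `taylor_shift` and `newton_by_substitution` are hypothetical helper names. Keeping only the linear part amounts to one step of the derivative-based rule, because the constant and linear coefficients of the shifted polynomial are $f(y)$ and $f'(y)$.

```python
def taylor_shift(coeffs, c):
    """Coefficients of p(c + t) in powers of t, by repeated synthetic division.

    coeffs lists the coefficients from the highest degree down,
    e.g. y^3 - 2y - 5 -> [1, 0, -2, -5]; the result uses the same order,
    so its last two entries are p(c) and p'(c).
    """
    a = list(coeffs)
    n = len(a) - 1
    for i in range(n):
        for j in range(1, n + 1 - i):
            a[j] += c * a[j - 1]
    return a

def newton_by_substitution(coeffs, y0, steps):
    """Repeat: substitute y = y_k + p, drop p^2 and higher terms, solve for p."""
    y = y0
    history = [y]
    for _ in range(steps):
        shifted = taylor_shift(coeffs, y)
        constant, linear = shifted[-1], shifted[-2]
        y += -constant / linear
        history.append(y)
    return history

cubic = [1, 0, -2, -5]               # y^3 - 2y - 5
shifted = taylor_shift(cubic, 2)     # [1, 6, 10, -1], i.e. p^3 + 6p^2 + 10p - 1
ys = newton_by_substitution(cubic, 2.0, 4)
print(ys)
```

The first step reproduces $p = 0.1$, i.e. $y = 2.1$; the following steps correspond to the repeated substitutions $p = 0.1 + q$ and so on.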

Joseph Raphson formally described this computational process in 1690 in the work "Analysis Aequationum universalis" and illustrated the formalism on the general equation of the third degree, for which he derived an iteration rule.

The abstract form of the method, which uses the derivative, is due to Thomas Simpson (1740).

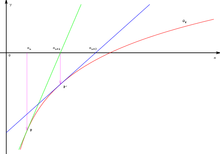

Construction on the graph

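Descriptively, one arrives at this procedure as follows: Let $f\colon \mathbb{R} \to \mathbb{R}$ be a continuously differentiable function whose zero is sought, and let $x_0$ be an approximation of this zero.

The tangent to the graph of $f$ at the point $x_0$ is

$$t(x) = f(x_0) + f'(x_0)\,(x - x_0).$$

We choose as $x_1$ the zero of the tangent, provided $f'(x_0) \neq 0$:

$$0 = f(x_0) + f'(x_0)\,(x_1 - x_0) \quad\Longrightarrow\quad x_1 = x_0 - \frac{f(x_0)}{f'(x_0)}.$$

Applying this construction several times, we obtain from a first point $x_0$ an infinite sequence of points $(x_n)_{n \in \mathbb{N}}$, which is defined by the recursion

$$x_{n+1} := N_f(x_n) := x_n - \frac{f(x_n)}{f'(x_n)}.$$

This prescription is also called Newton iteration, and the function $N_f$ is called the Newton operator.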

The art of using Newton's method lies in finding suitable initial values $x_0$.

Many nonlinear equations have multiple solutions; for example, a polynomial of degree $n$ has up to $n$ real zeros.
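To illustrate that different starting values can lead to different zeros, the following sketch applies the iteration to the hypothetical cubic $p(x) = x^3 - x$, whose zeros are $-1$, $0$ and $1$. The starting values are chosen by hand for this example, and the `newton` helper is an illustrative implementation, not a library function.

```python
def newton(f, df, x0, tol=1e-12, max_iter=100):
    """Plain Newton iteration; stops on an exact zero or a small relative step."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if fx == 0.0:
            return x
        step = fx / df(x)
        x -= step
        if abs(step) <= tol * max(1.0, abs(x)):
            return x
    raise RuntimeError("no convergence within max_iter steps")

p = lambda x: x ** 3 - x           # zeros at -1, 0, 1
dp = lambda x: 3 * x ** 2 - 1

starts = [-2.0, 0.1, 2.0]
zeros = sorted({round(newton(p, dp, s), 10) for s in starts})
print(zeros)
```

Each starting value lies in the region of attraction of a different zero; a starting value near $\pm 1/\sqrt{3}$, where $p'$ vanishes, would instead produce a very large first step.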

First example

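The square root of a number $a > 0$ is the positive zero of the function $f(x) = 1 - \frac{a}{x^2}$. This function has the derivative $f'(x) = \frac{2a}{x^3}$, so the Newton iteration follows the rule

$$x_{n+1} := N_f(x_n) = x_n - \frac{1 - a/x_n^2}{2a/x_n^3} = \frac{x_n}{2}\left(3 - \frac{x_n^2}{a}\right).$$

The advantage of this rule over Heron's root extraction (see below) is that it is division-free once the reciprocal of $a$ has been determined.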

| n | | | |
|---|---|---|---|
| 0 | | | |
| 1 | | | |
| 2 | | | |
| 3 | | | |
| 4 | | | |
| 5 | | | |
| 6 | | | |
| 7 | | | |
| 8 | | | |
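For $f(x) = 1 - a/x^2$ the Newton step simplifies to $x_{n+1} = \frac{x_n}{2}\left(3 - \frac{x_n^2}{a}\right)$, which needs only multiplications once $1/a$ is known. The sketch below assumes $a > 0$ and a starting value with $0 < x_0 < \sqrt{3a}$ (outside that range the iterates can become negative or stall at $0$); the function name `sqrt_division_free` and the fixed step count are illustrative, and it is not meant to reproduce the values of the table.

```python
import math

def sqrt_division_free(a, x0, steps=6):
    """Approximate sqrt(a) with x_{n+1} = x_n / 2 * (3 - x_n^2 / a).

    Assumes a > 0 and 0 < x0 < sqrt(3a). After 1/a is formed once,
    every step uses only multiplications and subtractions.
    """
    inv_a = 1.0 / a                      # the only division
    x = x0
    for _ in range(steps):
        x = 0.5 * x * (3.0 - x * x * inv_a)
    return x

root2 = sqrt_division_free(2.0, 1.5)
root5 = sqrt_division_free(5.0, 2.0)
print(root2, math.sqrt(2.0))
```

Avoiding divisions in the loop is the point of this formulation: on hardware where division is much slower than multiplication, only the single reciprocal is expensive.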

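If we consider the difference $x_{n+1} - \sqrt{a}$ to the limit in the $(n+1)$-th step, the difference in the $n$-th step can be split off twice by means of the binomial formulae:

$$x_{n+1} - \sqrt{a} = \frac{x_n}{2}\left(3 - \frac{x_n^2}{a}\right) - \sqrt{a} = -\frac{(x_n - \sqrt{a})^2\,(x_n + 2\sqrt{a})}{2a}.$$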

According to the inequality of arithmetic and geometric means,

Animation: Iteration with Newton's method

Convergence considerations

The Newton method is a so-called locally convergent method. Convergence of the sequence generated by the Newton iteration to a zero is therefore only guaranteed if the starting value, i.e. the 0-th member of the sequence, is already "sufficiently close" to the zero. If the starting value is too far away, the convergence behavior is undetermined: the sequence may diverge, it may oscillate (with finitely many points alternating), or it may converge to a different zero of the function under consideration.

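If the starting value $x_0$ is chosen such that Newton's method converges, the convergence is quadratic, i.e. of order 2, provided that the derivative does not vanish at the zero.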

However, if there is an interval $I = \left]a, b\right[$

Examples of non-convergence

- Oscillatory behavior results, among others, for the polynomial $f(x) := x^3 - 2x + 2$ with $f'(x) = 3x^2 - 2$. The point $x = 0$, with $f(0) = 2$ and $f'(0) = -2$, is mapped by the Newton operator to the point $N_f(0) = 0 - \frac{2}{-2} = 1$. The point $x = 1$, in turn, with $f(1) = 1$ and $f'(1) = 1$, is mapped to $N_f(1) = 1 - \frac{1}{1} = 0$. Newton iteration with one of these points as the initial value therefore yields a periodic sequence in which the two points alternate cyclically. This cycle is stable: it forms an attractor of the Newton iteration. This means that there are neighborhoods around both points such that starting points from these neighborhoods converge to the cycle, so that the subsequences with even and with odd index have one each of the points 0 and 1 as their limit.

- Divergence, or an arbitrarily large distance from the starting point, results for

with

and

. There exists a point

with

i.e.

One can verify that then

holds. This behavior is not stable: if the initial value is varied slightly, for example by rounding errors in a numerical computation, the Newton iteration moves further and further away from the ideal divergent sequence. Even if it eventually converges, the zero found will be very far from the initial value.
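The oscillation example can be checked numerically with $f(x) = x^3 - 2x + 2$, for which the Newton operator maps $0$ to $1$ and $1$ back to $0$. The sketch below (the helper `newton_orbit` is an illustrative name) runs the iteration from exactly $0$ and from the nearby value $0.01$; the second orbit illustrates the claim that the cycle attracts starting points from a neighborhood.

```python
def newton_orbit(f, df, x0, steps):
    """Return the Newton iterates x_0, x_1, ..., x_steps (no stopping test)."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x - f(x) / df(x))
    return xs

f = lambda x: x ** 3 - 2 * x + 2
df = lambda x: 3 * x ** 2 - 2

exact = newton_orbit(f, df, 0.0, 4)     # alternates between 0 and 1
nearby = newton_orbit(f, df, 0.01, 21)  # even-index terms -> 0, odd-index terms -> 1
print(exact)
print(nearby[-2:])
```

The attraction is very strong here because $N_f'(0) = f(0) f''(0)/f'(0)^2 = 0$, so the two-step map has derivative $0$ on the cycle.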

Local quadratic convergence

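Let $f$ be a twice continuously differentiable real function and $a$ a simple zero of $f$, so that $f'(a) \neq 0$. This means that the graph of the function crosses the x-axis transversally, i.e. without touching it. Let $x$ be a point close to $a$. Taylor's formula of second order with Lagrange remainder gives

$$0 = f(a) = f(x) + f'(x)\,(a - x) + \tfrac{1}{2} f''(\xi)\,(a - x)^2$$

with some $\xi$ between $x$ and $a$.

It is now rearranged so that the Newton operator appears on the right-hand side,

$$N_f(x) - a = x - \frac{f(x)}{f'(x)} - a = \frac{f''(\xi)}{2 f'(x)}\,(x - a)^2.$$

Let $I$ be an interval around $a$ on which $f'$ has no zero, and let $m_1 = \min_{x \in I} |f'(x)|$ and $M_2 = \max_{x \in I} |f''(x)|$ be bounds on the derivatives of $f$. Then for all $x \in I$

$$|N_f(x) - a| \le \frac{M_2}{2 m_1}\,|x - a|^2.$$

Let $K := \frac{M_2}{2 m_1}$ be the constant factor. In each step $n$ of the Newton iteration, the quantity $d_n := K\,|x_n - a|$ is at most the square of the same quantity in the preceding step, $d_n \le d_{n-1}^2$. By induction, $d_n \le d_0^{2^n}$.

Thus, if the estimate $|x_0 - a| < \frac{1}{K}$ can be guaranteed for the starting point of the iteration, for instance because the length of the interval $I$ is less than $\frac{1}{K}$, then the sequence $(x_n)$ of the Newton iteration converges to the zero $a$, since the sequence $(d_n)$, and hence $(|x_n - a|)$, tends to zero by the above estimate.

The convergence rate following from these estimates is called quadratic: the (logarithmic) precision, i.e. the number of valid digits, doubles in each step. The distance $|x_n - a|$ to the zero is often estimated linearly in $|x_0 - a|$; for example,

$$|x_n - a| \le 2^{-2^n + 1}\,|x_0 - a|$$

if the length of the interval $I$ is less than $\frac{1}{2K}$. This gives an estimate of the valid digits in the binary system. Likewise,

$$|x_n - a| \le 10^{-2^n + 1}\,|x_0 - a|$$

if the length of the interval $I$ is less than $\frac{1}{10K}$, i.e., close enough to the zero, the number of valid decimal places doubles in each step.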
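The quadratic error law can be observed directly. For the hypothetical example $f(x) = x^2 - 2$ with zero $a = \sqrt{2}$, one has exactly $N_f(x) - a = \frac{(x - a)^2}{2x}$, so the ratio $|x_{n+1} - a| / |x_n - a|^2$ should approach $\frac{f''(a)}{2 f'(a)} = \frac{1}{2\sqrt{2}} \approx 0.354$. The helper name `newton_with_errors` is illustrative.

```python
import math

def newton_with_errors(f, df, x0, a, steps):
    """Run Newton's method and record the iterates and the errors |x_n - a|."""
    xs = [x0]
    for _ in range(steps):
        x = xs[-1]
        xs.append(x - f(x) / df(x))
    errs = [abs(x - a) for x in xs]
    return xs, errs

a = math.sqrt(2.0)
xs, errs = newton_with_errors(lambda x: x * x - 2.0, lambda x: 2.0 * x, 1.5, a, 3)

for n in range(len(errs) - 1):
    # For quadratic convergence this ratio stays bounded (here it tends to 1/(2*sqrt(2)))
    print(n, errs[n], errs[n + 1] / errs[n] ** 2)
```

The printed errors show the exponent roughly doubling from step to step, which is the doubling of valid digits described above; after three steps the error is already near the limit of double precision.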

Local quadratic convergence with multiple zeros by modification

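In the case that $f$ has a multiple zero of finite order at $x = a$, the speed of convergence can also be estimated, and quadratic convergence can be restored by a slight modification.

If $f$ has a zero of multiplicity $k$ at $x = a$, then $f$ can be written as $f(x) = (x - a)^k\,g(x)$ with $g(a) \neq 0$.

Then by the product rule

$$f'(x) = k\,(x - a)^{k-1}\,g(x) + (x - a)^k\,g'(x).$$

If we now insert this into the iteration, we get

$$x_{\text{new}} = x - \frac{(x - a)^k\,g(x)}{k\,(x - a)^{k-1}\,g(x) + (x - a)^k\,g'(x)} = x - (x - a)\,\frac{g(x)}{k\,g(x) + (x - a)\,g'(x)}$$

and from this, after subtracting $a$ on both sides,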

$$(x_{\text{new}} - a) = (x - a) - (x - a)\,\frac{g(x)}{k\,g(x) + (x - a)\,g'(x)} = (x - a)\left[1 - \frac{g(x)}{k\,g(x) + (x - a)\,g'(x)}\right]$$

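Now, as $x \to a$, the expression $\frac{g(x)}{k\,g(x) + (x - a)\,g'(x)}$ tends to $\frac{1}{k}$, because $g(a) \neq 0$. Near $a$ one therefore has $x_{\text{new}} - a \approx \left(1 - \frac{1}{k}\right)(x - a)$, so for $k > 1$ the method converges only linearly, with rate $1 - \frac{1}{k}$.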

Therefore, if the multiplicity of the zero is not known for other reasons, it can be estimated from the convergence behavior, and the procedure can then be optimized as described next.

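For a zero of multiplicity $k$, Newton's method is therefore modified to

$$x_{\text{new}} = x - k\,\frac{f(x)}{f'(x)}.$$

Thus

$$\begin{aligned}(x_{\text{new}} - a) &= (x - a)\left[1 - \frac{k\,g(x)}{k\,g(x) + (x - a)\,g'(x)}\right]\\ &= (x - a)\,\frac{(x - a)\,g'(x)}{k\,g(x) + (x - a)\,g'(x)}\\ &= (x - a)^2\,\frac{g'(x)}{k\,g(x) + (x - a)\,g'(x)}.\end{aligned}$$

Now if $x \to a$, the error is squared in each step, where the right factor converges to the fixed value $\frac{g'(a)}{k\,g(a)}$ because of $k\,g(x) + (x - a)\,g'(x) \to k\,g(a) \neq 0$. The modified method therefore converges quadratically again.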
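The following sketch compares the plain and the modified iteration on the hypothetical function $f(x) = (x - 1)^2 (x + 2)$, which has a double zero at $a = 1$ (so $k = 2$ and $g(x) = x + 2$); it is not the example function used in the text. It also estimates the multiplicity from the observed linear rate $1 - 1/k$ of the plain method. The helper name `newton_errors` is illustrative.

```python
def newton_errors(f, df, x0, a, k=1, steps=10):
    """Return |x_n - a| for the iteration x_new = x - k * f(x) / f'(x).

    k = 1 is the plain Newton method; k > 1 is the modification for a zero
    of multiplicity k. Stops early if x lands exactly on a zero of f.
    """
    x = x0
    errors = [abs(x - a)]
    for _ in range(steps):
        fx = f(x)
        if fx == 0.0:            # exact zero reached; at a multiple zero f'(x) vanishes too
            break
        x = x - k * fx / df(x)
        errors.append(abs(x - a))
    return errors

# Hypothetical test function with a double zero at a = 1
f = lambda x: (x - 1.0) ** 2 * (x + 2.0)
df = lambda x: 3.0 * (x - 1.0) * (x + 1.0)   # f'(x) = 3 (x - 1)(x + 1)

plain = newton_errors(f, df, 2.0, 1.0, k=1, steps=30)
modified = newton_errors(f, df, 2.0, 1.0, k=2, steps=6)

# The plain method contracts the error by about 1 - 1/k per step; invert that to estimate k
rate = plain[21] / plain[20]
k_est = 1.0 / (1.0 - rate)
print(rate, k_est, modified)
```

The plain iteration gains only about one binary digit per step, while the modified one reaches machine precision within a handful of steps.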

An example illustrates the convergence behavior clearly. The function

| n | | Error | | Error | | Error |
|---|---|---|---|---|---|---|
| 0 | | | | | | |
| 1 | | | | | | |
| 2 | | | | | | |
| 3 | | | | | | |
| 4 | | | | | | |
| 5 | | | | | | |
| 6 | | | | | | |
| 7 | | | | | | |
| 8 | | | | | | |
| 9 | | | | | | |
| 10 | | | | | | |

The Newton method for complex numbers

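For complex numbers $z = x + iy$, the formula is written correspondingly:

$$z_{n+1} = z_n - \frac{f(z_n)}{f'(z_n)} \qquad (1)$$

with the holomorphic function $f(z) = u(x, y) + i\,v(x, y)$.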

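The complex derivative at the point $z$ does not depend on the direction in which it is taken; in particular,

$$f'(z) = \frac{\partial f}{\partial x} = -i\,\frac{\partial f}{\partial y}, \quad\text{i.e.}\quad f'(z) = \frac{\partial u}{\partial x} + i\,\frac{\partial v}{\partial x} = \frac{\partial v}{\partial y} - i\,\frac{\partial u}{\partial y}.$$

Therefore the Cauchy-Riemann differential equations hold:

$$\frac{\partial u}{\partial x} = \frac{\partial v}{\partial y}, \qquad \frac{\partial u}{\partial y} = -\frac{\partial v}{\partial x}. \qquad (2)$$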

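The complex equation (1) can be decomposed into real and imaginary parts. Using $f'(z) = \frac{\partial u}{\partial x} + i\,\frac{\partial v}{\partial x}$ and writing $u_x = \frac{\partial u}{\partial x}$, $v_x = \frac{\partial v}{\partial x}$, all evaluated at $(x_n, y_n)$:

$$x_{n+1} = x_n - \frac{u\,u_x + v\,v_x}{u_x^2 + v_x^2}, \qquad y_{n+1} = y_n - \frac{v\,u_x - u\,v_x}{u_x^2 + v_x^2}.$$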

With the help of (2) it follows

The geometric meaning of this equation is seen as follows. One determines the minimum of the magnitude

One introduces the term

This is identical to formula (1).
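The decomposition into real and imaginary parts can be checked numerically. The sketch below uses the hypothetical example $f(z) = z^2 + 1$, for which $u = x^2 - y^2 + 1$, $v = 2xy$, $u_x = 2x$ and $v_x = 2y$, and runs the complex iteration (1) side by side with the real iteration obtained from $\frac{f}{f'} = \frac{(u + iv)(u_x - i\,v_x)}{u_x^2 + v_x^2}$. Starting from $0.5 + 0.5i$, both should converge to the zero $i$. The function names are illustrative.

```python
def complex_step(z):
    """One step of iteration (1) for f(z) = z^2 + 1, with f'(z) = 2z."""
    return z - (z * z + 1) / (2 * z)

def real_imag_step(x, y):
    """The same step written with real and imaginary parts only."""
    u, v = x * x - y * y + 1.0, 2.0 * x * y     # f = u + i v
    u_x, v_x = 2.0 * x, 2.0 * y                  # f'(z) = u_x + i v_x
    den = u_x * u_x + v_x * v_x
    return x - (u * u_x + v * v_x) / den, y - (v * u_x - u * v_x) / den

z = 0.5 + 0.5j
x, y = 0.5, 0.5
for _ in range(8):
    z = complex_step(z)
    x, y = real_imag_step(x, y)
print(z, complex(x, y))
```

For $z^2 + 1$, starting values in the upper half-plane converge to $i$ and those in the lower half-plane to $-i$; for most other polynomials the basins of attraction are far less regular.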

Comments

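- The local convergence proof can be carried out in the same way in the multidimensional case, but it is then technically somewhat more involved, since tensors of order two and three are needed for the first and second derivatives, respectively. Essentially, the constant $K$ becomes

$$K = \tfrac{1}{2}\,\sup_{x \in I}\left\|f'(x)^{-1}\right\| \cdot \sup_{x \in I}\left\|f''(x)\right\|,$$

where the absolute values of the one-dimensional case are replaced with appropriate induced operator norms.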

- The local convergence proof assumes that an interval containing a zero is known, but it offers no way to check this quickly. A proof of convergence that also provides a criterion for this was first given by Leonid Kantorovich and is known as Kantorovich's theorem.

- To find a suitable starting point, one sometimes uses other ("coarser") methods. For example, one can determine an approximate solution with the gradient method and then refine it with the Newton method.

- If no suitable starting point is known, one can use a homotopy to deform the function $f$, of which a zero is sought, into a simpler function of which at least one zero is known. One then runs through the deformation backwards in the form of a finite sequence of functions that differ only "slightly" from one another. A zero of the first function in this sequence is known. As the starting value of the Newton iteration for the current function of the sequence, one uses the approximation of a zero of the preceding function. For the exact procedure see homotopy method.

An example is the "flooding homotopy": with an arbitrary $x_0$, one forms the initial function $f(x) - f(x_0)$, which has the known zero $x_0$.
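A minimal sketch of the flooding homotopy, assuming the water level is lowered in equal steps $h_n = (1 - n/N)\,f(x_0)$, $n = 1, \dots, N$, and that one Newton solve of $f(x) - h_n = 0$ per level, warm-started at the previous zero, is enough. The names `flooding_homotopy` and `levels` (for $N$) are illustrative, and $f = \arctan$ is chosen as a test function because plain Newton iteration for it diverges from $x_0 = 3$.

```python
import math

def newton(f, df, x, tol=1e-14, max_iter=50):
    """Plain Newton iteration with a relative step-size stopping test."""
    for _ in range(max_iter):
        step = f(x) / df(x)
        x -= step
        if abs(step) <= tol * max(1.0, abs(x)):
            break
    return x

def flooding_homotopy(f, df, x0, levels=20):
    """Lower the 'water level' from f(x0) to 0 in equal steps (assumed schedule)."""
    f0 = f(x0)
    xi = x0                              # x0 is an exact zero of f(x) - f(x0)
    for n in range(1, levels + 1):
        h = (1.0 - n / levels) * f0      # h_n; the last level is exactly 0
        # warm start from the zero found for the previous level
        xi = newton(lambda x: f(x) - h, df, xi)
    return xi

f = math.atan
df = lambda x: 1.0 / (1.0 + x * x)

xi = flooding_homotopy(f, df, 3.0)

# For comparison: plain Newton from the same starting value runs away
x_plain = 3.0
for _ in range(3):
    x_plain -= f(x_plain) / df(x_plain)
print(xi, x_plain)
```

The number of levels is a trade-off: fewer levels are cheaper, but each warm start is then further from the next zero, and the Newton solve at some level may fail to converge.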

Newton's approximation method.

Newton's method for the polynomial

Dynamics of Newton's method for the function

Questions and Answers

Q: What is Newton's method?

A: Newton's method is an algorithm for finding the real zeros of a function. It uses the derivative of the function to calculate its roots, and requires an initial guess value for the location of the zero.

Q: Who developed this method?

A: The method was developed by Sir Isaac Newton and Joseph Raphson, hence it is sometimes called the Newton–Raphson method.

Q: How does this algorithm work?

A: This algorithm works by repeatedly applying a formula that takes the current guess $x_n$ and calculates a new guess $x_{n+1} = x_n - f(x_n)/f'(x_n)$. If the initial guess is close enough to a zero, repeating this process makes the guesses approach that zero.

Q: What is required to use this algorithm?

A: To use this algorithm, you need an initial "guess value" for the location of the zero, and you must be able to evaluate the derivative of the given function.

Q: How can we explain Newton's Method graphically?

A: Newton's method can be explained graphically using the intersections of tangent lines with the x-axis. First, the line tangent to $f$ at $x_n$ is calculated. Next, the intersection of this tangent line with the x-axis is found, and its x-coordinate is recorded as the next guess, $x_{n+1}$.

Q: Is there any limitation when using Newton's Method?

A: Yes. If the initial guess is too far away from the actual root, the method may take longer or even fail to converge, because the iterates oscillate or diverge; it may also converge to a different root.

