Linear map

A linear mapping (also called a linear transformation or vector space homomorphism) is an important type of mapping between two vector spaces over the same field in linear algebra. For a linear mapping, it makes no difference whether one first adds two vectors and then maps their sum, or first maps the vectors and then adds their images. The same applies to multiplication by a scalar from the base field.

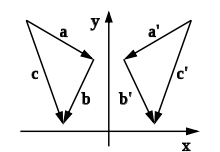

The illustrated example of a reflection across the y-axis shows this: the image of the sum of two vectors is the same as the sum of their images.

A linear mapping is therefore said to be compatible with the operations of vector addition and scalar multiplication. Thus, a linear mapping is a homomorphism (structure-preserving mapping) between vector spaces.

In functional analysis, when considering infinite-dimensional vector spaces carrying a topology, one usually speaks of linear operators instead of linear mappings. Formally, the terms are synonymous. For infinite-dimensional vector spaces, however, the question of continuity is significant, whereas linear mappings between finite-dimensional real vector spaces (each with the Euclidean norm) or, more generally, between finite-dimensional Hausdorff topological vector spaces are always continuous.

Reflection across an axis as an example of a linear mapping

Definition

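Let $V$ and $W$ be vector spaces over a common field $K$. A mapping $f\colon V \to W$ is called a linear mapping if, for all $x, y \in V$ and $a \in K$, the following conditions hold:

$f$ is homogeneous:

$$f(ax) = a f(x)$$

$f$ is additive:

$$f(x + y) = f(x) + f(y)$$

The two conditions above can also be combined:

$$f(ax + y) = a f(x) + f(y)$$

For $y = 0_V$ this becomes the condition for homogeneity, and for $a = 1_K$ it becomes the condition for additivity.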

Explanation

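A mapping is linear if it is compatible with the vector space structure. That is, linear mappings are compatible with both the addition and the scalar multiplication of their domain and codomain. Compatibility with addition means that the linear mapping $f\colon V \to W$ preserves sums. If $v_3 = v_1 + v_2$ is a sum in the domain with $v_1, v_2, v_3 \in V$, then $f(v_3) = f(v_1) + f(v_2)$ holds, so the sum is preserved in the codomain:

$$v_3 = v_1 + v_2 \implies f(v_3) = f(v_1) + f(v_2)$$

This implication can be shortened by substituting the premise $v_3 = v_1 + v_2$ into the conclusion, which gives the requirement $f(v_1 + v_2) = f(v_1) + f(v_2)$. Compatibility with scalar multiplication can be described analogously. It holds if, from $\tilde{v} = \lambda v$ with a scalar $\lambda$ from the base field and $v, \tilde{v} \in V$, it follows that $f(\tilde{v}) = \lambda f(v)$ in the codomain:

$$\tilde{v} = \lambda v \implies f(\tilde{v}) = \lambda f(v)$$

After substituting the premise $\tilde{v} = \lambda v$ into the conclusion $f(\tilde{v}) = \lambda f(v)$, one obtains the requirement $f(\lambda v) = \lambda f(v)$.

- Visualization of compatibility with vector addition: each addition triangle given by $v_1$, $v_2$ and $v_3 = v_1 + v_2$ is preserved by a linear mapping $f$. The vectors $f(v_1)$, $f(v_2)$ and $f(v_1 + v_2)$ also form an addition triangle, and $f(v_1 + v_2) = f(v_1) + f(v_2)$.
- For mappings that are not compatible with addition, there are vectors $v_1$, $v_2$ and $v_3 = v_1 + v_2$ such that $f(v_1)$, $f(v_2)$ and $f(v_1 + v_2)$ do not form an addition triangle, because $f(v_1 + v_2) \neq f(v_1) + f(v_2)$. Such a mapping is not linear.
- Visualization of compatibility with scalar multiplication: any scaling $\lambda v$ is preserved by a linear mapping, and $f(\lambda v) = \lambda f(v)$.
- If a mapping is not compatible with scalar multiplication, then there is a scalar $\lambda$ and a vector $v$ such that the scaling $\lambda v$ is not mapped to the scaling $\lambda f(v)$. Such a mapping is not linear.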
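The two compatibility conditions can be tried out on concrete vectors. The following is a minimal sketch, not a general linearity test: the helper names (`add`, `scale`, `reflect`, `shift`, `is_additive_on`, `is_homogeneous_on`) and the sample vectors are assumptions chosen for illustration. It compares a reflection across the y-axis with a translation, which is not linear.

```python
# Hypothetical helpers for illustration; vectors are plain tuples of integers.

def add(u, v):
    """Componentwise vector addition."""
    return tuple(a + b for a, b in zip(u, v))

def scale(lam, v):
    """Multiplication of the vector v by the scalar lam."""
    return tuple(lam * a for a in v)

def reflect(v):
    """Reflection across the y-axis: (x, y) -> (-x, y)."""
    x, y = v
    return (-x, y)

def shift(v):
    """Translation (x, y) -> (x + 1, y); it does not fix the zero vector."""
    x, y = v
    return (x + 1, y)

def is_additive_on(f, v1, v2):
    """Check f(v1 + v2) == f(v1) + f(v2) for this one pair of vectors."""
    return f(add(v1, v2)) == add(f(v1), f(v2))

def is_homogeneous_on(f, lam, v):
    """Check f(lam * v) == lam * f(v) for this one scalar and vector."""
    return f(scale(lam, v)) == scale(lam, f(v))

v1, v2, lam = (1, 2), (3, -1), 4
print(is_additive_on(reflect, v1, v2), is_homogeneous_on(reflect, lam, v1))  # True True
print(is_additive_on(shift, v1, v2), is_homogeneous_on(shift, lam, v1))      # False False
```

A single failing check is enough to show that a mapping is not linear. Passing checks on sample vectors only illustrates the conditions and does not prove linearity.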

Examples

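- For $V = W = \mathbb{R}$, any linear mapping has the form $f(x) = mx$ with $m \in \mathbb{R}$.
- Let $V = \mathbb{R}^n$ and $W = \mathbb{R}^m$. Then, for each $m \times n$ matrix $A$, matrix multiplication defines a linear mapping $f\colon \mathbb{R}^n \to \mathbb{R}^m$ by $f(x) = Ax$. Any linear mapping from $\mathbb{R}^n$ to $\mathbb{R}^m$ can be represented in this way.
- If $I \subset \mathbb{R}$ is an open interval, $V = C^1(I, \mathbb{R})$ the $\mathbb{R}$-vector space of continuously differentiable functions on $I$, and $W = C^0(I, \mathbb{R})$ the $\mathbb{R}$-vector space of continuous functions on $I$, then the mapping $D\colon C^1(I, \mathbb{R}) \to C^0(I, \mathbb{R})$, $f \mapsto f'$, which assigns to each function $f \in C^1(I, \mathbb{R})$ its derivative, is linear. The same holds for other linear differential operators.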

- A stretch along one coordinate axis, for example $f(x, y) = (2x, y)$, is a linear mapping.
- This mapping is additive: it does not matter whether one first adds vectors and then maps them, or first maps the vectors and then adds them: $f(v_1 + v_2) = f(v_1) + f(v_2)$.
- This mapping is homogeneous: it does not matter whether one first scales a vector and then maps it, or first maps the vector and then scales it: $f(\lambda v) = \lambda f(v)$.
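The linearity of the derivative can be illustrated on polynomials, which are continuously differentiable on any open interval. The sketch below assumes a polynomial is stored as a coefficient list `[c0, c1, c2, ...]`. The function names and sample polynomials are hypothetical and chosen only for this illustration.

```python
def derivative(p):
    """Derivative of the polynomial c0 + c1*x + c2*x^2 + ... given as [c0, c1, c2, ...]."""
    return [k * c for k, c in enumerate(p)][1:]

def poly_add(p, q):
    """Sum of two polynomials; the shorter coefficient list is padded with zeros."""
    n = max(len(p), len(q))
    p = p + [0] * (n - len(p))
    q = q + [0] * (n - len(q))
    return [a + b for a, b in zip(p, q)]

def poly_scale(lam, p):
    """Product of the polynomial p with the scalar lam."""
    return [lam * c for c in p]

p = [1, 0, 3]      # 1 + 3x^2
q = [0, 2, 0, 5]   # 2x + 5x^3
lam = 7

# Additivity: (p + q)' == p' + q'
print(derivative(poly_add(p, q)) == poly_add(derivative(p), derivative(q)))    # True
# Homogeneity: (lam * p)' == lam * p'
print(derivative(poly_scale(lam, p)) == poly_scale(lam, derivative(p)))        # True
```

Integer coefficients keep the comparison exact. With floating-point coefficients an approximate comparison would be more appropriate.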

Image and kernel

Two sets that are important when considering linear mappings are the image and the kernel of a linear mapping $f\colon V \to W$.

- The image $\operatorname{im}(f)$ of the mapping is the set of image vectors under $f$, that is, the set of all $f(v)$ with $v$ from $V$. The image is therefore also denoted by $f(V)$. The image is a subspace of $W$.
- The kernel $\ker(f)$ of the mapping is the set of vectors from $V$ that are mapped by $f$ to the zero vector of $W$. It is a subspace of $V$. The mapping $f$ is injective if and only if the kernel contains only the zero vector.

Properties

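- A linear mapping $f$ between the vector spaces $V$ and $W$ maps the zero vector of $V$ to the zero vector of $W$, that is, $f(0_V) = 0_W$, because $f(0_V) = f(0 \cdot 0_V) = 0 \cdot f(0_V) = 0_W$.
- A relation between the kernel and the image of a linear mapping $f\colon V \to W$ is described by the homomorphism theorem: the quotient space $V / \ker(f)$ is isomorphic to the image $\operatorname{im}(f)$.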

Linear mappings between finite-dimensional vector spaces

Basis

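A linear mapping between finite-dimensional vector spaces is uniquely determined by the images of the vectors of a basis. If the vectors $b_1, \dots, b_n$ form a basis of the vector space $V$ and $w_1, \dots, w_n$ are vectors in $W$, then there is exactly one linear mapping $f\colon V \to W$ that maps $b_1$ to $w_1$, $b_2$ to $w_2$, ..., $b_n$ to $w_n$. Any vector $v$ from $V$ can be written uniquely as a linear combination of the basis vectors:

$$v = \sum_{j=1}^{n} v_j b_j$$

Here $v_j$, $j = 1, \dots, n$, are the coordinates of the vector $v$ with respect to the basis. Its image $f(v)$ is given by

$$f(v) = \sum_{j=1}^{n} v_j f(b_j) = \sum_{j=1}^{n} v_j w_j$$

The mapping $f$ is injective if and only if the image vectors $w_1, \dots, w_n$ of the basis are linearly independent. It is surjective if and only if $w_1, \dots, w_n$ span the target space $W$.

If one assigns to each element $b_j$ of a basis of $V$ an arbitrary vector $w_j$ from $W$, this assignment can be extended uniquely to a linear mapping $f\colon V \to W$ using the formula above.

Representing the image vectors $w_j$ with respect to a basis of $W$ leads to the matrix representation of the linear mapping.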

Mapping matrix

→ Main article: Mapping matrix

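If $V$ and $W$ are finite-dimensional with $\dim V = n$ and $\dim W = m$, and bases $B = \{b_1, \dots, b_n\}$ of $V$ and $B' = \{b'_1, \dots, b'_m\}$ of $W$ are given, then any linear mapping $f\colon V \to W$ can be represented by an $m \times n$ matrix $M^{B}_{B'}(f)$. This matrix is obtained as follows: for each basis vector $b_j$ from $B$, the image vector $f(b_j)$ can be written as a linear combination of the basis vectors $b'_1, \dots, b'_m$:

$$f(b_j) = a_{1j} b'_1 + a_{2j} b'_2 + \dots + a_{mj} b'_m$$

The $a_{ij}$, $i = 1, \dots, m$, $j = 1, \dots, n$, form the entries of the matrix $M^{B}_{B'}(f)$:

$$M^{B}_{B'}(f) = \begin{pmatrix} a_{11} & \cdots & a_{1j} & \cdots & a_{1n} \\ \vdots & & \vdots & & \vdots \\ a_{m1} & \cdots & a_{mj} & \cdots & a_{mn} \end{pmatrix}$$

Thus, in the $j$-th column are the coordinates of $f(b_j)$ with respect to the basis $B'$.

Using this matrix, one can compute the image vector $f(v)$ of any vector $v = v_1 b_1 + \dots + v_n b_n \in V$:

$$f(v) = \sum_{i=1}^{m} \Bigl( \sum_{j=1}^{n} a_{ij} v_j \Bigr) b'_i$$

Thus, for the coordinates $w_1, \dots, w_m$ of $f(v)$ with respect to $B'$:

$$w_i = \sum_{j=1}^{n} a_{ij} v_j$$

This can be expressed using matrix multiplication:

$$\begin{pmatrix} w_1 \\ \vdots \\ w_m \end{pmatrix} = \begin{pmatrix} a_{11} & \cdots & a_{1n} \\ \vdots & & \vdots \\ a_{m1} & \cdots & a_{mn} \end{pmatrix} \begin{pmatrix} v_1 \\ \vdots \\ v_n \end{pmatrix}$$

The matrix $M^{B}_{B'}(f)$ is called the mapping matrix or representation matrix of $f$. An alternative notation is ${}_{B'}[f]_{B}$.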
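The column-by-column construction of the mapping matrix can be sketched in code. The example below is an illustration under stated assumptions: it uses the standard bases of $\mathbb{R}^3$ and $\mathbb{R}^2$, an arbitrarily chosen list `images` holding the coordinate vectors of $f(b_1), f(b_2), f(b_3)$, and hypothetical helper names.

```python
def mapping_matrix(images):
    """Build the m x n matrix whose j-th column holds the coordinates of f(b_j)."""
    m = len(images[0])
    return [[img[i] for img in images] for i in range(m)]

def apply(matrix, v):
    """Matrix-vector product: coordinates of f(v) from the coordinates of v."""
    return [sum(a * x for a, x in zip(row, v)) for row in matrix]

def linear_combination(coeffs, vectors):
    """Compute sum_j coeffs[j] * vectors[j] componentwise."""
    m = len(vectors[0])
    return [sum(c * vec[i] for c, vec in zip(coeffs, vectors)) for i in range(m)]

# Assumed example: f maps b1 -> (1, 0), b2 -> (2, 1), b3 -> (0, -1).
images = [(1, 0), (2, 1), (0, -1)]
A = mapping_matrix(images)
print(A)                               # [[1, 2, 0], [0, 1, -1]]

v = [3, 1, 2]                          # coordinates of v with respect to B
print(apply(A, v))                     # [5, -1]
print(linear_combination(v, images))   # [5, -1], the same vector
```

Both computations agree because the matrix product is the linear combination of the columns of the matrix with the coordinates of $v$ as coefficients.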

Dimension formula

→ Main article: Rank–nullity theorem

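Image and kernel are related by the dimension theorem. This states that the dimension of $V$ is equal to the sum of the dimensions of the image and of the kernel: $\dim V = \dim \ker(f) + \dim \operatorname{im}(f)$.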
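The dimension formula can be checked on a concrete matrix. The following sketch assumes the mapping is given by a matrix with rational entries with respect to the standard bases. It uses Gaussian elimination over exact fractions to count pivot columns (the dimension of the image) and to build a basis of the kernel. The function names and the sample matrix are hypothetical.

```python
from fractions import Fraction

def rref(matrix):
    """Return (reduced row echelon form, list of pivot columns) using exact arithmetic."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    pivots = []
    r = 0
    for c in range(len(rows[0])):
        # Look for a row at or below r with a nonzero entry in column c.
        pivot_row = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot_row is None:
            continue
        rows[r], rows[pivot_row] = rows[pivot_row], rows[r]
        pv = rows[r][c]
        rows[r] = [x / pv for x in rows[r]]
        # Clear column c in every other row.
        for i in range(len(rows)):
            if i != r and rows[i][c] != 0:
                factor = rows[i][c]
                rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        pivots.append(c)
        r += 1
        if r == len(rows):
            break
    return rows, pivots

def kernel_basis(matrix):
    """One basis vector of the kernel per free (non-pivot) column."""
    reduced, pivots = rref(matrix)
    n = len(matrix[0])
    basis = []
    for fc in (c for c in range(n) if c not in pivots):
        vec = [Fraction(0)] * n
        vec[fc] = Fraction(1)
        for row_idx, pc in enumerate(pivots):
            vec[pc] = -reduced[row_idx][fc]
        basis.append(vec)
    return basis

A = [[1, 2, 3],
     [2, 4, 6],
     [1, 0, 1]]

_, pivots = rref(A)
kernel = kernel_basis(A)
dim_image, dim_kernel, dim_V = len(pivots), len(kernel), len(A[0])

# Every kernel basis vector is mapped to the zero vector.
for k in kernel:
    assert all(sum(a * x for a, x in zip(row, k)) == 0 for row in A)

print(dim_V, dim_kernel, dim_image)   # 3 1 2
```

For this sample matrix the kernel is spanned by $(-1, -1, 1)$ and the image has dimension 2, so $3 = 1 + 2$.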

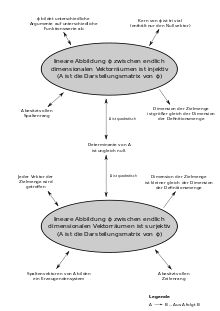

Summary of the properties of injective and surjective linear mappings

Linear mappings between infinite-dimensional vector spaces

→ Main article: Linear operator

Especially in functional analysis, one considers linear mappings between infinite-dimensional vector spaces. In this context, linear mappings are usually called linear operators. The vector spaces considered usually carry the additional structure of a complete normed vector space. Such vector spaces are called Banach spaces. In contrast to the finite-dimensional case, it is not sufficient to study linear operators only on a basis. According to the Baire category theorem, a basis of an infinite-dimensional Banach space has uncountably many elements, and the existence of such a basis cannot be established constructively but only by using the axiom of choice. Therefore, one uses a different notion of basis, such as orthonormal bases or, more generally, Schauder bases. With these, certain operators such as Hilbert-Schmidt operators can be represented by "infinite matrices", in which case infinite linear combinations must also be allowed.

Special linear mappings

Monomorphism

A monomorphism between vector spaces is a linear mapping $f\colon V \to W$ that is injective.

Epimorphism

An epimorphism between vector spaces is a linear mapping $f\colon V \to W$ that is surjective.

Isomorphism

An isomorphism between vector spaces is a linear mapping $f\colon V \to W$ that is bijective.

Endomorphism

An endomorphism between vector spaces is a linear mapping where the spaces $V$ and $W$ are equal: $f\colon V \to V$.

Automorphism

An automorphism between vector spaces is a bijective linear mapping where the spaces $V$ and $W$ are equal. It is thus both an isomorphism and an endomorphism.

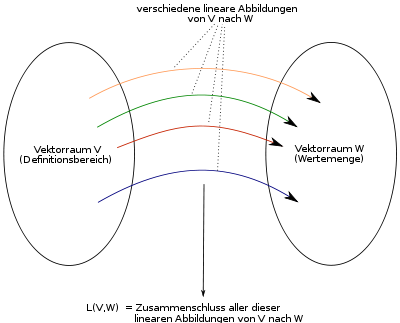

Vector space of linear mappings

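The set $L(V, W)$ of linear mappings from a $K$-vector space $V$ into a $K$-vector space $W$ is a vector space over $K$, more precisely a subspace of the $K$-vector space of all mappings from $V$ to $W$. This means that the sum of two linear mappings $f$ and $g$, defined pointwise by

$$(f + g)(x) = f(x) + g(x),$$

is again a linear mapping and that the product

$$(\lambda f)(x) = \lambda f(x)$$

of a linear mapping with a scalar $\lambda \in K$ is also a linear mapping.

If $V$ has dimension $n$ and $W$ has dimension $m$, and a basis is given in each of $V$ and $W$, then the mapping that assigns to each $f \in L(V, W)$ its mapping matrix with respect to these bases is an isomorphism from $L(V, W)$ into the matrix space $K^{m \times n}$. The vector space $L(V, W)$ thus has dimension $m \cdot n$.

If we consider the set of linear self-mappings of a vector space, i.e. the special case $V = W$, these form not only a vector space but, with composition of mappings as multiplication, an associative algebra, denoted $\operatorname{End}(V)$ for short.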

Formation of the vector space L(V,W)
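The vector space operations on $L(V, W)$ correspond to entrywise operations on matrices. The sketch below assumes two mappings from $\mathbb{R}^2$ to $\mathbb{R}^3$ given by arbitrarily chosen $3 \times 2$ matrices with respect to the standard bases. All names and values are hypothetical.

```python
def apply(matrix, v):
    """Matrix-vector product."""
    return [sum(a * x for a, x in zip(row, v)) for row in matrix]

def mat_add(A, B):
    """Entrywise sum of two matrices of the same shape."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def mat_scale(lam, A):
    """Entrywise product of a matrix with the scalar lam."""
    return [[lam * a for a in row] for row in A]

A = [[1, 2], [0, 1], [3, 0]]    # assumed matrix of f
B = [[0, 1], [1, 1], [-1, 2]]   # assumed matrix of g
v, lam = [2, 5], 3

# (f + g)(v) = f(v) + g(v)
print(apply(mat_add(A, B), v))                          # [17, 12, 14]
print([a + b for a, b in zip(apply(A, v), apply(B, v))])  # [17, 12, 14]

# (lam * f)(v) = lam * f(v)
print(apply(mat_scale(lam, A), v))                      # [36, 15, 18]
print([lam * a for a in apply(A, v)])                   # [36, 15, 18]
```

Each $3 \times 2$ matrix has $3 \cdot 2 = 6$ independent entries, which matches the dimension $m \cdot n$ of $L(V, W)$ for $n = 2$ and $m = 3$.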

Generalization

A linear mapping is a special case of an affine mapping.

Replacing the field by a ring in the definition of a linear mapping between vector spaces, one obtains a module homomorphism.
