Linear independence

In linear algebra, a family of vectors of a vector space is called linearly independent if the zero vector can only be generated by a linear combination of the vectors in which all coefficients of the combination are set to the value zero. Equivalently (unless the family consists only of the zero vector), none of the vectors can be represented as a linear combination of the other vectors in the family.

Otherwise they are called linearly dependent. In this case, at least one of the vectors (but not necessarily each) can be represented as a linear combination of the others.

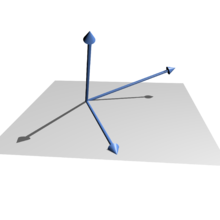

For example, in the three-dimensional Euclidean space

Linearly independent vectors in ℝ³

Linearly dependent vectors in a plane in ℝ³

Definition

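Let $V$ be a vector space over the field $K$ and $I$ an index set. A family $(v_i)_{i \in I}$ of vectors in $V$ indexed by $I$ is called linearly independent if every finite subfamily of it is linearly independent.

A finite family $v_1, v_2, \dots, v_n$ of vectors from $V$ is called linearly independent if the only representation of the zero vector as a linear combination

$$a_1 v_1 + a_2 v_2 + \dots + a_n v_n = 0$$

with coefficients $a_1, a_2, \dots, a_n$ from the field $K$ is the one in which all coefficients $a_i$ are equal to zero. If the zero vector can also be generated non-trivially (with at least one coefficient not equal to zero), the vectors are linearly dependent.

The family $(v_i)_{i \in I}$ is linearly dependent exactly when there is a finite non-empty subset $J \subseteq I$ and coefficients $(a_j)_{j \in J}$, at least one of which is non-zero, such that $\sum_{j \in J} a_j v_j = 0$.

The zero vector $0$ on the right-hand side is an element of the vector space $V$, in contrast to the scalar $0$, which is an element of the field $K$.

The term is also used for subsets of a vector space: A subset $S \subseteq V$ is called linearly independent if every finite linear combination of pairwise distinct vectors from $S$ represents the zero vector only when all of its coefficients are zero.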
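For a finite family of coordinate vectors, the definition can be checked mechanically: the family is linearly independent exactly when Gaussian elimination leaves as many non-zero rows as there are vectors. The following is a minimal sketch under that assumption; the helper names `rank` and `is_linearly_independent` are hypothetical, and exact fractions are used so that no rounding tolerance is needed.

```python
from fractions import Fraction

def rank(vectors):
    """Rank of a list of equal-length vectors, by Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    n_cols = len(rows[0]) if rows else 0
    r = 0  # number of pivot rows found so far
    for col in range(n_cols):
        # Look for a row at or below position r with a non-zero entry in this column.
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # Eliminate this column from every row below the pivot row.
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def is_linearly_independent(vectors):
    """True if only the trivial combination of the vectors gives the zero vector."""
    return rank(vectors) == len(vectors)

# Illustrative inputs (assumed for this sketch).
print(is_linearly_independent([(1, 0, 0), (0, 1, 0), (0, 0, 1)]))    # True
print(is_linearly_independent([(2, -1, 1), (1, 0, 1), (3, -1, 2)]))  # False: third = first + second
```

The sketch treats the input as a family, so a repeated vector makes the family dependent, in line with the definition for families rather than sets.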

Other characterisations and simple properties

- The vectors $v_1, \dots, v_n$ are (unless $n = 1$ and $v_1 = 0$) linearly independent exactly when none of them can be represented as a linear combination of the others.

This statement does not apply in the more general context of modules over rings.

- A variant of this statement is the dependence lemma: If $v_1, \dots, v_n$ are linearly independent and $v_1, \dots, v_n, w$ are linearly dependent, then $w$ can be written as a linear combination of $v_1, \dots, v_n$.

- If a family of vectors is linearly independent, then each subfamily of this family is also linearly independent. If, on the other hand, a family is linearly dependent, then every family that contains this dependent family is also linearly dependent.

- Elementary transformations of the vectors do not change the linear dependence or the linear independence.

- If the zero vector is one of the $v_i$ (here: let $v_j = 0$), they are linearly dependent: the zero vector can be generated by setting all $a_i = 0$ except for $a_j$, which, as the coefficient of the zero vector $v_j$, may be arbitrary (in particular, non-zero).

- In a $d$-dimensional space, a family of more than $d$ vectors is always linearly dependent (see barrier lemma).
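The dependence lemma from the list above can be made concrete: when a vector depends on a linearly independent family, its coefficients are found by solving a linear system. The following is a minimal sketch, assuming coordinate vectors with rational entries; the helper name `express_as_combination` is hypothetical.

```python
from fractions import Fraction

def express_as_combination(vectors, w):
    """Return coefficients c with sum(c[j] * vectors[j]) == w, or None.

    Assumes `vectors` is linearly independent; returns None if that
    assumption fails or if w is not in the span of the vectors.
    """
    k, dim = len(vectors), len(w)
    # Augmented matrix: one row per coordinate, one column per vector, last column w.
    aug = [[Fraction(vectors[j][i]) for j in range(k)] + [Fraction(w[i])]
           for i in range(dim)]
    row = 0
    for col in range(k):
        pivot = next((i for i in range(row, dim) if aug[i][col] != 0), None)
        if pivot is None:
            return None  # the given vectors were not linearly independent
        aug[row], aug[pivot] = aug[pivot], aug[row]
        p = aug[row][col]
        aug[row] = [x / p for x in aug[row]]  # scale the pivot entry to 1
        for i in range(dim):
            if i != row and aug[i][col] != 0:
                f = aug[i][col]
                aug[i] = [a - f * b for a, b in zip(aug[i], aug[row])]
        row += 1
    # Any remaining row must read 0 = 0, otherwise w lies outside the span.
    if any(aug[i][k] != 0 for i in range(row, dim)):
        return None
    return [aug[j][k] for j in range(k)]

# Illustrative inputs (assumed for this sketch): w = 1 * v1 + 1 * v2.
coeffs = express_as_combination([(2, -1, 1), (1, 0, 1)], (3, -1, 2))
print(coeffs)  # [Fraction(1, 1), Fraction(1, 1)]
```

A return value of `None` for a vector outside the span corresponds to the case in which the extended family is still linearly independent.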

Determination by means of determinant

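If $n$ vectors of an $n$-dimensional vector space are given as row or column vectors with respect to a fixed basis, their linear independence can be checked by combining them into an $n \times n$ matrix and computing its determinant. The vectors are linearly independent exactly when the determinant is non-zero.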
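The determinant criterion can be sketched in a few lines. The example below assumes exactly as many vectors as the dimension of the space and rational entries; the function name `determinant` is a hypothetical helper, implemented here by Gaussian elimination rather than by any particular library routine.

```python
from fractions import Fraction

def determinant(matrix):
    """Determinant of a square matrix, by Gaussian elimination with exact fractions."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        pivot = next((i for i in range(col, n) if a[i][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot in this column: the matrix is singular
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        det *= a[col][col]
        for i in range(col + 1, n):
            factor = a[i][col] / a[col][col]
            a[i] = [x - factor * y for x, y in zip(a[i], a[col])]
    return det

# Illustrative inputs (assumed for this sketch): each row is one vector.
print(determinant([(1, 0, 0), (0, 1, 0), (0, 0, 1)]))    # 1 -> independent
print(determinant([(2, -1, 1), (1, 0, 1), (3, -1, 2)]))  # 0 -> dependent
```

The test only applies to square arrangements; for fewer or more vectors than the dimension, a rank computation is the natural replacement.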

Basis of a vector space

→ Main article: Basis (vector space)

The concept of linearly independent vectors plays an important role in the definition and handling of vector space bases. A basis of a vector space is a linearly independent generating system. Bases make it possible to calculate with coordinates, especially in finite-dimensional vector spaces.

Examples

Single vector

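Let $v$ be an element of the vector space $V$ over $K$. Then the single vector $v$ is linearly independent exactly when it is not the zero vector. For it follows from the definition of the vector space that if $a v = 0$ with $a \in K$ and $v \in V$, then only $a = 0$ or $v = 0$ can hold.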

Vectors in the plane

The vectors

Proof: For

i.e.

Then we have

so

This system of equations is satisfied only by the trivial solution, in which all coefficients are zero; the vectors are therefore linearly independent.
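As a worked instance of this kind of argument, assume the two vectors are $u = (1, 1)$ and $v = (-3, 2)$ in $\mathbb{R}^2$; this choice is an illustrative assumption. For $a, b \in \mathbb{R}$ the condition $a u + b v = 0$ unfolds as follows:

```latex
\begin{aligned}
a\,(1,1) + b\,(-3,2) &= (0,0) \\
(a - 3b,\; a + 2b) &= (0,0) \\
a - 3b = 0 \quad &\text{and} \quad a + 2b = 0 \\
(a + 2b) - (a - 3b) = 5b &= 0 \;\Rightarrow\; b = 0 \;\Rightarrow\; a = 0
\end{aligned}
```

Only the trivial solution $a = b = 0$ remains, so under this assumption $u$ and $v$ are linearly independent.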

Standard basis in n-dimensional space

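In the vector space $K^n$, consider the following elements (the natural or standard basis of $K^n$):

$$e_1 = (1, 0, 0, \dots, 0), \quad e_2 = (0, 1, 0, \dots, 0), \quad \dots, \quad e_n = (0, 0, 0, \dots, 1)$$

Then the vector family $(e_i)_{i \in \{1, \dots, n\}}$ is linearly independent.

Proof: For $a_1, a_2, \dots, a_n \in K$, let

$$a_1 e_1 + a_2 e_2 + \dots + a_n e_n = 0.$$

But then also

$$a_1 e_1 + a_2 e_2 + \dots + a_n e_n = (a_1, a_2, \dots, a_n) = (0, 0, \dots, 0),$$

and it follows that $a_i = 0$ for all $i \in \{1, 2, \dots, n\}$.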

Functions as vectors

Let

Proof: Let

for all

By subtracting the first equation from the second equation, we obtain

Since this equation must hold

It follows again that (for

Since the first equation is only solvable for
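As a worked instance of an argument of this shape, assume the two functions are $e^{t}$ and $e^{2t}$ in the vector space of all functions $f\colon \mathbb{R} \to \mathbb{R}$, and assume the second equation is obtained by differentiating the first with respect to $t$. Both the choice of functions and the differentiation step are illustrative assumptions.

```latex
\begin{aligned}
a\,e^{t} + b\,e^{2t} &= 0 && \text{for all } t \in \mathbb{R} \\
a\,e^{t} + 2b\,e^{2t} &= 0 && \text{(derivative of the first equation)} \\
b\,e^{2t} &= 0 && \text{(second equation minus first)} \\
b &= 0 && \text{(set } t = 0\text{)} \\
a\,e^{t} = 0 \;&\Rightarrow\; a = 0 && \text{(insert } b = 0 \text{ into the first equation, set } t = 0\text{)}
\end{aligned}
```

Since only $a = 0$ and $b = 0$ satisfy the first equation for all $t$, the two assumed functions are linearly independent.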

See also: Wronski determinant

Series

Let

but nevertheless are

Rows and columns of a matrix

A further question is whether the rows of a matrix, regarded as vectors, are linearly independent. If the rows of a square matrix are linearly independent, the matrix is called regular (invertible), otherwise singular. The columns of a square matrix are linearly independent exactly when its rows are linearly independent. Example of a sequence of regular matrices: Hilbert matrix.
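The equivalence of row and column independence, and the regularity of Hilbert matrices, can be checked for small cases. The sketch below assumes the usual definition of the Hilbert matrix with entries $1/(i + j - 1)$ for $i, j = 1, \dots, n$; the helper names `rank`, `transpose` and `hilbert` are hypothetical.

```python
from fractions import Fraction

def rank(matrix):
    """Number of linearly independent rows, by Gaussian elimination."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    r = 0
    for col in range(n_cols):
        pivot = next((i for i in range(r, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[r], rows[pivot] = rows[pivot], rows[r]
        for i in range(r + 1, len(rows)):
            factor = rows[i][col] / rows[r][col]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
    return r

def transpose(matrix):
    """Swap rows and columns, so that rank(transpose(m)) counts independent columns."""
    return [list(col) for col in zip(*matrix)]

def hilbert(n):
    """n x n Hilbert matrix with exact entries 1 / (i + j + 1), zero-based i and j."""
    return [[Fraction(1, i + j + 1) for j in range(n)] for i in range(n)]

for n in range(1, 6):
    h = hilbert(n)
    # Rows and columns are independent together: both ranks equal n.
    print(n, rank(h) == n, rank(transpose(h)) == n)

singular = [(1, 2), (2, 4)]  # second row is twice the first
print(rank(singular), rank(transpose(singular)))  # 1 1
```

Exact fractions matter here: Hilbert matrices are badly conditioned, so a floating-point rank check with a tolerance can misreport larger instances as singular.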

Rational independence

Real numbers that are linearly independent when only rational numbers are allowed as coefficients are called rationally independent or incommensurable. The numbers

Generalisations

The definition of linearly independent vectors can be applied analogously to elements of a module. In this context, linearly independent families are also called free (see also: free module).

The notion of linear independence can be further generalised to a consideration of independent sets, see Matroid.
