Intelligence quotient


This article deals with the intelligence quotient as a concept of differential psychology. For general information on intelligence, see Intelligence; for criticism of this concept, see Criticism of the concept of intelligence.

The intelligence quotient (IQ) is a score obtained from an intelligence test. It assesses intellectual ability relative to a reference group, either in general (general intelligence) or within a specific domain (e.g. individual factors of intelligence). An IQ always refers to the particular test used, because there is no scientifically recognised, unambiguous definition of intelligence.

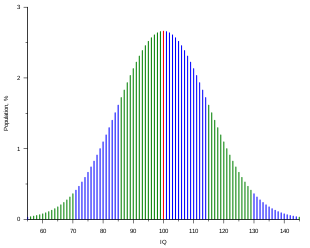

The "population-representative" reference group can be specific to an age or school grade (especially for children and adolescents) or to an educational level or occupation (for example, high school students or occupational groups) (compare normalization). Today's tests that use an IQ norm derive their norm values from the distribution of test results in a sufficiently large sample. Assuming that intelligence is normally distributed, they usually apply a normal rank transformation to the results and convert them into a scale with a mean of 100 and a standard deviation of 15. Under a normal distribution, about 68% of the people in this reference group have an IQ between 85 and 115. This is the so-called average range, where most of the probability mass of the density function lies.
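The normal rank transformation mentioned above can be sketched in Python as follows. This is a minimal illustration, not the procedure of any particular test. It assumes that percentile ranks are computed with the (rank − 0.5)/n plotting position and that tied raw scores share their average rank; test manuals use various conventions. The function name `normal_rank_iq` is hypothetical. The last lines check the 68% figure for the range from 85 to 115.

```python
from statistics import NormalDist

def normal_rank_iq(raw_scores):
    """Map raw scores to IQ values via a normal rank transformation.

    Assumptions: (rank - 0.5) / n plotting position; ties share their mean rank.
    """
    n = len(raw_scores)
    ordered = sorted(raw_scores)
    standard_normal = NormalDist()            # mean 0, SD 1
    iq_for = {}
    for score in set(raw_scores):
        first = ordered.index(score) + 1      # lowest 1-based rank of this score
        last = first + ordered.count(score) - 1
        mean_rank = (first + last) / 2        # tied scores share their average rank
        proportion = (mean_rank - 0.5) / n    # strictly between 0 and 1
        z = standard_normal.inv_cdf(proportion)  # area-equivalent z-score
        iq_for[score] = 100 + 15 * z          # rescale to mean 100, SD 15
    return [iq_for[score] for score in raw_scores]

# Share of a normal IQ distribution lying between 85 and 115 (one SD either side)
iq_dist = NormalDist(mu=100, sigma=15)
share = iq_dist.cdf(115) - iq_dist.cdf(85)
print(f"{share:.1%}")  # 68.3%
```

The transformation uses only ranks, so it yields normally distributed norm values even when the raw scores themselves are skewed. That is why it relies on the assumption that the underlying ability is normally distributed.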

Differences between tests arise from how representative the norming sample is of this reference group (sample size, representativeness of participant recruitment). When interpreting an IQ, attention must be paid to the type of measurement procedure (e.g. the type of intelligence test), the concept of intelligence behind it, and the reference group used for standardization. All of these influence the stability and generalizability of the estimate of a person's intelligence. Norms must also be checked for stability over time and revised when they become outdated.

Among other fields, cognitive science also deals with the measurement of intelligence.

IQ tests are constructed so that the results are approximately normally distributed for a sufficiently large population sample. Color-coded ranges each correspond to one standard deviation.

Calculations

Historical

Alfred Binet developed the first practical intelligence test, the Binet-Simon test, in 1905, and expressed mental ability as an "intelligence age". The test consisted of tasks of increasing difficulty. Each task was chosen so that children of the corresponding age group could solve it as reliably as possible. For example, an averagely developed eight-year-old should be able to solve all tasks for his age group (and below), but not the tasks for nine-year-olds. A child who could not solve all the tasks of its age group had a lower "intelligence age"; a child who also solved tasks of the next age group had a higher one.

William Stern of the University of Breslau divided this intelligence age by the child's chronological age and thus introduced the intelligence quotient in 1912.

Lewis M. Terman of Stanford University further developed the Binet-Simon test, which Goddard had translated from French into English. To eliminate the decimal places, he multiplied the quotient of intelligence age and chronological age by 100.
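Stern's quotient and Terman's scaling amount to a one-line calculation. The sketch below computes the historical ratio IQ, 100 × intelligence age / chronological age, with both ages given in years. The function name `ratio_iq` is only illustrative.

```python
def ratio_iq(intelligence_age, chronological_age):
    """Historical ratio IQ: Stern's quotient multiplied by 100 (Terman)."""
    if chronological_age <= 0:
        raise ValueError("chronological age must be positive")
    return 100 * intelligence_age / chronological_age

# An eight-year-old who also solves the tasks for ten-year-olds
print(ratio_iq(10, 8))  # 125.0
```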

Modern

Because intelligence age increases more slowly than chronological age, the IQ calculated with Stern's formula declines steadily with age. Terman also recognized this problem while developing the test further. To address it, he normed the test separately for different age groups, adjusting the distribution of scores to a normal distribution for each age. In the 1937 version of the Stanford-Binet test, the standard deviation varies between 15 and 16 IQ points depending on age (cf. Valencia and Suzuki, 2000, p. 5 ff.).
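The following sketch illustrates both the problem and Terman's remedy. Purely for illustration, it assumes that intelligence age stops increasing at 16. The age-group norms in `AGE_NORMS` are invented numbers. The linear z-score conversion is a simplification of a full normalization per age group, and it fixes the SD at 15 as on today's deviation scale.

```python
PLATEAU_AGE = 16  # illustrative assumption, not an empirical value

def ratio_iq_with_plateau(chronological_age):
    """Ratio IQ of a person who is exactly average for their age,
    if intelligence age stops growing at PLATEAU_AGE."""
    intelligence_age = min(chronological_age, PLATEAU_AGE)
    return 100 * intelligence_age / chronological_age

for age in (8, 16, 32, 48):
    print(age, round(ratio_iq_with_plateau(age), 1))  # second column: 100.0, 100.0, 50.0, 33.3

# Hypothetical norms per age group: (mean raw score, SD of raw scores)
AGE_NORMS = {8: (20.0, 5.0), 16: (40.0, 8.0), 32: (40.0, 8.0), 48: (40.0, 8.0)}

def age_normed_iq(raw_score, age_group):
    """IQ relative to the person's own age group (linear simplification)."""
    mean, sd = AGE_NORMS[age_group]
    return 100 + 15 * (raw_score - mean) / sd

print(age_normed_iq(40.0, 48))  # 100.0: an average 48-year-old stays at 100
```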

The IQ calculation was originally developed only for children, specifically for school-readiness tests. David Wechsler later extended it to adults by scaling scores relative to the population with a mean of 100. Today's deviation IQ scale has a mean of 100 and a standard deviation (SD) of 15. It is used, for example, in the Hamburg-Wechsler intelligence test series. The public tends to overrate IQ as a "label" for a person, for example with regard to its stability and universality. For this reason, some tests deliberately use other norm scales.

Conversions

Results can also be expressed on other norm scales, such as percentile ranks. Because these scales refer to the normal distribution, values from another scale can be converted into the IQ scale (mean 100, standard deviation 15) without loss of information:

$$\mathrm{IQ} = 100 + 15 \cdot \frac{x - \mu}{\sigma}$$

(See also Standardization (Statistics).) Here $x$ is the scale value obtained in the test used, $\mu$ is the mean of the scale used, and $\sigma$ is the standard deviation of the scale used.
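Percentile ranks, mentioned above, are converted through the cumulative normal distribution rather than linearly. Below is a minimal sketch using Python's `statistics.NormalDist`. It assumes exactly normally distributed scores, and the function names are illustrative.

```python
from statistics import NormalDist

IQ_SCALE = NormalDist(mu=100, sigma=15)  # deviation IQ scale

def percentile_to_iq(percentile_rank):
    """IQ value below which the given percentage of the reference group lies."""
    if not 0 < percentile_rank < 100:
        raise ValueError("percentile rank must lie strictly between 0 and 100")
    return IQ_SCALE.inv_cdf(percentile_rank / 100)

def iq_to_percentile(iq):
    """Percentage of the reference group scoring below the given IQ."""
    return 100 * IQ_SCALE.cdf(iq)

print(round(percentile_to_iq(50)))      # 100
print(round(iq_to_percentile(130), 1))  # 97.7
```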

Example

The IST-2000 uses the so-called standard score (SW) scale, which has a mean of 100 and a standard deviation of 10. A standard score of 110 can be converted to a deviation IQ as follows:

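Inserting the test value 110, the scale mean 100 and the scale standard deviation 10 into the conversion formula gives:

$$\mathrm{IQ} = 100 + 15 \cdot \frac{110 - 100}{10} = 115$$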

A standard score of 110 lies exactly one standard deviation above the mean, so the corresponding deviation IQ must also lie exactly one standard deviation above the mean. As calculated, this value is 115. Conversely, a deviation IQ of 85, exactly one standard deviation below the mean, corresponds to an IST standard score of 90 (see also Linear Transformation).
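The conversion in this example can be sketched in both directions as a small Python function. `convert_scale` is a hypothetical helper name. It applies the linear transformation via the z-score.

```python
def convert_scale(value, from_mean, from_sd, to_mean=100.0, to_sd=15.0):
    """Linearly convert a norm-scale value to another scale via its z-score."""
    z = (value - from_mean) / from_sd  # distance from the mean in SD units
    return to_mean + to_sd * z

# IST-2000 standard score (SW: mean 100, SD 10) -> deviation IQ (mean 100, SD 15)
print(convert_scale(110, 100, 10))                        # 115.0
# Deviation IQ 85 -> SW scale (inverse direction)
print(convert_scale(85, 100, 15, to_mean=100, to_sd=10))  # 90.0
```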

[Image: Alfred Binet]

Reliability and measurement error

When interpreting results, the measurement error and the error probability one is willing to accept must be taken into account (cf. type I error). The error probability determines the width of the confidence interval. That probability in turn depends on how much certainty the diagnostic decision requires.
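Classical test theory can illustrate how the accepted error probability translates into an interval. In that framework, the standard error of measurement of a test with reliability r is SEM = SD · √(1 − r). The sketch below uses a reliability of 0.90, chosen only for illustration. It builds the simple interval around the observed score; some test manuals instead centre the interval on an estimated true score. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def iq_confidence_interval(observed_iq, reliability, confidence=0.95, sd=15.0):
    """Observed-score confidence interval based on classical test theory."""
    if not 0.0 <= reliability <= 1.0:
        raise ValueError("reliability must lie between 0 and 1")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must lie strictly between 0 and 1")
    sem = sd * math.sqrt(1.0 - reliability)    # standard error of measurement
    alpha = 1.0 - confidence                   # accepted error probability
    z = NormalDist().inv_cdf(1.0 - alpha / 2)  # two-sided critical value
    return observed_iq - z * sem, observed_iq + z * sem

low, high = iq_confidence_interval(100, reliability=0.90)
print(round(low, 1), round(high, 1))  # 90.7 109.3
```

Raising the confidence to 0.99, which lowers the accepted error probability, widens the interval. This is the trade-off the paragraph describes.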

In 2014, in Hall v. Florida, the US Supreme Court had to decide the IQ score below which an offender should be considered intellectually disabled and therefore exempt from execution after a death sentence. In this case, the American Psychological Association and the American Association on Intellectual and Developmental Disabilities argued that IQ tests have a margin of error of 10 points in either direction.

Intelligence tests must be periodically re-normed (or the validity of their norms checked) to keep the average score at 100. Until the 1990s, IQ test results in industrialized countries showed a steadily rising average. There is no consensus on the cause of this "Flynn effect". Proposed explanations include more evenly distributed schooling and the informational influence of mass media. IQ values are therefore not comparable without knowledge of the underlying test and its norms. For the field of aptitude testing, DIN 33430 suggests eight years as a guideline interval for reviewing norms.
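The sketch below checks the age of a set of norms against the eight-year guideline. It also gives a rough estimate of how far outdated norms may overstate a score. The helper names are hypothetical. Treating the guideline as "review once norms are at least eight years old" is an interpretation. The constant gain of three points per decade, the figure given in the Questions and Answers below, is a simplifying assumption, since the text notes that the rise was observed until the 1990s.

```python
def norms_due_for_review(norming_year, test_year, max_age_years=8):
    """Hypothetical check: True once the norms are at least max_age_years old."""
    return test_year - norming_year >= max_age_years

def estimated_norm_inflation(norming_year, test_year, points_per_decade=3.0):
    """Rough number of IQ points by which outdated norms may overstate a score,
    ASSUMING a constant Flynn-effect gain (a simplification)."""
    return points_per_decade * (test_year - norming_year) / 10

print(norms_due_for_review(2000, 2012))      # True
print(estimated_norm_inflation(2000, 2012))  # 3.6
```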

Questions and Answers

Q: What is an intelligence quotient (IQ)?

A: An intelligence quotient (IQ) is a number which is the result of a standard test to measure intelligence.

Q: Who developed the idea of measuring intelligence?

A: The idea of measuring intelligence was developed by the British scientist Francis Galton in his book Hereditary Genius, published in the late 19th century.

Q: How does IQ measure a person's score?

A: IQ is a comparative measure: it tells how far above or below the average of a reference group a person's score lies.

Q: What modern IQ test is used today?

A: The Wechsler Adult Intelligence Scale is one modern IQ test in use today. It states where the subject's score falls on a Gaussian bell curve with a mean of 100 and a standard deviation of 15.

Q: What other aspects can be predicted from an IQ score?

A: An IQ score can predict other aspects such as onset of dementia and Alzheimer’s disease, social status, and educational achievement or special needs up to 11 years later.

Q: To what extent is IQ inherited?

A: There is still disagreement about the extent to which IQ is inherited; some believe it depends on both genetics and environment, while others disagree.

Q: How have average IQ scores changed over time?

A: Average IQ scores for many populations have risen by about three points per decade since the early 20th century, a trend known as the Flynn effect.
