Differential calculus

Differential calculus is an essential part of analysis and thus a field of mathematics. Its central topic is the calculation of local changes of functions. While a function assigns output values to its input values, much like a table, differential calculus determines how much the output values change after very small changes in the input values. It is closely related to integral calculus; together the two are known as infinitesimal calculus.

The derivative of a function serves to investigate its local changes and is at the same time the basic concept of differential calculus. Instead of the derivative, one also speaks of the differential quotient, whose geometric counterpart is the slope of the tangent. According to Leibniz, the derivative is the proportionality factor between infinitesimal changes of the input value and the resulting, likewise infinitesimal, changes of the function value. For example, if increasing the input by a very small unit increases the output of the function by nearly two units, the derivative is taken to have the value 2 (= 2 units / 1 unit). A function is called differentiable if such a proportionality factor exists. Equivalently, the derivative at a point is defined as the slope of the linear function that, among all linear functions, locally best approximates the change of the function at the point under consideration. Accordingly, the derivative is also called the linearization of the function. Linearizing a possibly complicated function in order to determine its rate of change has the advantage that linear functions have particularly simple properties.

In many cases, differential calculus is an indispensable tool for building mathematical models that should represent reality as accurately as possible, as well as for their subsequent analysis. In the situation being modeled, the counterpart of the derivative is often the instantaneous rate of change. For example, the derivative of the position-time (or displacement-time) function of a particle with respect to time is its instantaneous velocity, and the derivative of the instantaneous velocity with respect to time gives the instantaneous acceleration. In economics, one often speaks of marginal rates instead of the derivative, for example marginal cost or the marginal productivity of a production factor.

In geometric language, the derivative is a generalized slope. The geometric term slope is originally defined only for linear functions whose function graph is a straight line. The derivative of any function at a point

In arithmetic, the derivative of a function

Graph of a function (blue) and a tangent to the graph (red). The slope of the tangent is the derivative of the function at the marked point.

Introduction

Introduction by means of an example

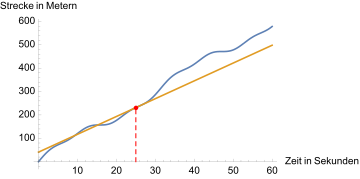

If a car is driving on a road, a table can be created in which the distance covered since the start of the recording is entered for each point in time. In practice, it is sensible not to make such a table too close-meshed, for example by making a new entry only every 3 seconds over a period of 1 minute, which would require only 20 measurements. In theory, however, such a table can be made arbitrarily close-meshed if every point in time is to be taken into account. The previously discrete data, i.e. data with gaps between the entries, then merge into a continuum. The present is then interpreted as a point in time, i.e. as an infinitely short period of time. At the same time, the car has covered a theoretically exactly measurable distance at every point in time, and if it does not slow down to a standstill or even reverse, the distance will increase continuously, i.e. it will never be the same at two different points in time.


Exemplary representation of a table in which a new measurement is entered every 3 seconds. Under such conditions, only average velocities in the periods 0 to 3, 3 to 6, etc. seconds can be calculated. Since the distance covered always increases, the car seems to move only forward.


Potential transition to an arbitrarily close-meshed table, which takes the form of a curve after all points have been entered. Now a distance is assigned to each time point between 0 and 60 seconds. Regions in which the curve runs more steeply upwards correspond to time periods in which a larger number of meters per time unit is covered. In regions with an almost constant number of meters, for example in the range 15-20 seconds, the car drives slowly and the curve runs flat.
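The average velocities that such a coarse table allows can be sketched in a few lines of Python. The measurement values below are invented for illustration and are not taken from the figure; `average_velocities` is a hypothetical helper name.

```python
# Hypothetical time-distance table: one measurement every 3 seconds.
times = [0, 3, 6, 9, 12]                   # seconds since the start of the recording
distances = [0.0, 12.0, 30.0, 54.0, 60.0]  # metres covered (invented values)


def average_velocities(ts, ss):
    """Return the average velocity (m/s) on each interval [ts[i], ts[i+1]]."""
    return [(ss[i + 1] - ss[i]) / (ts[i + 1] - ts[i]) for i in range(len(ts) - 1)]


velocities = average_velocities(times, distances)
for t0, t1, v in zip(times, times[1:], velocities):
    print(f"{t0:>2} s to {t1:>2} s: average velocity {v:.1f} m/s")
```

Each value is a difference of distances divided by a difference of times. Making the table finer shrinks the intervals, which is the step that leads from average velocities towards the velocity at a single point in time.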

The motivation behind deriving a time-distance table or function is to be able to state how fast the car is moving at a given moment. From a time-distance table, the corresponding time-speed table is to be derived. The background is that speed is a measure of how much the distance traveled changes over time. If the speed is high, the distance increases sharply, while a low speed leads to little change. Since each time point has also been assigned a distance, such an analysis should in principle be possible, because with the knowledge of the distance traveled

Thus, if

The differences in numerator and denominator have to be formed, since one is only interested in the distance

If, on the other hand, we want to move on to a "perfectly fitting" time-velocity table, the term "average velocity in a time interval" must be replaced by "velocity at a point in time". To do this, a time

exactly the same velocities. Now there are two possibilities when studying the velocities. Either, they do not show any tendency to approach a certain finite value in the considered limit process. In this case, no velocity valid at time

and expresses exactly the

The principle of differential calculus

The example of the last section is particularly simple if the increase of the distance of the car with time is uniform, i.e. linear. In this case, one also speaks of a proportionality between time and distance, if at the beginning of the recording (

For the more general case, replacing 2 by any number

In general, the car moves forward in

It can therefore be stated:

- Linear functions. For linear functions (note that the graph does not have to be a line through the origin), the derivative is explained as follows. If the function under consideration has the form $f(x) = mx + c$, then the instantaneous rate of change at each point has the value $m$, so the corresponding derivative function satisfies $f'(x) = m$. Thus, the derivative can be read directly from the expression $f(x) = mx + c$. In particular, every constant function $f(x) = c$ has the derivative $f'(x) = 0$, since changing the input values does not change the output value. The measure of change is therefore 0 everywhere.
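That the rate of change of a linear function is the same everywhere can be checked with a minimal sketch. The slope 2 and intercept 5 are arbitrary illustrative choices, and `difference_quotient` is a hypothetical helper name.

```python
def difference_quotient(f, x, h):
    """Change of the output divided by the change h of the input."""
    return (f(x + h) - f(x)) / h


def line(x):
    # Illustrative linear function with slope 2 and intercept 5.
    return 2.0 * x + 5.0


def constant(x):
    # A constant function: the output ignores the input.
    return 4.0


# The quotient does not depend on the point x or on the step h.
line_slopes = [difference_quotient(line, x, h)
               for x in (-3.0, 0.0, 7.5) for h in (1.0, 0.5, 0.25)]
constant_slopes = [difference_quotient(constant, x, 0.5) for x in (-3.0, 0.0, 7.5)]
print(line_slopes)
print(constant_slopes)
```

For the line every quotient equals the slope, and for the constant function every quotient is zero, so no limit process is needed in these two cases.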

Things can be much more difficult if a movement is not uniform. In this case, the graph of the time-distance function may look completely different from a straight line. The shape of the time-distance function then shows that the car's motion is very varied, which may be due to traffic lights, curves, traffic jams and other road users, for example. Since such courses are particularly common in practice, it is convenient to extend the notion of the derivative to non-linear functions as well. Here, however, one quickly encounters the problem that, at first glance, there is no clear proportionality factor that precisely expresses the local rate of change. Therefore, the only possible strategy is to linearize the non-linear function in order to reduce the problem to the simple case of a linear function. This technique of linearization is the core of differential calculus and is of great importance in analysis, since it helps to reduce complicated processes locally to very easily understood ones, namely linear processes.

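The strategy can be exemplified by the non-linear function $f(x) = x^2$, which is compared below with the linear function $g(x) = 2x - 1$ near the point $x = 1$:

| $x$ | 0.5 | 0.75 | 0.99 | 0.999 | 1 | 1.001 | 1.01 | 1.1 | 2 | 3 | 4 | 100 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| $x^2$ | 0.25 | 0.5625 | 0.9801 | 0.998001 | 1 | 1.002001 | 1.0201 | 1.21 | 4 | 9 | 16 | 10000 |
| $2x - 1$ | 0 | 0.5 | 0.98 | 0.998 | 1 | 1.002 | 1.02 | 1.2 | 3 | 5 | 7 | 199 |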

That the linearization is only a local phenomenon is shown by the increasing deviation of the function values at more distant input values. The linear function

It can therefore be stated:

- Non-linear functions. If the instantaneous rate of change of a non-linear function is to be determined at a certain point, it must be linearized there (if possible). Then the slope of the approximate linear function is the local rate of change of the non-linear function under consideration, and the same view applies as for derivatives of linear functions. In particular, the rates of change of a non-linear function are not constant, but will change from point to point.
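The local nature of a linearization can be made concrete with a small sketch. It assumes the function x² and the line 2x − 1, which is its tangent at x = 1; the function names are illustrative.

```python
def square(x):
    return x * x


def tangent_at_one(x):
    # Tangent line of x^2 at x = 1: value 1 there, slope 2.
    return 2 * x - 1


xs = [0.5, 0.75, 0.99, 0.999, 1, 1.001, 1.01, 1.1, 2, 3, 4, 100]
errors = [square(x) - tangent_at_one(x) for x in xs]

for x, err in zip(xs, errors):
    print(f"x = {x:>7}: x^2 - (2x - 1) = {err:.6f}")
```

The deviation equals (x − 1)², so it is tiny close to 1 and grows quickly further away, which is why the linear function is only a local stand-in for the curve.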

The exact determination of the correct linearization of a non-linear function at a given point is the central task of differential calculus. The question is whether it is possible to calculate from a curve such as

The starting point is the explicit determination of the limit of the difference quotient

$$f'(x_0) = \lim_{h \to 0} \frac{f(x_0 + h) - f(x_0)}{h},$$

from which for very small $h$ by simple transformation the expression

$$f(x_0 + h) \approx f(x_0) + f'(x_0)\,h$$

emerges. The right-hand side is a function linear

Exemplary: If

where the gradient of

Exemplary calculation of the derivative

The approach to the derivative calculation is first the difference quotient. This can be demonstrated by the functions

In the case of the binomial formula

In the last step the term

Another important type of function is exponential functions, such as

which is based on the principle that the product of

This triggers the important (and for exponential functions characteristic) effect that the derivative must agree with the original function up to a constant factor:

This constant factor is the derivative at the point 0. Strictly speaking, it must be verified that this derivative exists at all. If so, is

The calculation rules are described in detail in the section Derivative calculation.
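The statement that an exponential function and its derivative differ only by a constant factor, namely the derivative at 0, can be checked numerically. The sketch below assumes base 2 and uses a central difference as a stand-in for the limit; `central_difference` is a hypothetical helper.

```python
import math


def central_difference(f, x, h=1e-6):
    """Symmetric difference quotient as a numerical approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def power_of_two(x):
    # Illustrative exponential function with base 2.
    return 2.0 ** x


# Derivative at the point 0: the constant factor.
factor = central_difference(power_of_two, 0.0)

# Ratio derivative / function value at several other points.
ratios = [central_difference(power_of_two, x) / power_of_two(x)
          for x in (-1.0, 0.5, 3.0)]

print(factor, math.log(2.0))
print(ratios)
```

Within numerical accuracy all ratios agree with the factor, which for base 2 is the natural logarithm of 2.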

Classification of the application possibilities

Extreme value problems

→ Main article: Extreme value problem

An important application of the differential calculus is that the derivative can be used to determine local extreme values of a curve. So instead of having to search mechanically for high or low points using a table of values, the calculus provides a direct answer in some cases. If there is a high or low point, the curve has no "real" slope at this point, which is why the optimal linearization has a slope of 0. For the exact classification of an extreme value, however, further local data of the curve are necessary, because a slope of 0 is not sufficient for the existence of an extreme value (let alone a high or low point).

In practice, extreme value problems typically occur when processes, for example in the economy, are to be optimized. Often there are unfavorable results at the marginal values, but in the direction of the "middle" there is a steady increase, which then has to be maximized somewhere. For example, the optimal choice of a sales price: If the price is too low, the demand for a product is very high, but the production cannot be financed. On the other hand, if it is too high, in extreme cases it will not be bought at all. Therefore, an optimum lies somewhere "in the middle". The prerequisite for this is that the relationship can be represented in the form of a (continuously) differentiable function.
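The price example can be turned into a small sketch under invented assumptions: a unit cost of 10 and a demand of 100 − 2·price, neither of which comes from the text. The optimum is located where the derivative of the profit vanishes, here found by bisection.

```python
def profit(price):
    # Hypothetical model: margin per unit times number of units sold.
    return (price - 10.0) * (100.0 - 2.0 * price)


def profit_derivative(price):
    # Derivative of -2 p^2 + 120 p - 1000 with respect to p.
    return 120.0 - 4.0 * price


def bisect_root(g, lo, hi, tol=1e-10):
    """Find a zero of g in [lo, hi]; assumes g(lo) and g(hi) have opposite signs."""
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if g(lo) * g(mid) <= 0:
            hi = mid
        else:
            lo = mid
    return (lo + hi) / 2


best_price = bisect_root(profit_derivative, 10.0, 50.0)
print(best_price, profit(best_price))
```

In this toy model the profit is negative at very low prices, zero when nobody buys, and largest in between, at the point where the slope of the profit curve is 0.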

The examination of a function for extreme points is part of a curve discussion. The mathematical background is provided in the section Application of higher derivatives.

Mathematical modeling

In mathematical modeling, complex problems are captured and analyzed in mathematical language. Depending on the problem, the aim within such a model may be to investigate correlations or causal relationships, or to make forecasts.

Especially in the context of differential equations, differential calculus is a central tool for modeling. These equations occur, for example, when there is a causal relationship between the stock of a quantity and its change over time. An everyday example could be:

The more inhabitants a city has, the more people want to move there.

More concretely, this could mean, for example, that with

With the help of differential calculus, a model can in many cases be derived from such a causal relationship between stock and change, which resolves the complex relationship so that a stock function can be stated explicitly at the end. If, for example, the value 10 years is then inserted into this function, the result is a forecast for the number of city residents in 2030. In the case of the model above, a stock function

with the natural exponential function (natural means that the proportionality factor between stock and change is simply equal to 1), and for 2030 the estimated forecast is
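The link between "change proportional to stock" and the exponential function can be illustrated with a rough numerical sketch. It assumes the proportionality factor 1 mentioned above and measures the population in units of its initial size; the step-by-step scheme (the explicit Euler method) is a swapped-in illustration, not the derivation the text has in mind.

```python
import math


def euler_growth(p0, rate, years, steps):
    """Advance the stock in small time steps, adding rate * stock * dt each time."""
    dt = years / steps
    p = p0
    for _ in range(steps):
        p += rate * p * dt
    return p


def exact_growth(p0, rate, years):
    """Stock function of the model: p0 * exp(rate * years)."""
    return p0 * math.exp(rate * years)


approx = euler_growth(1.0, 1.0, 10.0, 100_000)
exact = exact_growth(1.0, 1.0, 10.0)
print(approx, exact, approx / exact)
```

The smaller the time step, the closer the stepwise result comes to the exponential stock function, which is what solving the differential equation delivers in closed form.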

Numerical methods

The property of a function to be differentiable is advantageous in many applications, since this gives the function more structure. One example is solving equations. In some mathematical applications, it is necessary to find the value of one (or more) unknown

Another advantage of differential calculus is that in many cases complicated functions, such as roots or even sine and cosine, can be well approximated using simple calculation rules such as addition and multiplication. If the function is easy to evaluate at an adjacent value, this is of great benefit. For example, if an approximation for the number

because it is proved that
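Both uses of the derivative described in this section can be sketched briefly. The numbers 4.1 and 2 are invented examples, and Newton's method is named here as one standard derivative-based way of solving equations, which is an assumption about what the text refers to.

```python
import math


def linear_sqrt(a, h):
    """Linearization of the square root at a: sqrt(a + h) ~ sqrt(a) + h / (2 sqrt(a))."""
    return math.sqrt(a) + h / (2 * math.sqrt(a))


def newton(f, df, x0, steps=20):
    """Newton's method: repeatedly replace f by its tangent and solve for the zero."""
    x = x0
    for _ in range(steps):
        x = x - f(x) / df(x)
    return x


# sqrt(4.1) from the easily evaluated neighbouring value sqrt(4) = 2.
approx = linear_sqrt(4.0, 0.1)

# Solve x^2 - 2 = 0, i.e. compute sqrt(2), starting from the guess 1.
root = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, 1.0)

print(approx, math.sqrt(4.1))
print(root, math.sqrt(2.0))
```

The linear estimate only needs one division and one addition, and Newton's iteration uses the tangent slope in every step, so both rely on the function being differentiable.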

Pure mathematics

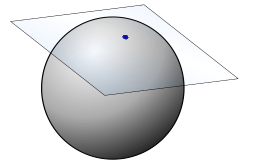

Differential calculus also plays an important role in pure mathematics as a core of calculus. An example is differential geometry, which deals with figures that have a differentiable surface (without kinks, etc.). For example, a plane can be placed tangentially on a spherical surface at any point. Illustratively, if you stand at a point on the earth, you will have the feeling that the earth is flat if you let your gaze wander in the tangential plane. In reality, however, the earth is only locally flat: The applied plane serves the simplified representation (by linearization) of the more complicated curvature. Globally it has a completely different shape as a spherical surface.

The methods of differential geometry are extremely important for theoretical physics. Thus, phenomena such as curvature or spacetime can be described by methods of differential calculus. Also the question, what is the shortest distance between two points on a curved surface (for example the earth's surface), can be formulated and often answered with these techniques.

Differential calculus has also proved its worth in the study of numbers as such, i.e. within the framework of number theory, in analytic number theory. The basic idea of analytic number theory is to transform certain numbers about which one wants to learn something into functions. If these functions have "good properties" such as differentiability, one hopes to be able to draw conclusions about the original numbers via the structures that accompany them. It has often proven useful to move from real to complex numbers in order to perfect analysis (see also complex analysis), i.e. to study functions over a larger range of numbers. An example is the analysis of the Fibonacci numbers

i.e. of an "infinitely long" polynomial (a so-called power series) whose coefficients are exactly the Fibonacci numbers. For sufficiently small numbers

The denominator polynomial

with the golden ratio
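The Fibonacci example can be explored numerically. The sketch below assumes the standard closed form z / (1 − z − z²) for the power series with Fibonacci coefficients and the well-known formula of Binet involving the golden ratio; both are standard facts rather than reconstructions of the formulas lost from this text.

```python
import math


def fibonacci(n):
    """n-th Fibonacci number with F(0) = 0, F(1) = 1."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


def power_series(z, terms):
    """Partial sum of the series whose coefficients are the Fibonacci numbers."""
    return sum(fibonacci(n) * z ** n for n in range(terms))


def closed_form(z):
    """Rational function represented by the series for sufficiently small |z|."""
    return z / (1.0 - z - z * z)


PHI = (1.0 + math.sqrt(5.0)) / 2.0  # golden ratio


def binet(n):
    """Fibonacci number from the golden ratio, rounded to the nearest integer."""
    return round((PHI ** n - (1.0 - PHI) ** n) / math.sqrt(5.0))


print(power_series(0.1, 60), closed_form(0.1))
print([binet(n) for n in range(10)])
```

The zeros of the denominator 1 − z − z² are determined by the golden ratio, which is how a statement about a function turns into an explicit formula for the numbers themselves.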

The higher dimensional case

The differential calculus can be generalized to the case of "higher dimensional functions". This means that both input and output values of the function are not merely part of the one-dimensional real number ray, but also points of a higher-dimensional space. An example is the rule

between two-dimensional spaces in each case. The function understanding as a table remains identical here, only that this has "clearly more" entries with "four columns"

after itself. This then mimics the entire function

with which all "gradients" are gathered in a so-called matrix. This term is also called Jacobi matrix or functional matrix.
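How such a matrix of slopes arises can be sketched numerically. The map (x, y) ↦ (x² + y, x·y) is an invented example, not the rule used in the text, and `jacobian` is a hypothetical helper that approximates every partial derivative by a central difference.

```python
def example_map(x, y):
    # Hypothetical function from the plane to the plane.
    return (x * x + y, x * y)


def jacobian(func, point, h=1e-6):
    """Approximate the Jacobi matrix of func at point by central differences."""
    n = len(point)
    m = len(func(*point))
    matrix = [[0.0] * n for _ in range(m)]
    for j in range(n):
        plus, minus = list(point), list(point)
        plus[j] += h    # nudge only the j-th input up
        minus[j] -= h   # and down
        f_plus, f_minus = func(*plus), func(*minus)
        for i in range(m):
            matrix[i][j] = (f_plus[i] - f_minus[i]) / (2 * h)
    return matrix


J = jacobian(example_map, (2.0, 3.0))
for row in J:
    print([round(entry, 6) for entry in row])
```

Row i, column j holds the rate at which output i changes when only input j is varied. For this example the exact matrix at (2, 3) has the rows (4, 1) and (3, 2).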

Example: If above

For example

and

In the very general case, if one has

Tangential plane placed at a point on a spherical surface

Diagram of the time-distance function

At the time of 25 seconds, the car is currently moving at approx. 7.62 meters per second, converted to 27.43 km/h. This value corresponds to the slope of the tangent of the time-distance curve at the corresponding point. Further detailed explanations of this geometric interpretation are given below.

During speed checks, instantaneous speeds are strongly approximated


Moving objects, such as cars, can be assigned a time-distance function. This function tabulates how far the car has moved at each point in time. The derivative of this function in turn tabulates the speed of the car at each point in time, for example at the time the photo was taken.

History

→ Main article: Infinitesimal calculus#History of infinitesimal calculus.

The problem of differential calculus emerged as the tangent problem from the 17th century on. An obvious approach was to approximate the tangent to a curve by its secant over a finite (here meaning greater than zero) but arbitrarily small interval. The technical difficulty to be overcome was how to calculate with such an infinitesimally small interval width. The first beginnings of differential calculus go back to Pierre de Fermat. Around 1628 he developed a method to determine extreme points of algebraic terms and to calculate tangents to conic sections and other curves. His "method" was purely algebraic. Fermat did not consider limit processes and certainly not derivatives. Nevertheless, his "method" can be interpreted and justified by modern means of analysis, and it has been shown to have inspired mathematicians such as Newton and Leibniz. A few years later, René Descartes chose a different algebraic approach by fitting a circle to a curve. The circle intersects the curve at two points close to each other, unless it touches the curve. This approach enabled him to determine the slope of the tangent line for special curves.

At the end of the 17th century, Isaac Newton and Gottfried Wilhelm Leibniz succeeded independently of each other in developing calculi that functioned without contradiction, using different approaches. While Newton approached the problem physically via the instantaneous velocity problem, Leibniz solved it geometrically via the tangent problem. Their work allowed abstraction from purely geometric notions and is therefore considered the beginning of calculus. They became known mainly through the book Analyse des Infiniment Petits pour l'Intelligence des Lignes Courbes by the nobleman Guillaume François Antoine, Marquis de L'Hospital, who took private lessons with Johann I Bernoulli and so published his research on calculus. It states:

"The scope of this calculus is immeasurable: it can be applied to mechanical as well as geometric curves; root signs cause it no difficulty and are often even pleasant to handle; it can be extended to as many variables as one could wish; the comparison of infinitely small quantities of all kinds succeeds effortlessly. And it permits an infinite number of surprising discoveries about curved as well as rectilinear tangents, questions De maximis & minimis, points of inflection and peaks of curves, evolutes, reflection and refraction caustics, &c. as we shall see in this book."

The differentiation rules known today are based primarily on the works of Leonhard Euler, who coined the concept of function.

Newton and Leibniz worked with arbitrarily small positive numbers. This was already criticized by contemporaries as illogical, for example by George Berkeley in the polemic The Analyst; or, a Discourse Addressed to an Infidel Mathematician. It was not until the 1960s that Abraham Robinson was able to place this use of infinitesimal quantities on a secure axiomatic foundation with the development of non-standard analysis. Despite the prevailing uncertainty, however, differential calculus was developed further, primarily because of its numerous applications in physics and other areas of mathematics. Symptomatic of the time was the prize question announced by the Prussian Academy of Sciences in 1784:

"... Higher geometry frequently uses infinitely large and infinitely small quantities; however, the ancient scholars carefully avoided the infinite, and some famous analysts of our time confess that the words infinite quantity are contradictory. The Academy, therefore, requires that one explain how so many correct propositions have arisen from a contradictory assumption, and that one give a safe and clear fundamental term which is likely to replace the infinite without making the calculation too difficult or too long ..."

It was not until the beginning of the 19th century that Augustin-Louis Cauchy succeeded in giving differential calculus the logical rigor common today by departing from infinitesimals and defining the derivative as the limit of secant slopes (difference quotients). The definition of the limit used today was finally formulated by Karl Weierstrass in 1861.

Gottfried Wilhelm Leibniz

Isaac Newton

Derivative calculation

Calculating the derivative of a function is called differentiation; one also says that the function is differentiated.

To calculate the derivative of elementary functions (e.g.

Derivatives of elementary functions

For the exact calculation of the derivative functions of elementary functions, the difference quotient is formed and evaluated in the limit $h \to 0$.

Natural powers

The case

In general, for a natural number

where the polynomial

because obviously

Exponential function

For any $a > 0$, the corresponding exponential function $\exp_a(x) = a^x$ satisfies the functional equation

$$a^{x+y} = a^x \cdot a^y.$$

This is due to the fact that a product of $x$ factors $a$ with $y$ factors $a$ consists of $x + y$ factors $a$ in total. From this property it quickly becomes apparent that its derivative must agree with the original function up to a constant factor. Namely, it holds that

Accordingly, only the existence of the derivative in

with the natural logarithm

Logarithm

For the logarithm

are used. This arises from the consideration: If u factors of a produce the value x and v factors of a produce the value y, so if

Besides

It is the inverse function of the natural exponential function, and its graph is obtained by mirroring the graph of the function

Sine and cosine

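The differentiation rules for sine and cosine require the addition theorems

$$\sin(x + y) = \sin(x)\cos(y) + \cos(x)\sin(y), \qquad \cos(x + y) = \cos(x)\cos(y) - \sin(x)\sin(y)$$

and the relations

$$\lim_{h \to 0} \frac{\sin(h)}{h} = 1, \qquad \lim_{h \to 0} \frac{\cos(h) - 1}{h} = 0.$$

These can all be proved by elementary geometry on the basis of the definitions of sine and cosine. This yields

$$\sin'(x) = \lim_{h \to 0} \frac{\sin(x + h) - \sin(x)}{h} = \lim_{h \to 0} \left( \sin(x)\,\frac{\cos(h) - 1}{h} + \cos(x)\,\frac{\sin(h)}{h} \right) = \cos(x).$$

Similarly, one infers

$$\cos'(x) = -\sin(x).$$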
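The well-known derivatives of the elementary functions treated in this section can be compared with difference quotients for a small step. The sketch below is a plausibility check at one arbitrarily chosen point, not a proof; `central_difference` is a hypothetical helper.

```python
import math


def central_difference(f, x, h=1e-5):
    """Symmetric difference quotient as a numerical approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


# (name, function, derivative according to the standard rules)
checks = [
    ("x^3", lambda x: x ** 3, lambda x: 3 * x ** 2),
    ("2^x", lambda x: 2.0 ** x, lambda x: math.log(2.0) * 2.0 ** x),
    ("ln x", math.log, lambda x: 1.0 / x),
    ("sin x", math.sin, math.cos),
    ("cos x", math.cos, lambda x: -math.sin(x)),
]

x0 = 1.3  # arbitrary test point inside the domain of all five functions
deviations = {name: abs(central_difference(f, x0) - df(x0)) for name, f, df in checks}
max_error = max(deviations.values())

for name, dev in deviations.items():
    print(f"{name:>6}: deviation {dev:.2e}")
```

The deviations are on the order of the rounding and truncation error of the difference quotient, which is consistent with the rules but of course depends on the chosen step size.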

Differentiation rules

Derivatives of composite functions, e.g.

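The following rules can be used to reduce the derivatives of composite functions to derivatives of simpler functions. Let $f$, $g$ and $h$ be functions that are differentiable at the points in question, and let $a$ and $n$ be real constants.

Constant function

$$(a)' = 0$$

Factor rule

$$(a f)' = a f'$$

Sum rule

$$(g \pm h)' = g' \pm h'$$

Product rule

$$(g h)' = g' h + g h'$$

Quotient rule

$$\left( \frac{g}{h} \right)' = \frac{g' h - g h'}{h^2}$$

Reciprocal rule

$$\left( \frac{1}{h} \right)' = -\frac{h'}{h^2}$$

Power rule

$$(x^n)' = n x^{n-1}$$

Chain rule

$$(g \circ h)'(x) = g'(h(x))\,h'(x)$$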
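The product, quotient and chain rules in their standard form can be checked against difference quotients. The sketch assumes the illustrative functions sin(x) and x² + 1, which are not taken from the text.

```python
import math


def central_difference(f, x, h=1e-5):
    """Symmetric difference quotient as a numerical approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


g, dg = math.sin, math.cos           # outer function and its derivative


def inner(x):
    return x * x + 1.0               # inner function, never zero


def d_inner(x):
    return 2.0 * x


x0 = 0.7  # arbitrary test point

# Values predicted by the rules.
product_rule = dg(x0) * inner(x0) + g(x0) * d_inner(x0)
quotient_rule = (dg(x0) * inner(x0) - g(x0) * d_inner(x0)) / inner(x0) ** 2
chain_rule = dg(inner(x0)) * d_inner(x0)

# Direct numerical differentiation of the composite functions.
product_numeric = central_difference(lambda x: g(x) * inner(x), x0)
quotient_numeric = central_difference(lambda x: g(x) / inner(x), x0)
chain_numeric = central_difference(lambda x: g(inner(x)), x0)

print(product_rule, product_numeric)
print(quotient_rule, quotient_numeric)
print(chain_rule, chain_numeric)
```

In each pair the rule-based value and the numerical value agree up to the accuracy of the difference quotient.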

Inverse rule

If

If we mirror a point of

Logarithmic derivative

From the chain rule it follows for the derivative of the natural logarithm of a function

A fraction of the form

Differentiation of power and exponential functions

To

Other elementary functions

With the rules of the calculus at hand, derivative functions can be determined for many further elementary functions. This concerns in particular important compositions as well as the inverse functions of important elementary functions.

General powers

For any value

In particular, this results in differentiation rules for general root functions: For any natural number $n$, we have $\sqrt[n]{x} = x^{\frac{1}{n}}$

The case

Tangent and cotangent

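With the help of the quotient rule, derivatives of tangent and cotangent can also be determined via the differentiation rules for sine and cosine. It holds that

$$\tan'(x) = \frac{\cos^2(x) + \sin^2(x)}{\cos^2(x)} = \frac{1}{\cos^2(x)},$$

where the Pythagorean theorem $\sin^2(x) + \cos^2(x) = 1$ was used. Analogously,

$$\cot'(x) = -\frac{1}{\sin^2(x)}.$$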

Arc sine and arc cosine

Arc sine and arc cosine define inverse functions of sine and cosine. Inside

![[-1,1]](https://www.alegsaonline.com/image/51e3b7f14a6f70e614728c583409a0b9a8b9de01.svg)

Note that the main branch of the arc sine was considered and the derivative at the margins

in the open interval

Arc tangent and arc cotangent

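Arc tangent and arc cotangent define inverse functions of tangent and cotangent. In their domain of definition $\mathbb{R}$, the inverse rule gives for the arc tangent

$$\arctan'(x) = \frac{1}{1 + x^2}.$$

For the arc cotangent, it yields

$$\operatorname{arccot}'(x) = -\frac{1}{1 + x^2}.$$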

Both derivative functions, like arc tangent and arc cotangent themselves, are defined everywhere in the real numbers.

Higher derivatives

If the derivative

If the first derivative with respect to time is a velocity, the second derivative can be interpreted as acceleration and the third derivative as jerk.

When politicians comment on the "decrease in the increase in the unemployment rate," they talk about the second derivative (change in the increase) to put the statement of the first derivative (increase in the unemployment rate) into perspective.

Higher derivatives can be written in several ways:

or in the physical case (for a derivative with respect to time)

For the formal denotation of arbitrary derivatives

Higher differential operators

→ Main article: Differentiation class

If is

and thus in general for

Here denotes

because obviously every function which is at least

exemplary examples for functions from

Higher derivation rules

Leibniz's rule

The derivative of

The expressions of the form

Faà di Bruno formula

This formula allows the closed representation of the

Taylor formulas with remainder

→ Main article: Taylor formula

If

with the

and the

with a ξ

However, not every smooth function can be represented by its Taylor series, see below.
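The role of the remainder can be illustrated with the exponential function, whose Taylor polynomial at 0 and whose Lagrange form of the remainder are standard. The sketch assumes expansion point 0 and bounds the remainder by the largest value the derivative can take between 0 and x.

```python
import math


def taylor_exp(x, n):
    """Taylor polynomial of degree n of exp at the expansion point 0."""
    return sum(x ** k / math.factorial(k) for k in range(n + 1))


def lagrange_bound(x, n):
    """Upper bound for |exp(x) - taylor_exp(x, n)| from the Lagrange remainder.

    The (n+1)-th derivative of exp is exp itself; between 0 and x it is at
    most exp(max(0, x)).
    """
    return math.exp(max(0.0, x)) * abs(x) ** (n + 1) / math.factorial(n + 1)


for n in (1, 3, 5, 7):
    error = abs(math.exp(1.0) - taylor_exp(1.0, n))
    print(f"degree {n}: error {error:.2e}, bound {lagrange_bound(1.0, n):.2e}")
```

The actual error always stays below the bound, and both shrink rapidly as the degree grows, which is why the exponential function is represented by its Taylor series.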

Smooth functions

→ Main article: Smooth function

Functions which are differentiable arbitrarily often at every point of their domain are also called smooth functions. The set of all functions in an open set

where denotes

Analytical functions

→ Main article: Analytic function

The above notion of smoothness can be strengthened further. A function

for all

of a non-analytic smooth function. All real derivatives of this function vanish in 0, but it is not the zero function. Therefore, it is not represented by its Taylor series at the point 0.

Applications

An important application of differential calculus in one variable is the determination of extreme values, usually for the optimization of processes, for example with regard to cost, material or energy expenditure. Differential calculus provides a method to find extreme points without a laborious numerical search. One makes use of the fact that at a local extreme

A function can have a maximum or minimum value without the derivative existing at this point, but in this case the differential calculus cannot be used. Therefore, in the following, only at least locally differentiable functions will be considered. As an example we take the polynomial function

The figure shows the course of the graphs of

Horizontal tangents

Given a function

Geometric interpretation of this theorem of Fermat is that the graph of the function in local extreme points has a tangent parallel to the

Thus, for differentiable functions, it is a necessary condition for the existence of an extreme point that the derivative at the point in question takes the value 0:

Conversely, however, the fact that the derivative has the value zero at a point does not mean that it is an extreme point; there could also be a saddle point, for example. A list of different sufficient criteria, whose fulfillment guarantees an extreme point, can be found in the article extreme value. These criteria mostly use the second or even higher derivatives.
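The difference between the necessary condition and a sufficient criterion can be sketched as follows. The polynomials x³ − 3x, x³ and x⁴ are invented examples, and `classify` is a hypothetical helper that uses the second derivative and, if that vanishes, falls back to the sign change of the first derivative.

```python
def classify(df, ddf, x, eps=1e-3):
    """Classify a point x using the first derivative df and second derivative ddf."""
    if abs(df(x)) > 1e-9:
        return "not critical"
    second = ddf(x)
    if second > 0:
        return "local minimum"
    if second < 0:
        return "local maximum"
    # Second derivative is zero: inspect the sign of the slope on both sides.
    left, right = df(x - eps), df(x + eps)
    if left < 0 < right:
        return "local minimum"
    if left > 0 > right:
        return "local maximum"
    return "no extremum (e.g. saddle point)"


# f(x) = x^3 - 3x has horizontal tangents at x = -1 and x = 1.
cubic = (lambda x: 3 * x ** 2 - 3, lambda x: 6 * x)
# f(x) = x^3 has a horizontal tangent at 0 but no extremum there.
pure_cube = (lambda x: 3 * x ** 2, lambda x: 6 * x)
# f(x) = x^4 has a minimum at 0 although the second derivative vanishes.
quartic = (lambda x: 4 * x ** 3, lambda x: 12 * x ** 2)

print(classify(*cubic, -1.0), "|", classify(*cubic, 1.0))
print(classify(*pure_cube, 0.0))
print(classify(*quartic, 0.0))
```

The middle example shows why a vanishing derivative alone is not enough: the slope is zero at 0 but positive on both sides, so the function keeps increasing.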

Condition in the example

In the example

It follows that

Since the sequence

consists alternately of small and large values, there must be a high and a low point in this range. According to Fermat's theorem, the curve has a horizontal tangent in these points, so only the points determined above are possible: So is

Curve discussion

→ Main article: Curve discussion

With the help of the derivatives, further properties of the function can be analyzed, such as the existence of inflection and saddle points, convexity, or the monotonicity already mentioned above. Carrying out these investigations is the subject of the curve discussion.

Term transformations

Besides determining the slope of functions, differential calculus is, through its rules, an essential aid in transforming terms. Here one detaches oneself from any connection with the original meaning of the derivative as a slope. If two terms have been recognized as equal, further (sought-after) identities can be obtained from them by differentiation. An example may clarify this:

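From the known partial sum

$$1 + x + x^2 + \dots + x^n = \frac{1 - x^{n+1}}{1 - x}$$

of the geometric series, the sum

$$1 + 2x + 3x^2 + \dots + n x^{n-1}$$

is to be calculated. This is done by differentiation with the help of the quotient rule:

$$1 + 2x + 3x^2 + \dots + n x^{n-1} = \frac{n x^{n+1} - (n+1) x^n + 1}{(1 - x)^2}$$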

Alternatively, the identity is obtained by multiplying out and then triple telescoping, but this is not so easy to see through.
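The identity obtained by differentiating the geometric partial sum, namely 1 + 2x + 3x² + … + n·xⁿ⁻¹ = (n·xⁿ⁺¹ − (n+1)·xⁿ + 1) / (1 − x)² for x ≠ 1, can be checked numerically. The function names are illustrative.

```python
import math


def direct_sum(x, n):
    """Left-hand side: 1 + 2x + 3x^2 + ... + n x^(n-1), added term by term."""
    return sum(k * x ** (k - 1) for k in range(1, n + 1))


def closed_form(x, n):
    """Right-hand side: derivative of (1 - x^(n+1)) / (1 - x) via the quotient rule."""
    return (n * x ** (n + 1) - (n + 1) * x ** n + 1) / (1 - x) ** 2


for x in (0.5, -0.3, 2.0):
    for n in (1, 4, 9):
        print(x, n, direct_sum(x, n), closed_form(x, n))
```

Both sides agree for every tested pair, while the closed form needs only a fixed number of operations regardless of n.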

Central statements of the differential calculus of one variable

Fundamental theorem of analysis

→ Main article: Fundamental theorem of calculus

Leibniz's essential achievement was the realization that integration and differentiation are related. He formulated this in the main theorem of differential and integral calculus, also called the fundamental theorem of analysis, which states:

If

continuously differentiable, and its derivative

Herewith a guidance for integrating is given: We are looking for a function

Mean value theorem of the differential calculus

→ Main article: Mean value theorem of differential calculus

Another central theorem of differential calculus is the mean value theorem, which was proved by Cauchy in 1821.

Let $f\colon [a,b] \to \mathbb{R}$ be a function that is continuous on the closed interval $[a,b]$ (with $a < b$) and differentiable on the open interval $(a,b)$. Under these conditions there is at least one $\xi \in (a,b)$ such that

$$f'(\xi) = \frac{f(b) - f(a)}{b - a}$$

holds. Geometrically: between two intersections of a secant with the curve there is a point on the curve whose tangent is parallel to the secant.
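The point promised by the mean value theorem can be located numerically in a simple case. The sketch assumes the function x³ on the interval [0, 2], where the derivative is increasing, so bisection on "tangent slope minus secant slope" is sufficient; this is an illustration, not a general algorithm.

```python
import math


def f(x):
    return x ** 3


def df(x):
    return 3 * x ** 2


a, b = 0.0, 2.0
secant_slope = (f(b) - f(a)) / (b - a)


def slope_gap(x):
    """Difference between the tangent slope at x and the secant slope."""
    return df(x) - secant_slope


# Bisection: slope_gap is negative at a and positive at b.
lo, hi = a, b
while hi - lo > 1e-12:
    mid = (lo + hi) / 2
    if slope_gap(mid) < 0:
        lo = mid
    else:
        hi = mid
xi = (lo + hi) / 2

print(secant_slope, xi, df(xi))
```

Here the secant has slope 4 and the tangent is parallel to it at ξ = 2/√3, which lies strictly inside the interval as the theorem requires.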

Monotonicity and differentiability

If

- The function

is strictly monotonic.

- It is

with any

- The inverse function

exists, is differentiable and satisfies

.

From this it can be deduced that a continuously differentiable function

However, Hadamard's theorem provides a criterion for showing in some cases that a continuously differentiable function

The rule of de L'Hospital

→ Main article: Rule of de L'Hospital

As an application of the mean value theorem, a relation can be derived that in some cases allows the computation of indeterminate expressions of the form

Let

or

Then applies

if the last limit in

Differential calculus with function sequences and integrals

In many analytical applications one is not dealing with a function

Limit functions

Given a convergent differentiable sequence of functions

From this fact at least the following can be concluded: Let ![{\displaystyle f_{n}\colon [a,b]\to \mathbb {R} }](https://www.alegsaonline.com/image/b32ac944f464748dfb697057d3788497bfabb6fe.svg) be a sequence of continuously differentiable functions such that the sequence of derivatives ![{\displaystyle f_{n}'\colon [a,b]\to \mathbb {R} }](https://www.alegsaonline.com/image/fa20a73b34f9d8b5ab0dd50731c0f34dcabbb59f.svg) converges uniformly to a function ![{\displaystyle g\colon [a,b]\to \mathbb {R} }](https://www.alegsaonline.com/image/38132af5ea7cd916293fe93f29187bd461a5e270.svg). Suppose in addition that the sequence $f_n(x_0)$ converges for at least one point ![x_{0}\in [a,b]](https://www.alegsaonline.com/image/06636653315ee7c3b5dc9bdb6ac3fb8cccadc145.svg). Then ![{\displaystyle f_{n}\colon [a,b]\to \mathbb {R} }](https://www.alegsaonline.com/image/b32ac944f464748dfb697057d3788497bfabb6fe.svg) converges uniformly to a differentiable function ![f\colon [a,b]\to \mathbb {R}](https://www.alegsaonline.com/image/c5ab61178bf5349838758ffe3d96135406ed0245.svg), and $f' = g$ holds.
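Why a condition on the derivatives is needed can be seen in a small numerical sketch. The sequence $f_n(x) = \sin(nx)/\sqrt{n}$ on $[0,1]$ is an assumed textbook-style example, not taken from the article: the functions converge uniformly to zero, yet the derivatives at $x=0$ grow without bound.

```python
import math


def f_n(n, x):
    """n-th function of the sequence: sin(n x) / sqrt(n)."""
    return math.sin(n * x) / math.sqrt(n)


def df_n(n, x):
    """Derivative of f_n with respect to x: sqrt(n) * cos(n x)."""
    return math.sqrt(n) * math.cos(n * x)


grid = [i / 1000 for i in range(1001)]  # sample points in [0, 1]

# Largest absolute value on the grid (bounded by 1/sqrt(n), so it shrinks)
sup_values = {n: max(abs(f_n(n, x)) for x in grid) for n in (1, 100, 10000)}
# Derivative at x = 0 (equals sqrt(n), so it grows)
slopes_at_zero = {n: df_n(n, 0.0) for n in (1, 100, 10000)}

for n in (1, 100, 10000):
    print(n, sup_values[n], slopes_at_zero[n])
```

The limit function is the zero function with derivative 0, while the derivatives of the sequence members do not converge at all. Uniform convergence of the functions alone therefore says nothing about the derivatives.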

Swap with infinite series

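Let ![{\displaystyle f_{n}\colon [a,b]\to \mathbb {R} }](https://www.alegsaonline.com/image/b32ac944f464748dfb697057d3788497bfabb6fe.svg) be a sequence of continuously differentiable functions such that the series $\sum_{n=1}^{\infty} \|f_n'\|_{\infty}$ converges, where ![{\displaystyle ||f_{n}'||_{\infty }:=\sup _{x\in [a,b]}|f_{n}'(x)|}](https://www.alegsaonline.com/image/c15371c13ae76eca92cdd6495b526d331581d44c.svg) denotes the supremum norm. If, in addition, the series $\sum_{n=1}^{\infty} f_n(x_0)$ converges for some ![x_{0}\in [a,b]](https://www.alegsaonline.com/image/06636653315ee7c3b5dc9bdb6ac3fb8cccadc145.svg), then the series $\sum_{n=1}^{\infty} f_n$ converges uniformly to a differentiable function, and

$\left(\sum_{n=1}^{\infty} f_n\right)' = \sum_{n=1}^{\infty} f_n'$

holds.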

The result goes back to Karl Weierstrass.

Swap with integration

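Let ![{\displaystyle f\colon [a,b]\times [c,d]\to \mathbb {R} }](https://www.alegsaonline.com/image/7d985fd7a6c6afcc39f2bfa732015f9c55ebf223.svg) be a continuous function such that the partial derivative

$\frac{\partial f}{\partial x}(x,t)$

exists and is continuous. Then

$F(x) = \int_c^d f(x,t)\,\mathrm{d}t$

is also differentiable, and

$F'(x) = \int_c^d \frac{\partial f}{\partial x}(x,t)\,\mathrm{d}t$

holds.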

This rule is also called Leibniz's rule.
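Differentiation under the integral sign can be checked numerically. The sketch below assumes the example integrand $f(x,t)=\sin(xt)$ on $t\in[0,1]$ and uses Simpson's rule for the integrals; both choices, and the helper names, are illustrative assumptions.

```python
import math


def simpson(g, a, b, n=200):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = g(a) + g(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * g(a + i * h)
    return total * h / 3


def F(x):
    """F(x) = integral of sin(x t) over t in [0, 1]."""
    return simpson(lambda t: math.sin(x * t), 0.0, 1.0)


def dF_leibniz(x):
    """Integral of the partial derivative d/dx sin(x t) = t cos(x t)."""
    return simpson(lambda t: t * math.cos(x * t), 0.0, 1.0)


x = 1.3
h = 1e-5
dF_numeric = (F(x + h) - F(x - h)) / (2 * h)  # difference quotient of F
print(dF_numeric, dF_leibniz(x))
```

Differentiating the integral directly and integrating the partial derivative give the same value up to numerical error.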

Differential calculus over the complex numbers

So far, only real functions have been discussed. However, all the rules treated so far carry over to functions with complex inputs and values. The reason is that the complex numbers, like the real numbers, form a field, so that addition, subtraction, multiplication and division are defined there.

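Thus, if $U\subseteq \mathbb{C}$ is open, a function $f\colon U\to \mathbb{C}$ is called complex differentiable at a point $z\in U$ if the limit

$\lim_{h\to 0} \frac{f(z+h)-f(z)}{h}$

exists. This is denoted by $f'(z)$ and called the (complex) derivative of $f$ at $z$.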

In spite of (or precisely because of) the much more restrictive concept of complex differentiability, all the usual calculation rules of real differential calculus carry over to complex differential calculus. This includes the differentiation rules, for example the sum, product and chain rules, as well as the rule for inverse functions. Many functions, such as powers, the exponential function or the logarithm, have natural extensions to the complex numbers and retain their characteristic properties. From this point of view, complex differential calculus is identical to its real analogue.
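How restrictive complex differentiability is can be seen by forming the difference quotient with increments from different directions. In the following sketch, the two test functions ($z^2$ and complex conjugation) and the helper `difference_quotient` are assumptions chosen for illustration.

```python
def difference_quotient(f, z, h):
    """Complex difference quotient (f(z + h) - f(z)) / h."""
    return (f(z + h) - f(z)) / h


square = lambda w: w * w                # complex differentiable everywhere
conjugate = lambda w: w.conjugate()     # nowhere complex differentiable

z = 1 + 2j
h_real = 1e-6        # approach z along the real axis
h_imag = 1e-6j       # approach z along the imaginary axis

sq_real = difference_quotient(square, z, h_real)
sq_imag = difference_quotient(square, z, h_imag)
cj_real = difference_quotient(conjugate, z, h_real)
cj_imag = difference_quotient(conjugate, z, h_imag)

print(sq_real, sq_imag)   # both close to 2z = 2 + 4j
print(cj_real, cj_imag)   # close to 1 and -1: no common limit
```

For $z^2$ both directions give approximately $2z$. For conjugation the quotient depends on the direction, so the limit does not exist, although the function is smooth when viewed as a real map of the plane.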

If a function

Differential calculus of multidimensional functions

All previous explanations were based on a function of one variable (i.e. with a real or complex number as argument). Functions that map vectors to vectors or vectors to numbers can also have a derivative. In these cases, however, a tangent to the function graph is no longer uniquely determined, since there are many different directions. An extension of the previous concept of derivative is therefore necessary.

Multidimensional differentiability and the Jacobi matrix

Directional derivative

→ Main article: Directional derivative

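Let $U\subseteq \mathbb{R}^n$ be open, $f\colon U\to \mathbb{R}^m$ a function and $v\in \mathbb{R}^n$ a (direction) vector. The directional derivative of $f$ at $x\in U$ in the direction $v$ is defined as the limit

$D_v f(x) = \lim_{h\to 0} \frac{f(x+hv)-f(x)}{h}$,

provided it exists.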

There is a connection between the directional derivative and the Jacobi matrix. If is

where the notation

As an example, consider a function

Partial derivatives

→ Main article: Partial derivative

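The directional derivatives in the special directions $e_1,\dots ,e_n$, namely those of the coordinate axes, are called partial derivatives.

In total, a function of $n$ variables has $n$ partial derivatives $\frac{\partial f}{\partial x_1},\dots ,\frac{\partial f}{\partial x_n}$.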

The individual partial derivatives of a function can also be written as a gradient or nabla vector:

$\nabla f = \left(\frac{\partial f}{\partial x_1},\dots ,\frac{\partial f}{\partial x_n}\right)$

The gradient is usually written as a row vector (i.e. "lying"). In some applications, however, especially in physics, the notation as a column vector (i.e. "standing") is also common. Partial derivatives can themselves be differentiable, and their partial derivatives can then be arranged in the so-called Hessian matrix.
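The relation between partial derivatives, the gradient and directional derivatives can be sketched numerically. The scalar field $f(x,y) = x^2 y + y^3$, the point $(1,2)$ and the direction $(0.6, 0.8)$ are assumed example data, and all derivatives are approximated by central difference quotients.

```python
def f(p):
    """Hypothetical scalar field f(x, y) = x^2 y + y^3."""
    x, y = p
    return x * x * y + y ** 3


def gradient(func, p, h=1e-6):
    """Partial derivatives of func at p, one coordinate direction at a time."""
    grad = []
    for i in range(len(p)):
        plus, minus = list(p), list(p)
        plus[i] += h
        minus[i] -= h
        grad.append((func(plus) - func(minus)) / (2 * h))
    return grad


def directional_derivative(func, p, v, h=1e-6):
    """Difference quotient of func along the line p + t v."""
    plus = [pi + h * vi for pi, vi in zip(p, v)]
    minus = [pi - h * vi for pi, vi in zip(p, v)]
    return (func(plus) - func(minus)) / (2 * h)


point = [1.0, 2.0]
v = [0.6, 0.8]
grad = gradient(f, point)                       # approximately [4, 13]
d_v = directional_derivative(f, point, v)       # derivative along v
dot = sum(g * vi for g, vi in zip(grad, v))     # gradient times direction
print(grad, d_v, dot)
```

For a differentiable function the directional derivative equals the product of the gradient with the direction vector, here approximately 12.8 in both computations.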

Total differentiability

→ Main article: Total differentiability

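A function $f\colon U\to \mathbb{R}^m$, where $U\subseteq \mathbb{R}^n$ is an open set, is called totally differentiable at a point $x_0\in U$ if a linear mapping $L\colon \mathbb{R}^n\to \mathbb{R}^m$ exists such that

$\lim_{h\to 0} \frac{f(x_0+h)-f(x_0)-L(h)}{\|h\|} = 0$

is valid. For the one-dimensional case this definition agrees with the one given above. The linear mapping $L$ is uniquely determined if it exists; it is called the total derivative of $f$ at $x_0$ and is represented by the Jacobi matrix.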

The following relationship exists between the partial derivatives and the total derivative: If the total derivative exists in a point, then all partial derivatives also exist there. In this case the partial derivatives agree with the coefficients of the Jacobi matrix:

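Conversely, the existence of partial derivatives at a point does not necessarily imply total differentiability, and not even continuity. However, if all partial derivatives exist in a neighbourhood of the point and are continuous there, then the function is also totally differentiable at that point.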

Calculation rules of the multidimensional differential calculus

Chain rule

→ Main article: Multidimensional chain rule

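Let $U\subseteq \mathbb{R}^n$ and $V\subseteq \mathbb{R}^m$ be open, and let $f\colon U\to V$ be differentiable at $x\in U$ and $g\colon V\to \mathbb{R}^l$ be differentiable at $f(x)$. Then $g\circ f$ is differentiable at $x$, with

$J_{g\circ f}(x) = J_g(f(x))\cdot J_f(x)$.

In other words, the Jacobi matrix of the composition $g\circ f$ is the product of the Jacobi matrices of $g$ and $f$.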
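The statement that the Jacobi matrix of a composition is the product of the individual Jacobi matrices can be checked with a small sketch. The maps $f(x,y) = (xy,\, x+y)$ and $g(u,v) = (u^2,\, uv)$ are assumed examples, and the Jacobi matrices are approximated by central differences.

```python
def jacobian(func, p, h=1e-6):
    """Numerical Jacobi matrix of func at p as a list of rows."""
    m = len(func(p))
    columns = []
    for j in range(len(p)):
        plus, minus = list(p), list(p)
        plus[j] += h
        minus[j] -= h
        fp, fm = func(plus), func(minus)
        columns.append([(fp[i] - fm[i]) / (2 * h) for i in range(m)])
    # Transpose: entry (i, j) is the derivative of component i w.r.t. x_j.
    return [[columns[j][i] for j in range(len(p))] for i in range(m)]


def matmul(A, B):
    """Plain matrix product of two lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


f = lambda p: [p[0] * p[1], p[0] + p[1]]   # hypothetical inner map
g = lambda q: [q[0] ** 2, q[0] * q[1]]     # hypothetical outer map

x = [1.0, 2.0]
J_composition = jacobian(lambda p: g(f(p)), x)        # Jacobi matrix of g o f
J_product = matmul(jacobian(g, f(x)), jacobian(f, x))  # J_g(f(x)) * J_f(x)
print(J_composition)
print(J_product)
```

Both matrices are approximately [[8, 4], [8, 5]], the value obtained by differentiating $g\circ f$ by hand at the point $(1,2)$.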

Product rule

See also: Multidimensional product rule

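Using the chain rule, the product rule can be generalized to real-valued functions with a higher-dimensional domain. If $U\subseteq \mathbb{R}^n$ is open and $f,g\colon U\to \mathbb{R}$ are both differentiable at $x\in U$, then

$D(f\cdot g)(x) = g(x)\,Df(x) + f(x)\,Dg(x)$

or in gradient notation

$\nabla (f\cdot g)(x) = g(x)\,\nabla f(x) + f(x)\,\nabla g(x)$.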

Function sequences

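Let $U\subseteq \mathbb{R}^n$ be open and let $f_k\colon U\to \mathbb{R}^m$ be a sequence of continuously differentiable functions for which there are functions $f$ and $g$ on $U$ such that

- $f_k$ converges pointwise to $f$,

- $Df_k$ converges locally uniformly to $g$.

Then $f$ is continuously differentiable on $U$, and $Df = g$ holds.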

Implicit differentiation

→ Main article: Implicit differentiation

If a function

For the derivative of the function

where

Central theorems of the differential calculus of several variables

Schwarz's theorem

→ Main article: Schwarz's theorem

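The order of differentiation is irrelevant for the calculation of higher-order partial derivatives if all partial derivatives up to and including this order are continuous. Concretely, this means: if $U\subseteq \mathbb{R}^n$ is open and $f\colon U\to \mathbb{R}$ is twice continuously differentiable, then

$\frac{\partial^2 f}{\partial x_i\,\partial x_j} = \frac{\partial^2 f}{\partial x_j\,\partial x_i}$

holds for all $i,j$.

The theorem becomes false if the continuity of the second-order partial derivatives is omitted.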
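The remark that continuity of the second partial derivatives cannot be dropped can be illustrated numerically. The function below, $f(x,y) = xy\,(x^2-y^2)/(x^2+y^2)$ with $f(0,0)=0$, is a well-known counterexample that is assumed here and does not appear in the article. The nested difference quotients use a much smaller inner step than outer step so that the inner derivative is resolved first.

```python
def f(x, y):
    """Classic counterexample: mixed second partials differ at the origin."""
    if x == 0.0 and y == 0.0:
        return 0.0
    return x * y * (x * x - y * y) / (x * x + y * y)


def f_x(x, y, h=1e-8):
    """Numerical partial derivative of f with respect to x."""
    return (f(x + h, y) - f(x - h, y)) / (2 * h)


def f_y(x, y, h=1e-8):
    """Numerical partial derivative of f with respect to y."""
    return (f(x, y + h) - f(x, y - h)) / (2 * h)


k = 1e-3
# First differentiate with respect to x, then with respect to y, at (0, 0)
x_then_y = (f_x(0.0, k) - f_x(0.0, -k)) / (2 * k)
# First differentiate with respect to y, then with respect to x, at (0, 0)
y_then_x = (f_y(k, 0.0) - f_y(-k, 0.0)) / (2 * k)
print(x_then_y, y_then_x)
```

The two orders of differentiation give approximately -1 and +1 at the origin, because the second partial derivatives of this function are not continuous there.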

Implicit function theorem

→ Main article: Implicit function theorem

The implicit function theorem states that an equation between several variables can be solved locally for certain variables if the Jacobian matrix with respect to these variables is invertible at the point in question.

Mean value theorem

Via the higher dimensional mean value theorem it is possible to estimate a function along a connecting line if the derivatives there are known. Let

However, a more precise statement is possible for the case of real-valued functions in several variables, see also Mean Value Theorem for Real-Valued Functions of Several Variables.

Higher derivatives in the multidimensional case

Higher derivatives can also be considered for higher-dimensional functions. However, the concepts differ considerably from the classical case, which becomes apparent especially in the case of several variables. The Jacobi matrix already shows that the derivative of a higher-dimensional function at a point need not have the same shape as the function value there. If now the first derivative

where

Also the concepts of Taylor formulas and Taylor series can be generalized to the higher dimensional case, see also Taylor formula in the multidimensional or multidimensional Taylor series.

Applications

Error calculation

An application example of the differential calculus of several variables concerns the error calculation, for example in the context of experimental physics. While in the simplest case the quantity to be determined can be measured directly, it will usually be the case that it results from a functional relationship of quantities that are easier to measure. Typically, every measurement has a certain uncertainty, which one tries to quantify by specifying the measurement error.

For example, denotes

In general, if a quantity to be measured is functionally related to individually measured quantities

will lie. Here, the vector
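A linearized error estimate of this kind can be sketched as follows. The example is an assumption, not taken from the article: a resistance $R = U/I$ is computed from a measured voltage $U = 10 \pm 0.1$ and current $I = 2 \pm 0.05$, and the worst-case form $\sum_i |\partial f/\partial x_i|\,\Delta x_i$ of the first-order estimate is used.

```python
def partials(func, x, h=1e-6):
    """Central-difference estimates of all partial derivatives of func at x."""
    result = []
    for i in range(len(x)):
        plus, minus = list(x), list(x)
        plus[i] += h
        minus[i] -= h
        result.append((func(plus) - func(minus)) / (2 * h))
    return result


def propagated_error(func, x, dx):
    """First-order worst-case error: sum of |partial derivative| * error."""
    return sum(abs(p) * e for p, e in zip(partials(func, x), dx))


# Hypothetical measurement: R = U / I with U = 10 +/- 0.1, I = 2 +/- 0.05
resistance = lambda q: q[0] / q[1]
error = propagated_error(resistance, [10.0, 2.0], [0.1, 0.05])
print(resistance([10.0, 2.0]), error)
```

With these numbers the partial derivatives are $1/I = 0.5$ and $-U/I^2 = -2.5$, so the estimated error of the resistance is about $0.5\cdot 0.1 + 2.5\cdot 0.05 = 0.175$.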

Solution approximation of equation systems

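Many systems of equations of higher degree cannot be solved in closed algebraic form. In some cases, however, one can at least determine an approximate solution. If the system is given by $f(x)=0$ with a continuously differentiable function $f\colon \mathbb{R}^n\to \mathbb{R}^n$, then the iteration

$x_{k+1} = x_k - \left(J_f(x_k)\right)^{-1} f(x_k)$

converges under certain conditions to a zero. Here $\left(J_f\right)^{-1}$ denotes the inverse of the Jacobi matrix of $f$.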
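An iteration of this kind, the multidimensional Newton method, can be sketched for a system of two equations. The system $x^2+y^2=4$, $xy=1$, the starting point and the function name `newton_2d` are assumptions for illustration; the linear system in each step is solved with Cramer's rule instead of forming the inverse matrix explicitly.

```python
def newton_2d(F, J, x, y, steps=20):
    """Newton iteration for two equations in two unknowns.

    F returns the pair of residuals, J the 2x2 Jacobi matrix as two rows.
    Convergence is only expected for a starting point close to a zero.
    """
    for _ in range(steps):
        f1, f2 = F(x, y)
        (a, b), (c, d) = J(x, y)
        det = a * d - b * c
        # Solve J * (dx, dy) = (f1, f2) by Cramer's rule
        dx = (f1 * d - b * f2) / det
        dy = (a * f2 - c * f1) / det
        x, y = x - dx, y - dy
    return x, y


# Hypothetical system: x^2 + y^2 = 4 and x * y = 1
F = lambda x, y: (x * x + y * y - 4.0, x * y - 1.0)
J = lambda x, y: ((2 * x, 2 * y), (y, x))

root = newton_2d(F, J, 2.0, 0.5)
print(root, F(*root))
```

Starting from $(2, 0.5)$, the iteration settles at the solution $x = (\sqrt{6}+\sqrt{2})/2$, $y = (\sqrt{6}-\sqrt{2})/2$ of this example system.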

Extreme value tasks

Also for the curve discussion of functions

for all

Optimization under constraints

Often in optimization problems, the objective function

Example from microeconomics

In microeconomics, for example, different types of production functions are analyzed in order to gain insights for macroeconomic relationships. Here, the typical behavior of a production function is of particular interest: How does the dependent variable output

One basic type of production function is the neoclassical production function. It is characterized, among other things, by the fact that output increases with each additional input, but that the increases are decreasing. For example, let the Cobb-Douglas function for an economy be

is decisive. At any point in time,

Since the partial derivatives can

They will be negative for all inputs, so growth rates fall. Thus, one could say that as inputs increase, output increases less than proportionally. The relative change in output with respect to a relative change in input is
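The qualitative behaviour described here can be reproduced with a small sketch. Since the concrete function is not recoverable from the text, the form $Y = A\,K^{\alpha} L^{1-\alpha}$ and the parameter values $A = 1$, $\alpha = 0.3$, $K = 4$, $L = 9$ are assumptions made purely for illustration.

```python
def cobb_douglas(K, L, A=1.0, alpha=0.3):
    """Assumed Cobb-Douglas form Y = A * K^alpha * L^(1 - alpha)."""
    return A * K ** alpha * L ** (1 - alpha)


def marginal_product_capital(K, L, h=1e-4):
    """First partial derivative of output with respect to K (numerical)."""
    return (cobb_douglas(K + h, L) - cobb_douglas(K - h, L)) / (2 * h)


def second_derivative_capital(K, L, h=1e-3):
    """Second partial derivative of output with respect to K (numerical)."""
    return (cobb_douglas(K + h, L) - 2 * cobb_douglas(K, L)
            + cobb_douglas(K - h, L)) / (h * h)


K, L = 4.0, 9.0
mpk = marginal_product_capital(K, L)        # positive: output rises with K
curvature = second_derivative_capital(K, L)  # negative: the rise slows down
elasticity = mpk * K / cobb_douglas(K, L)   # relative change of Y per relative change of K
print(mpk, curvature, elasticity)
```

Under these assumptions the first partial derivative is positive, the second is negative, and the output elasticity with respect to capital equals the exponent $\alpha = 0.3$.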

Advanced theories

Differential equations

→ Main article: Differential equation

An important application of differential calculus is in the mathematical modeling of physical processes. Growth, motion, or forces all involve derivatives, so their formulaic description must include differentials. Typically, this leads to equations in which derivatives of an unknown function appear, so-called differential equations.

For example, the Newtonian law of motion links

the acceleration

Since many models are multidimensional, the partial derivatives explained above are often very important in their formulation; they are used to state partial differential equations. These are described and analyzed compactly by means of differential operators.
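How a differential equation determines a motion can be sketched with a simple numerical integration. The example is an assumption for illustration: a mass on a spring obeying $m\,x'' = -k\,x$, integrated with the semi-implicit Euler method using step size and parameters chosen freely.

```python
import math


def simulate(x0, v0, k=1.0, m=1.0, dt=1e-4, steps=10000):
    """Integrate m x'' = -k x with the semi-implicit Euler method.

    Returns position and velocity after steps * dt units of time.
    """
    x, v = x0, v0
    for _ in range(steps):
        a = -k * x / m   # acceleration from Newton's law, a = F / m
        v += a * dt      # velocity is the time integral of acceleration
        x += v * dt      # position is the time integral of velocity
    return x, v


x_end, v_end = simulate(1.0, 0.0)   # one unit of time in total
print(x_end, math.cos(1.0))
```

For $k = m = 1$, initial position 1 and initial velocity 0, the exact solution is $x(t) = \cos t$, and the computed position at $t = 1$ is close to $\cos 1 \approx 0.5403$.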

Differential Geometry

→ Main article: Differential geometry

The central theme of differential geometry is the extension of classical analysis to higher geometric objects. These look locally like, for example, the Euclidean space

Both the theoretical results and methods of differential geometry have significant applications in physics. For example, Albert Einstein described his theory of relativity in differential geometric terms.

Generalizations

In many applications it is desirable to be able to form derivatives of functions that are merely continuous or even discontinuous. For example, a wave breaking on a beach can be modeled by a partial differential equation, although the function describing the height of the wave is not even continuous. For this purpose, in the middle of the 20th century, the notion of derivative was generalized to the space of distributions, where a weak derivative was defined. Closely connected with this is the notion of Sobolev space.

The notion of derivative as linearization can be applied analogously to functions

A transfer of the notion of derivative to rings other than

Questions and Answers

Q: What is differential calculus?

A: Differential calculus is a branch of calculus that studies the rate of change of a variable compared to another variable, by using functions.

Q: How does it work?

A: Differential calculus allows us to find out how a shape changes from one point to the next without needing to divide the shape into an infinite number of pieces.

Q: Who developed differential calculus?

A: Differential calculus was developed in the 1670s and 1680s by Sir Isaac Newton and Gottfried Leibniz.

Q: What is integral calculus?

A: Integral calculus is the counterpart of differential calculus: integration reverses differentiation. It is used for finding areas under curves and volumes of solids with curved surfaces.

Q: When was differential calculus developed?

A: Differential calculus was developed in the 1670s and 1680s by Sir Isaac Newton and Gottfried Leibniz.

Q: What are some applications of differential calculus?

A: Some applications of differential calculus include calculating velocity, acceleration, maximum or minimum values, optimization problems, slope fields, etc.

Q: Why do we use differential calculus instead of dividing shapes into an infinite number of pieces?

A: We use differential calculus instead because it allows us to find out how a shape changes from one point to the next without needing to divide the shape into an infinite number of pieces.
