Determinant



In linear algebra, the determinant is a number (a scalar) that is assigned to a square matrix and can be calculated from its entries. It indicates how volumes change under the linear mapping described by the matrix, and it is a useful tool for solving systems of linear equations. More generally, one can assign a determinant to every linear self-mapping (endomorphism) of a finite-dimensional vector space. Common notations for the determinant of a square matrix $A$ are $\det(A)$, $\det A$ and $|A|$.

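For example, the determinant of a $2\times 2$ matrix
$$A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$$
can be calculated with the formula
$$\det A = \begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc.$$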

With the help of determinants one can, for example, determine whether a system of linear equations is uniquely solvable, and the solution can then be stated explicitly with Cramer's rule. The system of equations is uniquely solvable if and only if the determinant of the coefficient matrix is not equal to zero. Accordingly, a square matrix with entries from a field is invertible if and only if its determinant is not equal to zero.
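As an illustration, here is a minimal Python sketch of Cramer's rule, assuming exact rational arithmetic with the standard `fractions` module. The helper names `det` and `cramer` are hypothetical. Each unknown $x_i$ is the determinant of the coefficient matrix with column $i$ replaced by the right-hand side, divided by the determinant of the coefficient matrix itself. For brevity, `det` uses cofactor expansion along the first row, which is only sensible for small systems.

```python
from fractions import Fraction


def det(m):
    """Determinant by cofactor expansion along the first row (small matrices only)."""
    n = len(m)
    if n == 1:
        return m[0][0]
    total = 0
    for j in range(n):
        # Minor: delete row 0 and column j.
        minor = [row[:j] + row[j + 1:] for row in m[1:]]
        total += (-1) ** j * m[0][j] * det(minor)
    return total


def cramer(a, b):
    """Solve a x = b for a square matrix a using Cramer's rule."""
    d = det(a)
    if d == 0:
        raise ValueError("coefficient matrix is singular: no unique solution")
    solution = []
    for i in range(len(a)):
        # Replace column i of a by the right-hand side b.
        a_i = [row[:i] + [b[r]] + row[i + 1:] for r, row in enumerate(a)]
        solution.append(Fraction(det(a_i), d))
    return solution


# 2x + y = 5 and x + 3y = 10 have the unique solution x = 1, y = 3.
print(cramer([[2, 1], [1, 3]], [5, 10]))
```

Because cofactor expansion grows factorially in cost, this is a teaching sketch. For larger systems, elimination-based methods are the practical choice.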

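If one writes $n$ vectors of $\mathbb{R}^n$ as the columns of an $n\times n$ matrix, one can form the determinant of this square matrix. If these vectors form a basis, the sign of the determinant can be used to define the orientation of Euclidean space. The absolute value of the determinant equals the volume of the $n$-dimensional parallelepiped (parallelotope) spanned by the vectors.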

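If the linear mapping $f\colon \mathbb{R}^n \to \mathbb{R}^n$ is represented by the matrix $A$ and $S \subseteq \mathbb{R}^n$ is any measurable subset, then the volume of $f(S)$ is $\operatorname{vol}(S)\cdot|\det A|$.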

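If the linear mapping $f\colon \mathbb{R}^n \to \mathbb{R}^m$ with $m \geq n$ is represented by the $m\times n$ matrix $A$ and $S \subseteq \mathbb{R}^n$ is measurable, then the $n$-dimensional volume of $f(S)$ is $\operatorname{vol}(S)\cdot\sqrt{\det(A^{T}A)}$.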

The concept of determinant is of interest for

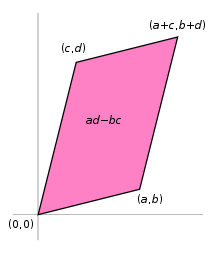

The 2x2 determinant is equal to the oriented area of the parallelogram spanned by its column vectors

Definition

There are several ways to define the determinant (see below). The most common is the following recursive definition.

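Expansion of the determinant along a column or row:

For $n=2$:
$$\det\begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix} = a_{11}a_{22} - a_{21}a_{12}$$

For $n=3$, expansion along the first column:
$$\det\begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix} = a_{11}\det\begin{pmatrix} a_{22} & a_{23} \\ a_{32} & a_{33} \end{pmatrix} - a_{21}\det\begin{pmatrix} a_{12} & a_{13} \\ a_{32} & a_{33} \end{pmatrix} + a_{31}\det\begin{pmatrix} a_{12} & a_{13} \\ a_{22} & a_{23} \end{pmatrix}$$

Correspondingly for $n = 4, 5, \dots$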

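Laplace's expansion theorem (see below) states:

- A determinant may be expanded along any column or row, provided that the checkerboard sign pattern is observed:
$$\begin{pmatrix} + & - & + & \cdots \\ - & + & - & \cdots \\ + & - & + & \cdots \\ \vdots & \vdots & \vdots & \ddots \end{pmatrix}$$

Formally, expansion along the $j$-th column can be written as
$$\det A = \sum_{i=1}^{n} (-1)^{i+j}\, a_{ij} \det A_{ij},$$

where $A_{ij}$ is the $(n-1)\times(n-1)$ submatrix of $A$ obtained by deleting the $i$-th row and the $j$-th column.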

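Example (expansion along the first column):
$$\det\begin{pmatrix} 1 & 2 & 3 \\ 0 & 4 & 5 \\ 1 & 0 & 6 \end{pmatrix} = 1\cdot\det\begin{pmatrix} 4 & 5 \\ 0 & 6 \end{pmatrix} - 0\cdot\det\begin{pmatrix} 2 & 3 \\ 0 & 6 \end{pmatrix} + 1\cdot\det\begin{pmatrix} 2 & 3 \\ 4 & 5 \end{pmatrix} = 24 - 0 + (10 - 12) = 22$$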
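The recursive definition translates almost literally into code. Below is a minimal Python sketch (the function name `laplace_det` is hypothetical) that expands along a chosen column `j`, using 0-based indices. The sign $(-1)^{i+j}$ has the same parity as with 1-based indices. Zero entries are skipped because their minors contribute nothing. The 3×3 matrix is chosen purely for illustration.

```python
def laplace_det(a, j=0):
    """Determinant via Laplace expansion along column j:
    det A = sum_i (-1)**(i + j) * a[i][j] * det(A_ij),
    where A_ij is A with row i and column j deleted (0-based indices)."""
    n = len(a)
    if n == 1:
        return a[0][0]
    total = 0
    for i in range(n):
        if a[i][j] == 0:
            continue  # a zero entry contributes nothing, so skip its minor
        minor = [row[:j] + row[j + 1:] for k, row in enumerate(a) if k != i]
        total += (-1) ** (i + j) * a[i][j] * laplace_det(minor)
    return total


A = [[1, 2, 3],
     [0, 4, 5],
     [1, 0, 6]]
# Expanding along the first or the third column gives the same value.
print(laplace_det(A), laplace_det(A, j=2))  # prints: 22 22
```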

Properties (summary, see below)

for unit matrix

where is

the transposed matrix of

- For square matrices

and

same size, the determinant multiplication theorem applies:

for an

matrix

and a number

.

- For a triangular matrix

- If a row or column consists of zeros, the determinant is 0.

- If two columns (rows) are equal, the determinant is 0.

- If you swap two columns (rows), a determinant changes its sign.

- If

the column vectors (row vectors) of a matrix and

is a number, then the following applies:

a1)

a2)

correspondingly for the other column vectors (row vectors).

b)

- Addition of a multiple of one column (row) to another column (row) does not change a determinant. One can therefore transform a determinant into a triangular determinant with a weakened Gauss algorithm and use property 6. Note property 9. and 10.a2).

- Only for

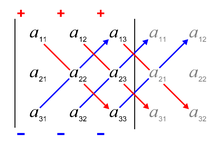

-determinants Sarrus' rule applies:

Example, application of rules 11, 10, 8:

Rule of Sarrus
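Sarrus' rule can be written out directly. The following Python sketch (the function name `sarrus` is hypothetical) deliberately rejects anything that is not 3×3, because the diagonal pattern does not generalise to larger matrices.

```python
def sarrus(a):
    """Sarrus' rule; valid ONLY for 3x3 matrices."""
    if len(a) != 3 or any(len(row) != 3 for row in a):
        raise ValueError("Sarrus' rule applies only to 3x3 matrices")
    (a11, a12, a13), (a21, a22, a23), (a31, a32, a33) = a
    # Three "downward" diagonals get a + sign, three "upward" diagonals a - sign.
    return (a11 * a22 * a33 + a12 * a23 * a31 + a13 * a21 * a32
            - a13 * a22 * a31 - a11 * a23 * a32 - a12 * a21 * a33)


print(sarrus([[1, 2, 3], [4, 5, 6], [7, 8, 9]]))  # prints: 0
print(sarrus([[2, 0, 1], [1, 3, 2], [1, 1, 2]]))  # prints: 6
```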

Axiomatic description

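A mapping $\det\colon (K^n)^n \to K$ from the space of square $n\times n$ matrices over a field $K$ into $K$ assigns to each matrix its determinant if it satisfies the following three properties, where a square matrix is written column by column as $A = (v_1, \dots, v_n)$:

- It is multilinear, i.e. linear in each column:

For all $i$ and all $v_1, \dots, v_n, w \in K^n$:
$$\det(v_1, \dots, v_{i-1}, v_i + w, v_{i+1}, \dots, v_n) = \det(v_1, \dots, v_{i-1}, v_i, v_{i+1}, \dots, v_n) + \det(v_1, \dots, v_{i-1}, w, v_{i+1}, \dots, v_n)$$

For all $i$, all $v_1, \dots, v_n \in K^n$ and all $r \in K$:
$$\det(v_1, \dots, v_{i-1}, r\,v_i, v_{i+1}, \dots, v_n) = r\,\det(v_1, \dots, v_{i-1}, v_i, v_{i+1}, \dots, v_n)$$

- It is alternating, i.e. if two columns contain the same argument, the determinant is equal to 0:

For all $v_1, \dots, v_n \in K^n$ and all $i \neq j$ with $v_i = v_j$:
$$\det(v_1, \dots, v_i, \dots, v_j, \dots, v_n) = 0$$

It follows that the sign changes when two columns are swapped:

For all $v_1, \dots, v_n \in K^n$ and all $i \neq j$:
$$\det(v_1, \dots, v_i, \dots, v_j, \dots, v_n) = -\det(v_1, \dots, v_j, \dots, v_i, \dots, v_n)$$

This consequence is often used to define "alternating". In general, however, it is not equivalent to the definition above: if alternating is defined in the second way, there is no unique determinant form when the field over which the vector space is formed has an element $x \neq 0$ with $x = -x$ (characteristic 2).

- It is normalised, i.e. the unit matrix has the determinant 1:
$$\det(E) = 1$$

It can be proved (and Karl Weierstrass did so in 1864 or even earlier) that there is one and only one such normalised alternating multilinear form on the algebra of $n\times n$ matrices over the underlying field, namely the determinant function $\det$.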

Leibniz formula

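For an $n\times n$ matrix $A = (a_{ij})$, the determinant can be defined by the Leibniz formula:
$$\det A = \sum_{\sigma \in S_n} \operatorname{sgn}(\sigma) \prod_{i=1}^{n} a_{i,\sigma(i)}$$

The sum is taken over all permutations $\sigma$ of the symmetric group $S_n$ of degree $n$. Here $\operatorname{sgn}(\sigma)$ is the sign of the permutation $\sigma$ ($+1$ if $\sigma$ is even, $-1$ if $\sigma$ is odd), and $\sigma(i)$ is the value of $\sigma$ at $i$.

This formula contains $n!$ summands and therefore becomes more unwieldy the larger $n$ is.

An alternative notation of the Leibniz formula uses the Levi-Civita symbol and Einstein's summation convention:
$$\det A = \varepsilon_{i_1 i_2 \dots i_n}\, a_{1 i_1} a_{2 i_2} \cdots a_{n i_n}$$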
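A direct, deliberately naive Python transcription of the Leibniz formula follows. It assumes Python 3.8 or later for `math.prod`, and the names `sign` and `leibniz_det` are hypothetical. The sign of a permutation is computed by counting inversions (pairs in the wrong order), whose parity equals the parity of the permutation.

```python
from itertools import permutations
from math import prod


def sign(perm):
    """Sign of a permutation of 0..n-1: +1 for an even, -1 for an odd number of inversions."""
    n = len(perm)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
    return -1 if inversions % 2 else 1


def leibniz_det(a):
    """det A = sum over all permutations s of sgn(s) * a[0][s[0]] * ... * a[n-1][s[n-1]]."""
    n = len(a)
    return sum(sign(s) * prod(a[i][s[i]] for i in range(n))
               for s in permutations(range(n)))


print(leibniz_det([[1, 2], [3, 4]]))  # prints: -2
```

The generator runs over all $n!$ permutations: 24 for $n = 4$, but already 3,628,800 for $n = 10$. This is why the formula matters more for theory than for computation.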

Determinant of an endomorphism

Since similar matrices have the same determinant, the definition of the determinant of square matrices can be transferred to the linear self-mappings (endomorphisms) represented by these matrices:

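The determinant $\det f$ of a linear map $f\colon V \to V$ of a finite-dimensional vector space $V$ into itself is defined as $\det f := \det A$, where $A$ is a matrix representing $f$ with respect to a basis of $V$.

Here it does not matter which basis is chosen, because the representation matrices of $f$ with respect to different bases are similar.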

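The definition can be formulated without using matrices as follows: let $\omega$ be a non-zero alternating multilinear form on $V$ with $n = \dim V$ arguments (a determinant function).

Then $\det f$ is the uniquely determined number with
$$\omega(f(v_1), \dots, f(v_n)) = \det f \cdot \omega(v_1, \dots, v_n)$$
for all $v_1, \dots, v_n \in V$.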

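An alternative definition is the following: let $n = \dim V$ and let $\Lambda^n f\colon \Lambda^n V \to \Lambda^n V$ be the linear map induced by $f$ on the $n$-th exterior power of $V$, which is fixed by
$$v_1 \wedge \dots \wedge v_n \mapsto f(v_1) \wedge \dots \wedge f(v_n).$$
(This mapping $\Lambda^n f$ is well defined by the universal property of the exterior power.)

Since the vector space $\Lambda^n V$ is one-dimensional, $\Lambda^n f$ is multiplication by a scalar, and this scalar is $\det f$.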

Further possibilities for calculation

Scalar triple product (spat product)

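If a $3\times3$ matrix $A$ has the column vectors $a$, $b$ and $c$, its determinant equals the scalar triple product of these vectors:
$$\det A = \det(a, b, c) = (a \times b) \cdot c$$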
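A small Python check of this identity, written with plain tuples so that no external library is assumed (all function names are hypothetical). The columns below belong to the matrix $\begin{pmatrix} 1 & 2 & 3 \\ 0 & 4 & 5 \\ 1 & 0 & 6 \end{pmatrix}$, whose determinant is 22.

```python
def cross(u, v):
    """Cross product of two 3-vectors."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def dot(u, v):
    """Standard scalar product."""
    return sum(x * y for x, y in zip(u, v))


def det3_from_columns(a, b, c):
    """Determinant of the 3x3 matrix with columns a, b, c, computed as (a x b) . c."""
    return dot(cross(a, b), c)


# Columns of the matrix [[1, 2, 3], [0, 4, 5], [1, 0, 6]].
a, b, c = (1, 0, 1), (2, 4, 0), (3, 5, 6)
print(det3_from_columns(a, b, c))  # prints: 22
print(det3_from_columns(b, a, c))  # prints: -22 (swapping two columns flips the sign)
```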

Gaussian elimination method for determinant calculation

In general, determinants can be calculated with the Gaussian elimination method using the following rules:

- If $A$ is a triangular matrix, then the product of the main diagonal elements is the determinant of $A$.

- If $B$ results from $A$ by swapping two rows or columns, then $\det B = -\det A$.

- If $B$ results from $A$ by adding a multiple of one row or column to another row or column, then $\det B = \det A$.

- If $B$ results from $A$ by multiplying a row or column by a number $c$, then $\det B = c \cdot \det A$.

Starting with any square matrix, use the last three of these four rules to transform the matrix into an upper triangular matrix, and then calculate the determinant as the product of the diagonal elements.
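A minimal Python sketch of this procedure follows. It assumes exact arithmetic via `fractions.Fraction` (floating point would also work but introduces rounding errors), and `gauss_det` is a hypothetical name. It uses only the swap and addition rules. The scaling rule is not needed because pivot rows are never rescaled.

```python
from fractions import Fraction


def gauss_det(a):
    """Determinant by Gaussian elimination, O(n^3) arithmetic operations."""
    m = [[Fraction(x) for x in row] for row in a]  # exact working copy
    n = len(m)
    det = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)  # no pivot available: the matrix is singular
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # swapping two rows flips the sign
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            # Adding a multiple of one row to another leaves det unchanged.
            m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    # m is now upper triangular: multiply in the diagonal entries.
    for i in range(n):
        det *= m[i][i]
    return det


print(gauss_det([[1, 2, 3], [0, 4, 5], [1, 0, 6]]))  # prints: 22
```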

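The determinant calculation by means of the LR decomposition (LU decomposition) $PA = LR$ is also based on this principle. Since both $L$ and $R$ are triangular matrices, their determinants are the products of their diagonal elements, and the diagonal elements of $L$ are all normalised to 1. By the determinant product theorem, the determinant is therefore
$$\det A = \det(P^{-1})\, r_{11} r_{22} \cdots r_{nn},$$
where the permutation matrix $P$ records the row swaps, so that $\det(P^{-1}) = \pm 1$.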

Laplace expansion theorem

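With Laplace's expansion theorem, one can "expand" the determinant of an $n\times n$ matrix along a row or a column. The two formulas are
$$\det A = \sum_{i=1}^{n} (-1)^{i+j}\, a_{ij} \det A_{ij} \quad \text{(expansion along the } j\text{-th column)},$$
$$\det A = \sum_{j=1}^{n} (-1)^{i+j}\, a_{ij} \det A_{ij} \quad \text{(expansion along the } i\text{-th row)},$$

where $A_{ij}$ is the $(n-1)\times(n-1)$ submatrix of $A$ obtained by deleting the $i$-th row and the $j$-th column. The product $(-1)^{i+j}\det A_{ij}$ is called the cofactor of $a_{ij}$.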

Strictly speaking, the expansion theorem only gives a procedure for calculating the summands of the Leibniz formula in a certain order. Each application reduces the dimension of the determinant by one. If desired, the procedure can be applied until a scalar results (see above).

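Laplace's expansion theorem can be generalised as follows: instead of expanding along only one row or column, one can also expand along several rows or columns. The formula for this is
$$\det A = \sum_{|J| = |I|} (-1)^{\sum I + \sum J} \det A_{IJ} \det A_{I'J'}$$

with the following designations:

- $I \subseteq \{1, \dots, n\}$ is a fixed set of row indices, and the sum runs over all sets of column indices $J \subseteq \{1, \dots, n\}$ with the same number of elements as $I$.

- $A_{IJ}$ is the submatrix of $A$ consisting of the rows with indices in $I$ and the columns with indices in $J$.

- $I'$ and $J'$ are the complements of $I$ and $J$ in $\{1, \dots, n\}$.

- $\sum I = \sum_{i \in I} i$ is the sum of the indices in $I$, and likewise for $\sum J$.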

Efficiency:

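The computational cost of Laplace's expansion theorem for a matrix of dimension $n\times n$ is of order $\mathcal{O}(n!)$, whereas Gaussian elimination needs only $\mathcal{O}(n^3)$ arithmetic operations. Laplace's theorem is therefore mainly useful for small matrices and for matrices with many zero entries.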

Other properties

Determinant product theorem

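The determinant is a multiplicative mapping in the sense that
$$\det(A \cdot B) = \det A \cdot \det B$$

for all $n\times n$ matrices $A$ and $B$.

This means that the mapping $\det\colon \mathrm{GL}(n, K) \to K^{\times}$ is a group homomorphism from the general linear group into the unit group $K^{\times}$ of the field $K$. The kernel of this mapping is the special linear group $\mathrm{SL}(n, K)$.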

More generally, the Binet-Cauchy theorem applies to the determinant of a square matrix that is the product of two (not necessarily square) matrices. Even more generally, it yields a formula for calculating a minor of order $k$ of a product of two matrices.

The case

Existence of the inverse matrix

→ Main article: Regular matrix

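A matrix $A \in K^{n\times n}$ with entries from a field $K$ is invertible (that is, regular) if and only if $\det A \neq 0$. If $A$ is invertible, the determinant of its inverse is $\det(A^{-1}) = (\det A)^{-1}$.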

Similar matrices

→ Main article: Similarity (matrix)

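If $A$ and $B$ are similar, that is, if there is an invertible matrix $X$ with $A = X^{-1}BX$, then their determinants agree, because by the determinant product theorem
$$\det A = \det(X^{-1}BX) = \det(X)^{-1}\det(B)\det(X) = \det B.$$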

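Therefore, independently of a coordinate representation, one can define the determinant of a linear self-mapping $f\colon V \to V$ of a finite-dimensional vector space $V$: choose a basis of $V$, describe $f$ by a matrix with respect to this basis and take the determinant of this matrix. The result does not depend on the chosen basis.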

There are matrices that have the same determinant but are not similar.

Block matrices

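For the determinant of a $(2\times2)$ block matrix
$$\begin{pmatrix} A & B \\ C & D \end{pmatrix}$$

with square blocks $A$ and $D$, formulas that exploit the block structure can be given under certain conditions. For $B = 0$ or $C = 0$, the generalised Laplace expansion theorem gives
$$\det\begin{pmatrix} A & 0 \\ C & D \end{pmatrix} = \det\begin{pmatrix} A & B \\ 0 & D \end{pmatrix} = \det(A)\det(D).$$

This formula is also called the box theorem.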

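If $A$ is invertible, the decomposition
$$\begin{pmatrix} A & B \\ C & D \end{pmatrix} = \begin{pmatrix} A & 0 \\ C & E \end{pmatrix} \begin{pmatrix} E & A^{-1}B \\ 0 & D - CA^{-1}B \end{pmatrix}$$

yields the formula
$$\det\begin{pmatrix} A & B \\ C & D \end{pmatrix} = \det(A)\det(D - CA^{-1}B).$$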

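If $D$ is invertible, one obtains analogously
$$\det\begin{pmatrix} A & B \\ C & D \end{pmatrix} = \det(D)\det(A - BD^{-1}C).$$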

In the special case that all four blocks have the same size and commute pairwise, the determinant product theorem yields
$$\det\begin{pmatrix} A & B \\ C & D \end{pmatrix} = \det(AD - BC).$$

Let

Eigenvalues and characteristic polynomial

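If $\chi_A(x) = \det(xE - A)$ is the characteristic polynomial of the $n\times n$ matrix $A$,
$$\chi_A(x) = x^n + a_{n-1}x^{n-1} + \dots + a_1 x + a_0,$$

then $a_0 = \chi_A(0) = (-1)^n \det A$.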

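If the characteristic polynomial decomposes into linear factors (with not necessarily distinct $\alpha_i$),
$$\chi_A(x) = (x - \alpha_1)\cdots(x - \alpha_n),$$

then in particular
$$\det A = \alpha_1 \cdots \alpha_n.$$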

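If $\lambda_1, \dots, \lambda_k$ are the pairwise distinct eigenvalues of $A$ with algebraic multiplicities $m_1, \dots, m_k$, and the characteristic polynomial decomposes into linear factors, then
$$\det A = \lambda_1^{m_1} \cdots \lambda_k^{m_k}.$$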

Continuity and differentiability

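The determinant of real square matrices of fixed dimension $n$ is a polynomial function $\det\colon \mathbb{R}^{n\times n} \to \mathbb{R}$, as follows directly from the Leibniz formula. It is therefore continuous and differentiable everywhere. Its derivative at the point $A$ can be written as
$$\frac{\partial \det}{\partial A}(A)\, X = \operatorname{tr}\bigl(A^{\#} X\bigr),$$

where $A^{\#}$ is the adjugate matrix of $A$ and $\operatorname{tr}$ denotes the trace,

or as an approximation formula
$$\det(A + X) \approx \det(A) + \operatorname{tr}\bigl(A^{\#} X\bigr),$$

if the entries of the matrix $X$ are sufficiently small.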
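The approximation can be checked numerically for $2\times2$ matrices. In that case the adjugate of $\begin{pmatrix} a & b \\ c & d \end{pmatrix}$ is $\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$, and expanding $\det(A + X)$ shows that the remaining error is exactly $\det X$. The error therefore shrinks quadratically when $X$ is scaled down. The following Python sketch assumes exact `Fraction` arithmetic, and all names in it are hypothetical.

```python
from fractions import Fraction


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def adjugate2(m):
    """Adjugate of [[a, b], [c, d]], i.e. [[d, -b], [-c, a]]."""
    (a, b), (c, d) = m
    return [[d, -b], [-c, a]]


def trace_of_product(p, q):
    """tr(P Q) = sum over i, k of P[i][k] * Q[k][i]."""
    n = len(p)
    return sum(p[i][k] * q[k][i] for i in range(n) for k in range(n))


def approximation_error(a, x):
    """det(A + X) minus the first-order approximation det(A) + tr(adj(A) X)."""
    a_plus_x = [[a[i][j] + x[i][j] for j in range(2)] for i in range(2)]
    return det2(a_plus_x) - (det2(a) + trace_of_product(adjugate2(a), x))


A = [[2, 1], [1, 3]]
for eps in (Fraction(1, 10), Fraction(1, 100), Fraction(1, 1000)):
    X = [[eps, 2 * eps], [3 * eps, 4 * eps]]
    # For 2x2 matrices the error equals det(X), which is -2 * eps**2 here.
    print(eps, approximation_error(A, X))
```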

Permanent

→ Main article: Permanent

The permanent is an "unsigned" analogue of the determinant, but is used much less frequently.
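For comparison, here is a Python sketch of the permanent (hypothetical function name, Python 3.8 or later assumed for `math.prod`). It is the Leibniz formula with every sign replaced by $+1$. The permanent is not invariant under adding a multiple of one row to another, so the Gaussian elimination shortcut does not apply, and the sketch simply sums over all $n!$ permutations.

```python
from itertools import permutations
from math import prod


def permanent(a):
    """Sum over all permutations s of a[0][s[0]] * ... * a[n-1][s[n-1]], without signs."""
    n = len(a)
    return sum(prod(a[i][s[i]] for i in range(n)) for s in permutations(range(n)))


print(permanent([[1, 2], [3, 4]]))  # prints: 10 (the determinant of this matrix is -2)
```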

Generalisation

The determinant can also be defined for matrices with entries in a commutative ring with one. This is done with the help of a certain antisymmetric multilinear mapping: if $R$ is a commutative ring with one and $M = R^n$ is the free $R$-module of rank $n$, let
$$\det\colon M^n \to R$$
be the uniquely determined mapping with the following properties:

- $\det$ is $R$-linear in each of the $n$ arguments.

- $\det$ is antisymmetric, i.e. if two of the $n$ arguments are equal, $\det$ returns zero.

- $\det(e_1, \dots, e_n) = 1$, where $e_i$ is the element of $M$ having a 1 as $i$-th coordinate and zeros otherwise.

A mapping with the first two properties is also called a determinant function, volume or alternating $n$-linear form.

Special determinants

- Wronskian (Wronski determinant)

- Pfaffian

- Vandermonde determinant

- Gram determinant

- Functional determinant (also called Jacobian determinant)

- Determinant (knot theory)

History

Historically, determinants (Latin determinare "to delimit", "to determine") and matrices are very closely related, and they still are in today's understanding. However, the term matrix was coined only more than 200 years after the first ideas about determinants. Originally, a determinant was considered in connection with systems of linear equations: it "determines" whether the system of equations has a unique solution (this is precisely the case when the determinant is not equal to zero). The first considerations of this kind for

In the 18th century, determinants became an integral part of the technique for solving systems of linear equations. In connection with his studies on intersections of two algebraic curves, Gabriel Cramer calculated the coefficients of a general conic section
$$A + By + Cx + Dy^2 + Exy + x^2 = 0$$

that passes through five given points, and established the rule that is named after him today, Cramer's rule. Colin Maclaurin had already used this formula for systems of equations with up to four unknowns.

Several well-known mathematicians such as Étienne Bézout, Leonhard Euler, Joseph-Louis Lagrange and Pierre-Simon Laplace now dealt mainly with the calculation of determinants. An important advance in the theory was achieved by Alexandre-Théophile Vandermonde in a work on elimination theory, completed in 1771 and published in 1776. In it, he formulated some fundamental statements about determinants, and he is therefore considered a founder of the theory of determinants. These results included, for example, the statement that an even number of swaps of adjacent columns or rows does not change the sign of the determinant, whereas an odd number of such swaps changes the sign.

During his investigations of binary and ternary quadratic forms, Gauss used the schematic notation of a matrix without calling this array of numbers a matrix. In the process, he defined today's matrix multiplication as a by-product of his investigations and proved the determinant product theorem for certain special cases. Augustin-Louis Cauchy further systematised the theory of the determinant. For example, he introduced the conjugate elements and clearly distinguished between the individual elements of the determinant and between subdeterminants of different orders. He also formulated and proved theorems about determinants such as the determinant product theorem and its generalisation, the Binet-Cauchy formula. In addition, he contributed significantly to establishing the term "determinant" for this concept. Augustin-Louis Cauchy can therefore also be considered a founder of the theory of the determinant.

The axiomatic treatment of the determinant as a function of $n\times n$ independent variables was first given by Karl Weierstrass, in 1864 or even earlier.

Questions and Answers

Q: What is a determinant?

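A: A determinant is a scalar (a number) computed from a square matrix. It indicates, for example, how the corresponding linear mapping changes volumes and whether the matrix is invertible.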

Q: How can the determinant of a matrix be calculated?

A: The determinant of the matrix can be calculated from the numbers in the matrix.

Q: How is the determinant of a matrix written?

A: The determinant of a matrix is written as det(A) or |A| in a formula.

Q: Are there other ways to write out the determinant of a matrix?

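A: Yes, instead of $\det\left(\begin{bmatrix} a & b \\ c & d \end{bmatrix}\right)$ and $\left|\begin{bmatrix} a & b \\ c & d \end{bmatrix}\right|$, one can simply write $\det\begin{bmatrix} a & b \\ c & d \end{bmatrix}$ and $\begin{vmatrix} a & b \\ c & d \end{vmatrix}$.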

Q: What does it mean when we say "scalar"?

A: A scalar is an individual number or quantity that has magnitude but no direction associated with it.

Q: What are square matrices?

A: Square matrices are matrices with an equal number of rows and columns, such as 2x2 or 3x3 matrices.
