Database normalization


Normalization of a relational data schema (table structure) means the division of attributes (table columns) into several relations (tables) according to the normalization rules (see below), so that a form is created which no longer contains redundancies.

A conceptual schema that contains redundant data can cause inconsistencies: when the database built on it is changed, data stored in several places may be updated only partially, leaving it outdated or contradictory. Such effects are called anomalies. In addition, storing the same data multiple times wastes storage space. Tables are normalized to prevent this redundancy.

A database schema can be protected against anomalies to varying degrees. Depending on that degree, the schema is said to be in first, second, third (and so on) normal form. Each normal form is defined by formal requirements that the schema must satisfy.

A relational data schema is brought into a normal form by progressively decomposing its relations into simpler ones on the basis of functional dependencies that apply to them, until no further decomposition is possible. However, data must not be lost in the process. Delobel's theorem can be used to formally prove that a decomposition step does not involve any loss of data.
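The lossless-decomposition condition can be illustrated with a small sketch. As the theorem is commonly stated, if the functional dependency A → B holds in a relation R(A, B, C), then R can be split into (A, B) and (A, C) and recovered by a natural join. The helper names `project`, `natural_join` and `is_lossless` and the sample rows below are assumptions made for illustration, and the check only tests one concrete set of rows, not the schema in general.

```python
def project(rows, attrs):
    """Project dict rows onto attrs; the set removes duplicate tuples."""
    return {tuple((a, row[a]) for a in attrs) for row in rows}


def natural_join(left, right):
    """Join two projections on all attributes they have in common."""
    joined = set()
    for l_tuple in left:
        for r_tuple in right:
            l_row, r_row = dict(l_tuple), dict(r_tuple)
            common = l_row.keys() & r_row.keys()
            if all(l_row[a] == r_row[a] for a in common):
                joined.add(tuple(sorted({**l_row, **r_row}.items())))
    return joined


def is_lossless(rows, attrs1, attrs2):
    """True if joining the two projections yields exactly the original rows."""
    original = {tuple(sorted(row.items())) for row in rows}
    return natural_join(project(rows, attrs1), project(rows, attrs2)) == original


# Hypothetical sample data: PostalCode -> City holds, City -> PostalCode does not.
rows = [
    {"Company": "Acme", "PostalCode": "10115", "City": "Berlin"},
    {"Company": "Bolt", "PostalCode": "10115", "City": "Berlin"},
    {"Company": "Cargo", "PostalCode": "80331", "City": "Munich"},
    {"Company": "Delta", "PostalCode": "10117", "City": "Berlin"},
]

# Splitting on the determinant PostalCode loses nothing.
good = is_lossless(rows, ["PostalCode", "City"], ["Company", "PostalCode"])
# Splitting on City produces spurious tuples when the parts are joined again.
bad = is_lossless(rows, ["Company", "City"], ["City", "PostalCode"])
```

In this sketch the second decomposition fails because joining on City pairs every Berlin company with every Berlin postal code, so the join contains rows that were never in the original relation.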

Normalization is mainly used in the design phase of a relational database. There are algorithms for normalization (synthesis algorithm (3NF), decomposition algorithm (BCNF), etc.) that can be automated.
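Algorithms of this kind reason about functional dependencies, and a common building block is the attribute closure: the set of all attributes determined by a given set of attributes. The following is a minimal sketch of that one building block, not of the synthesis or decomposition algorithm itself; the function name `closure` and the example dependencies are assumptions chosen for illustration.

```python
def closure(attrs, fds):
    """Return all attributes functionally determined by attrs.

    fds is a list of (lhs, rhs) pairs of attribute sets, read as lhs -> rhs.
    """
    result = set(attrs)
    changed = True
    while changed:
        changed = False
        for lhs, rhs in fds:
            # Apply a dependency once its left side is fully covered.
            if set(lhs) <= result and not set(rhs) <= result:
                result |= set(rhs)
                changed = True
    return result


# Hypothetical dependencies for an address table.
fds = [
    ({"AddressID"}, {"Company", "Street", "PostalCode"}),
    ({"PostalCode"}, {"City"}),
]

from_id = closure({"AddressID"}, fds)
from_postal_code = closure({"PostalCode"}, fds)
```

Because the closure of AddressID contains every attribute, AddressID is a key of this hypothetical table, while PostalCode determines only City.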

The decomposition methodology follows relational design theory.

Procedure

Goal: greater consistency through avoiding redundancy

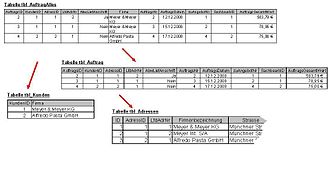

During normalization, the columns (synonyms: fields, attributes) of a table are first split into more atomic columns, e.g. an address into postal code, city and street. Tables are then split, for example the table tbl_AddressesAll with the fields Company, Street, Postal Code and City into these tables:

- tbl_Addresses with the fields AddressID, Company, Street and Postal Code

- tbl_PLZOrt with the fields Postal Code and City

See the image "Splitting the table tbl_AddressesAll". In addition, the table tbl_Addresses is given the unique primary key AddressID.

Note: This example assumes that each postal code belongs to exactly one place name. In Germany this is often not the case, e.g. in rural areas, where sometimes up to 100 places share one postal code.
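The table split described above can be sketched with Python's built-in sqlite3 module. The table names come from the example; the column name PostalCode (without a space), the column types, the sample rows and the function name `split_addresses` are assumptions made for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tbl_AddressesAll "
    "(Company TEXT, Street TEXT, PostalCode TEXT, City TEXT)"
)
conn.executemany(
    "INSERT INTO tbl_AddressesAll VALUES (?, ?, ?, ?)",
    [
        ("Acme", "Main Street 1", "10115", "Berlin"),
        ("Bolt", "Side Street 2", "10115", "Berlin"),
        ("Cargo", "Market Square 3", "80331", "Munich"),
    ],
)


def split_addresses(conn):
    """Split tbl_AddressesAll into tbl_Addresses and tbl_PLZOrt."""
    conn.execute(
        "CREATE TABLE tbl_PLZOrt "
        "(PostalCode TEXT PRIMARY KEY, City TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE tbl_Addresses ("
        " AddressID INTEGER PRIMARY KEY,"  # new surrogate key
        " Company TEXT NOT NULL,"
        " Street TEXT NOT NULL,"
        " PostalCode TEXT NOT NULL REFERENCES tbl_PLZOrt(PostalCode))"
    )
    # Each postal code/city pair is stored once.
    conn.execute(
        "INSERT INTO tbl_PLZOrt "
        "SELECT DISTINCT PostalCode, City FROM tbl_AddressesAll"
    )
    # AddressID is assigned automatically by SQLite.
    conn.execute(
        "INSERT INTO tbl_Addresses (Company, Street, PostalCode) "
        "SELECT Company, Street, PostalCode FROM tbl_AddressesAll"
    )
    conn.commit()


split_addresses(conn)
```

If two different cities shared one postal code, the insert into tbl_PLZOrt in this sketch would fail on the primary key, which is exactly the situation the note above warns about.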

The purpose of normalization is to reduce redundancy (the same fact recorded multiple times) and thereby prevent anomalies (e.g. a change applied in some places but not in all). This simplifies maintenance of the database, since each change is made in only one place, and ensures the consistency of the data.

Example

A database contains customers and their addresses as well as the orders assigned to those customers. Since the same customer can place several orders, storing the customer data (possibly including the address) in the order table would repeat it for every order, although only one set of data is valid for the customer at any time (redundancy). For example, an incorrect address might be entered for the customer in one order and the correct address in the next. This leads to contradictory data, either within this table or in relation to other tables. The data is then no longer consistent, and it is unclear which entry is correct. Both addresses may even be wrong because the customer has since moved (for the solution, see below).

In a normalized database, the customer data is stored only once, in the customer table, and each order of that customer is linked to it (usually via the customer number). If a customer relocates (a change in the VAT rate is a comparable case), the corresponding table contains several entries, but these are distinguished by a validity period. In the customer example, the applicable entry can be identified unambiguously through the combination of order date and customer number.
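The validity-period lookup can be sketched as follows, again with sqlite3. The table tbl_customer_address, all column names, the sample data and the function `address_for_order` are hypothetical; dates are stored as ISO 8601 text so that string comparison matches date order, and an empty ValidTo is assumed to mean "still valid".

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE tbl_customer_address (
    CustomerNo INTEGER NOT NULL,
    ValidFrom  TEXT NOT NULL,
    ValidTo    TEXT,              -- NULL: entry is still valid
    Address    TEXT NOT NULL,
    PRIMARY KEY (CustomerNo, ValidFrom)
);
CREATE TABLE tbl_order (
    OrderID    INTEGER PRIMARY KEY,
    CustomerNo INTEGER NOT NULL,
    OrderDate  TEXT NOT NULL
);
INSERT INTO tbl_customer_address VALUES
    (1, '2020-01-01', '2022-06-30', 'Old Street 1'),
    (1, '2022-07-01', NULL, 'New Road 5');
INSERT INTO tbl_order VALUES
    (100, 1, '2021-03-15'),
    (101, 1, '2023-02-01');
""")


def address_for_order(conn, order_id):
    """Return the address that was valid on the order date, or None."""
    row = conn.execute(
        """
        SELECT a.Address
        FROM tbl_order o
        JOIN tbl_customer_address a
          ON a.CustomerNo = o.CustomerNo
         AND o.OrderDate >= a.ValidFrom
         AND (a.ValidTo IS NULL OR o.OrderDate <= a.ValidTo)
        WHERE o.OrderID = ?
        """,
        (order_id,),
    ).fetchone()
    return row[0] if row else None


before_move = address_for_order(conn, 100)
after_move = address_for_order(conn, 101)
```

The order rows themselves store no address; the combination of customer number and order date selects the one address entry whose validity period covers the order.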

Another advantage of avoiding redundancy, which still matters today for databases with millions of records, is the lower storage requirement: a record in one table, for example tbl_order, refers to a record in another table, for example tbl_customer, instead of containing that data itself.

These are the recommendations that normalization theory makes for database development, chiefly to ensure the consistency of the data and an unambiguous selection of data. However, in particular use cases the freedom from redundancy pursued for this purpose competes with processing speed or other goals. It may therefore make sense to dispense with normalization, or to reverse it by means of denormalization, in order to

- increase the processing speed (performance) or

- simplify queries and thus reduce the susceptibility to errors or

- map specific features of processes (for example, business processes).

In these cases, automatic reconciliation routines should be run regularly to avoid inconsistencies. Alternatively, the data in question can be locked against changes.
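A reconciliation routine of this kind might look like the following sketch. It assumes a denormalized tbl_order that carries a copy of the customer's city in a hypothetical column CustomerCity; the schema, the sample data and the function `reconcile` are illustrative assumptions, and whether a copy should be overwritten at all depends on the application.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE tbl_customer (
    CustomerNo INTEGER PRIMARY KEY,
    City       TEXT NOT NULL
);
CREATE TABLE tbl_order (
    OrderID      INTEGER PRIMARY KEY,
    CustomerNo   INTEGER NOT NULL REFERENCES tbl_customer(CustomerNo),
    CustomerCity TEXT NOT NULL  -- denormalized copy of tbl_customer.City
);
INSERT INTO tbl_customer VALUES (1, 'Hamburg'), (2, 'Cologne');
INSERT INTO tbl_order VALUES
    (100, 1, 'Hamburg'),
    (101, 1, 'Berlin'),   -- stale copy
    (102, 2, 'Cologne');
""")


def reconcile(conn):
    """Repair stale copies of the city and return the affected order IDs."""
    stale = [
        row[0]
        for row in conn.execute(
            """
            SELECT o.OrderID
            FROM tbl_order o
            JOIN tbl_customer c ON c.CustomerNo = o.CustomerNo
            WHERE o.CustomerCity <> c.City
            ORDER BY o.OrderID
            """
        )
    ]
    # Overwrite each stale copy with the value from the customer table.
    conn.execute(
        """
        UPDATE tbl_order
        SET CustomerCity = (
            SELECT c.City FROM tbl_customer c
            WHERE c.CustomerNo = tbl_order.CustomerNo
        )
        WHERE CustomerCity <> (
            SELECT c.City FROM tbl_customer c
            WHERE c.CustomerNo = tbl_order.CustomerNo
        )
        """
    )
    conn.commit()
    return stale


repaired = reconcile(conn)
```

Running the routine a second time returns an empty list, which makes it safe to schedule at regular intervals.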

Figure: Splitting the table tbl_AddressesAll (splitting tables for normalization)

Comments

Weaknesses in the data model caused by a lack of normalization can, in addition to the typical anomalies, increase the effort required for later development. On the other hand, normalization steps can be deliberately omitted during database design for performance reasons (denormalization). A typical example is the star schema in a data warehouse.

Creating a normalized schema is supported by automatic derivation from a conceptual data model; in practice, an extended entity-relationship model (ERM) or a Unified Modeling Language (UML) class diagram serves as the starting point. The relation schema derived from the conceptual design can then be checked against the normal forms; however, formalisms and algorithms exist that ensure this property from the outset.

Instead of the original ER model developed by Peter Chen in 1976, extended ER models are used today: the Structured ERM (SERM), the E3R model, the EER model, and the SAP SERM used by SAP AG.

If a relation schema is not in 1NF, it is said to be in non-first normal form (NF²) or unnormalized form (UNF).

The process of normalizing and decomposing a relation into 1NF, 2NF, and 3NF must preserve the ability to reconstruct the original relation; that is, the decomposition must be lossless (join-preserving) and dependency-preserving.

Questions and Answers

Q: What is database normalization?

A: Database normalization is an approach to designing databases that was introduced by Edgar F. Codd in the 1970s. It involves breaking data into separate groups, known as tables, and establishing relationships between them to provide useful information.

Q: What is a flat file database?

A: A flat file database is one in which all of the data is grouped together, as in a spreadsheet. This can lead to many blank fields and repeated information, which makes mistakes more likely.

Q: How do relational databases reduce the chance of mistakes happening?

A: Relational databases break the data into groups, which reduces the chance of mistakes and avoids taking up more space than necessary.

Q: What are normal forms?

A: Normal forms are sets of criteria that a database must meet to be considered a well-designed relational database. There are several normal forms, each with its own set of rules that the database should be designed to meet.

Q: What are some drawbacks of meeting certain sets of criteria for normal forms?

A: The drawback of meeting such a set of criteria is usually that querying certain data from the database will become more difficult.
