Computer science

Computer science is the science of the systematic representation, storage, processing and transmission of information, with particular emphasis on automatic processing with digital computers. It is at once a fundamental science, a formal science and an engineering discipline.

Disciplines of computer science

Computer science is divided into the subfields of theoretical computer science, practical computer science and technical computer science.

The applications of computer science in the various areas of everyday life as well as in other disciplines, such as business informatics, geoinformatics and medical informatics, come under the heading of applied computer science. The impact on society is also studied in an interdisciplinary manner.

Theoretical computer science forms the theoretical basis for the other subfields. It provides fundamental insights into the decidability of problems, the classification of their complexity, and the modeling of automata and formal languages.

Disciplines of practical and technical computer science are based on these findings. They deal with central problems of information processing and search for applicable solutions.

The results are ultimately used in applied computer science. This field covers hardware and software implementations and thus a large part of the commercial IT market. In addition, the interdisciplinary subjects investigate how information technology can solve problems in other scientific fields, for example by developing geodatabases for geography, but also in business informatics or bioinformatics.

Theoretical Computer Science

→ Main article: Theoretical computer science

As the backbone of computer science, theoretical computer science deals with the abstract and mathematical aspects of the discipline. The field is broad and covers, among other things, topics from theoretical linguistics (theory of formal languages or automata theory), computability theory and complexity theory. The goal of these subfields is to give comprehensive answers to fundamental questions such as "What can be computed?" and "How efficiently can something be computed?".

Automata theory and formal languages

→ Main article: Automata theory and formal languages

In computer science, automata are "imaginary machines" that behave according to certain rules. A finite state machine has a finite set of internal states. It reads an "input word" one character at a time and performs a state transition at each character. In addition, it can output an "output symbol" at each state transition. At the end of the input, the automaton can accept or reject the input word.

The approach of formal languages has its origin in linguistics and is therefore well suited for the description of programming languages. However, formal languages can also be described by automata models, since the set of all words accepted by an automaton can be considered a formal language.
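Here is a minimal C sketch of the finite state machine described above. It is a hypothetical example, not part of the original text: a deterministic finite automaton over the alphabet {0, 1} that accepts exactly the words containing an even number of 1s. The set of words it accepts is therefore itself a formal language. The names `dfa_state`, `delta` and `dfa_accepts` are illustrative.

```c
#include <stdbool.h>

/* States of a DFA over the alphabet {'0', '1'}. DEAD is a rejecting
   trap state entered on any symbol outside the alphabet. */
enum dfa_state { EVEN, ODD, DEAD };

/* Transition function: exactly one state change per input character. */
static enum dfa_state delta(enum dfa_state q, char c) {
    if (q == DEAD) return DEAD;
    if (c == '0') return q;                       /* '0' keeps the parity */
    if (c == '1') return q == EVEN ? ODD : EVEN;  /* '1' flips the parity */
    return DEAD;
}

/* Accepts exactly the words with an even number of '1' characters. */
bool dfa_accepts(const char *word) {
    enum dfa_state q = EVEN;           /* start state: zero 1s seen so far */
    for (; *word != '\0'; ++word)
        q = delta(q, *word);
    return q == EVEN;                  /* EVEN is the only accepting state */
}
```

For example, `dfa_accepts("1010")` returns true and `dfa_accepts("0111")` returns false. The only information carried from one character to the next is the current state, which is what makes the machine finite.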

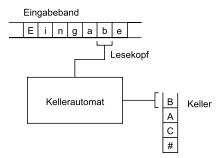

More complex models have a memory. Examples are pushdown automata and the Turing machine. According to the Church-Turing thesis, the Turing machine can compute every function that is intuitively computable, that is, computable by a human following a fixed procedure.
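The memory that distinguishes a pushdown automaton from a finite state machine can be sketched in C as an explicit stack. The sketch below is a hypothetical example. It recognizes the words a^n b^n (n a's followed by the same number of b's), a language that no finite state machine can accept. One simplification applies: a real pushdown automaton has an unbounded stack, whereas `STACK_MAX` here is an arbitrary assumed limit.

```c
#include <stdbool.h>
#include <stddef.h>

#define STACK_MAX 256   /* assumption: a real PDA's stack is unbounded */

/* Recognizes { a^n b^n : n >= 0 }. Accepting it requires counting
   arbitrarily many a's, which a finite set of states cannot do. */
bool pda_accepts_anbn(const char *w) {
    char stack[STACK_MAX];
    size_t top = 0;
    bool reading_bs = false;            /* once a 'b' is seen, no 'a' may follow */
    for (; *w != '\0'; ++w) {
        if (*w == 'a' && !reading_bs) {
            if (top == STACK_MAX) return false;   /* sketch gives up: stack full */
            stack[top++] = 'A';                   /* push one marker per 'a' */
        } else if (*w == 'b') {
            reading_bs = true;
            if (top == 0 || stack[--top] != 'A')  /* pop one marker per 'b' */
                return false;                     /* more b's than a's */
        } else {
            return false;               /* 'a' after 'b', or a foreign symbol */
        }
    }
    return top == 0;                    /* accept iff every 'a' was matched */
}
```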

Computability theory

→ Main article: Computability theory

In the context of computability theory, theoretical computer science investigates which problems can be solved with which machines. A computational model or a programming language is called Turing-complete if it can simulate a universal Turing machine. All computers in use today (idealized as having unlimited memory) and most programming languages are Turing-complete, which means that they can all solve the same tasks. Alternative computational models such as the lambda calculus, WHILE programs, μ-recursive functions and register machines also turned out to be Turing-complete. These findings gave rise to the Church-Turing thesis, which is generally accepted even though it cannot be formally proved.
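The following C sketch makes the Turing machine model concrete by simulating a one-tape Turing machine driven by a table of transition rules. The rule table is a hypothetical example that increments a binary number written on the tape, with `_` as the blank symbol. The names `Rule`, `tm_run`, `Q_INC` and `Q_HALT` are illustrative. Swapping in a different rule table changes the machine, not the simulator.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* One transition: in `state`, reading `read`, write `write`,
   move the head by `move` (-1 = left, +1 = right), enter `next`. */
typedef struct {
    int state;
    char read;
    char write;
    int move;
    int next;
} Rule;

enum { Q_INC = 0, Q_HALT = 1 };

/* Hypothetical machine: add 1 to a binary number, head starting on the last digit. */
static const Rule rules[] = {
    { Q_INC, '1', '0', -1, Q_INC  },  /* 1 + carry = 0, carry moves left */
    { Q_INC, '0', '1', -1, Q_HALT },  /* 0 + carry = 1, done */
    { Q_INC, '_', '1', -1, Q_HALT },  /* carry past the leftmost digit */
};

/* Runs the machine on `tape` from position `head`. Returns false if no
   rule applies or the head leaves the (finite) tape buffer. */
bool tm_run(char *tape, int head) {
    int state = Q_INC;
    int len = (int)strlen(tape);
    while (state != Q_HALT) {
        if (head < 0 || head >= len) return false;
        const Rule *r = NULL;
        for (size_t i = 0; i < sizeof rules / sizeof rules[0]; ++i)
            if (rules[i].state == state && rules[i].read == tape[head])
                r = &rules[i];
        if (r == NULL) return false;    /* no applicable rule */
        tape[head] = r->write;
        head += r->move;
        state = r->next;
    }
    return true;
}
```

For example, running it on the tape `"_1011"` with the head at position 4 leaves `"_1100"` on the tape (11 + 1 = 12 in binary).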

The notion of decidability can be illustrated by the question of whether a certain problem can be solved algorithmically. An example of a decidable problem is whether a given text is a syntactically correct program. An example of an undecidable problem is whether a given program with given input will ever halt; this is known as the halting problem.
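One way to see why the halting problem is hard is to try the obvious approach: simulate the program. The C sketch below is an illustrative assumption, not a method from the source. It runs the loop `while (n != 1) n = (n even) ? n / 2 : 3n + 1` for at most `max_steps` iterations. If the loop halts within the budget, the answer HALTED is certain. If the budget runs out, the answer is only UNKNOWN, because the loop might halt one step later or never. Whether this particular loop halts for every starting value is the open Collatz conjecture. The undecidability of the halting problem means that no algorithm can close this gap for all programs.

```c
#include <stdint.h>

typedef enum { HALTED, UNKNOWN } Verdict;

/* Step-bounded simulation of the Collatz loop starting at n.
   HALTED is a definite answer; UNKNOWN only means the budget ran out. */
Verdict collatz_halts_within(uint64_t n, unsigned max_steps, unsigned *steps_taken) {
    unsigned steps = 0;
    while (n != 1) {
        if (steps == max_steps) {       /* give up: no conclusion possible */
            *steps_taken = steps;
            return UNKNOWN;
        }
        n = (n % 2 == 0) ? n / 2 : 3 * n + 1;
        ++steps;
    }
    *steps_taken = steps;
    return HALTED;
}
```

Starting at 0, the loop never halts (0 stays 0), yet the simulator can only ever report UNKNOWN, however large the budget.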

Complexity theory

→ Main article: Complexity theory

Complexity theory deals with the resources needed to solve algorithmically solvable problems on various mathematically defined formal machine models, and with the quality of the algorithms that solve them. In particular, it studies the resources "runtime" and "memory", whose requirements are usually expressed in Landau notation as a function of the length of the input. Landau notation assigns algorithms whose runtime or memory requirements differ by at most a constant factor to the same class. A complexity class thus groups problems that can be solved within the same asymptotic resource bound.

An algorithm whose running time is independent of the input length operates "in constant time", written $\mathcal{O}(1)$.
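Two small C functions, both hypothetical examples, illustrate the difference between constant and linear running time. Returning the first element of a list takes the same time whatever the list length $n$, i.e. $\mathcal{O}(1)$. Checking whether an unsorted list contains a value may have to inspect all $n$ elements, i.e. $\mathcal{O}(n)$.

```c
#include <stdbool.h>
#include <stddef.h>

/* Constant time, O(1): the work does not depend on the list length n. */
int first_element(const int *list, size_t n) {
    (void)n;                 /* assumes n >= 1 */
    return list[0];
}

/* Linear time, O(n): in the worst case (value absent) every one of the
   n entries of the unsorted list is inspected exactly once. */
bool contains(const int *list, size_t n, int x) {
    for (size_t i = 0; i < n; ++i)
        if (list[i] == x) return true;
    return false;
}
```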

So far, complexity theory provides almost exclusively upper bounds on the resource requirements of problems. Methods for proving exact lower bounds are hardly developed and are known for only a few problems. One example is sorting a list of values by comparisons under a given order relation, which has the lower bound $\Omega(n \log n)$.
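The lower bound for comparison sorting follows from a standard decision-tree argument, sketched here for even $n$. This is the usual textbook proof, not taken from the source. Any comparison sort can be viewed as a binary tree of comparisons whose leaves must distinguish all $n!$ orderings of the input. Its height $h$, the worst-case number of comparisons, therefore satisfies:

$$
2^h \ge n! \;\Longrightarrow\; h \ge \log_2 n! \ge \log_2\left(\left(\frac{n}{2}\right)^{n/2}\right) = \frac{n}{2}\log_2\frac{n}{2} \in \Omega(n \log n)
$$

The second inequality holds because the $n/2$ largest factors of $n!$ are each at least $n/2$, and the remaining factors are at least 1.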

The biggest open question in complexity theory is "P = NP?". It is one of the Millennium Prize Problems, for each of which the Clay Mathematics Institute offers a prize of one million US dollars. If P does not equal NP, then NP-complete problems cannot be solved efficiently, that is, in polynomial time on a deterministic Turing machine.
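The subset-sum problem, which is known to be NP-complete, illustrates the asymmetry behind the P = NP question. Given a list of integers and a target, is there a subset that sums to the target? In the C sketch below (illustrative names `verify_subset_sum` and `search_subset_sum`), checking a proposed subset takes time linear in $n$. The brute-force search, by contrast, tries all $2^n$ subsets. No polynomial-time algorithm for subset sum is known; if P = NP, one would exist.

```c
#include <stdbool.h>
#include <stddef.h>

/* Verifying a proposed solution (a "certificate") is fast: O(n).
   chosen[i] marks whether item i belongs to the candidate subset. */
bool verify_subset_sum(const long *items, const bool *chosen, size_t n, long target) {
    long sum = 0;
    for (size_t i = 0; i < n; ++i)
        if (chosen[i]) sum += items[i];
    return sum == target;
}

/* Finding a solution by brute force tries all 2^n subsets, each encoded
   as the bits of `mask` (assumes n < 64). */
bool search_subset_sum(const long *items, size_t n, long target) {
    for (unsigned long long mask = 0; mask < (1ULL << n); ++mask) {
        long sum = 0;
        for (size_t i = 0; i < n; ++i)
            if (mask & (1ULL << i)) sum += items[i];
        if (sum == target) return true;
    }
    return false;
}
```

For the list {3, 34, 4, 12, 5, 2}, the target 9 is reachable (4 + 5), while 30 is not. Doubling $n$ squares the number of subsets the search must consider, whereas checking a single answer only takes twice as long.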

Theory of programming languages

This area deals with the theory, analysis, characterization, and implementation of programming languages and is actively researched in both practical and theoretical computer science. The subfield strongly influences related disciplines such as parts of mathematics and linguistics.

Theory of formal methods

Formal methods theory deals with a variety of techniques for the formal specification and verification of software and hardware systems. The motivation for this field comes from engineering thinking: rigorous mathematical analysis helps to improve the reliability and robustness of a system. These properties are particularly important for systems operating in safety-critical domains. Research into such methods requires knowledge of mathematical logic and formal semantics, among other things.
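Formal methods start from a precise specification. The following C sketch, a hypothetical example, writes the specification of an integer square root as a precondition, a loop invariant and a postcondition. A deductive verification tool would try to prove that these conditions hold for every input. The `assert` calls here merely check them at run time for the inputs actually executed, and they disappear entirely when compiled with `NDEBUG`. The input bound of 1,000,000 is an assumption that keeps `(r + 1) * (r + 1)` from overflowing.

```c
#include <assert.h>

/* Integer square root with its contract written as runtime assertions. */
unsigned isqrt(unsigned n) {
    assert(n <= 1000000u);              /* precondition (assumed bound) */
    unsigned r = 0;
    while ((r + 1) * (r + 1) <= n) {
        assert(r * r <= n);             /* loop invariant */
        ++r;
    }
    /* postcondition: r is the largest integer with r * r <= n */
    assert(r * r <= n && n < (r + 1) * (r + 1));
    return r;
}
```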

Practical computer science

→ Main article: Practical computer science

Practical computer science develops fundamental concepts and methods for solving concrete problems in the real world, for example the management of data in data structures or the development of software. The development of algorithms plays an important role here. Examples are sorting and search algorithms.
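As an example of a search algorithm, here is a C sketch of binary search on an array sorted in ascending order; the function name and signature are illustrative. Because each comparison halves the remaining range, it needs only about $\log_2 n$ comparisons, compared with up to $n$ for a linear scan.

```c
#include <stddef.h>

/* Binary search in an ascending array a[0..n).
   Returns the index of key, or -1 if key is absent. */
long binary_search(const int *a, size_t n, int key) {
    size_t lo = 0, hi = n;                 /* search the half-open range [lo, hi) */
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;   /* avoids overflow of lo + hi */
        if (a[mid] < key)
            lo = mid + 1;                  /* key can only be in the right half */
        else if (a[mid] > key)
            hi = mid;                      /* key can only be in the left half */
        else
            return (long)mid;
    }
    return -1;
}
```

Computing the midpoint as `lo + (hi - lo) / 2` rather than `(lo + hi) / 2` avoids integer overflow on very large arrays.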

One of the central topics of practical computer science is software engineering, which deals with the systematic creation of software. It also develops concepts and proposed solutions for large software projects that are meant to allow a repeatable process from the idea to the finished software.

C source code:

```c
/**
 * Calculate the ggT (greatest common divisor) of two numbers
 * using the Euclidean algorithm
 */
int ggt(int number1, int number2) {
    int temp;
    while (number2 != 0) {
        temp = number1 % number2;
        number1 = number2;
        number2 = temp;
    }
    return number1;
}
```

→ compiler → machine code (schematic):

```
… 0010 0100 1011 0111 1000 1110 1100 1011 0101 1001 0010 0001 0111 0010 0011 1101 0001 0000 1001 0100 1000 1001 1011 1110 0001 0011 0101 1001 0111 0010 0011 1101 0001 0011 1001 1100 …
```

An important topic in practical computer science is compiler construction, which is also studied in theoretical computer science. A compiler is a program that translates other programs from a source language (for example, Java or C++) into a target language. A compiler allows a human to develop software in a more abstract language than the machine language used by the CPU.
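A deliberately tiny example can illustrate the translation step a compiler performs. The C sketch below is hypothetical and not modelled on any real compiler. It uses recursive descent to compile arithmetic expressions made of single digits, `+`, `*` and parentheses into instructions for an invented stack machine, and then executes that code. The grammar encodes the usual precedence, so `*` binds more tightly than `+`. Error handling is omitted: the input is assumed to be well-formed and `out` is assumed to be large enough.

```c
#include <stddef.h>

/* Instruction set of a hypothetical stack machine (the "target language"). */
typedef enum { PUSH, ADD, MUL } Op;
typedef struct { Op op; int arg; } Instr;

typedef struct {
    const char *src;   /* remaining source text */
    Instr *out;        /* buffer for emitted code */
    size_t len;        /* number of instructions emitted so far */
} Compiler;

static void emit(Compiler *c, Op op, int arg) { c->out[c->len++] = (Instr){ op, arg }; }

static void expr(Compiler *c);

/* factor := digit | '(' expr ')' */
static void factor(Compiler *c) {
    if (*c->src == '(') {
        c->src++;
        expr(c);
        c->src++;                          /* skip ')' */
    } else {
        emit(c, PUSH, *c->src++ - '0');
    }
}

/* term := factor { '*' factor } */
static void term(Compiler *c) {
    factor(c);
    while (*c->src == '*') { c->src++; factor(c); emit(c, MUL, 0); }
}

/* expr := term { '+' term } */
static void expr(Compiler *c) {
    term(c);
    while (*c->src == '+') { c->src++; term(c); emit(c, ADD, 0); }
}

/* Translates source text into stack-machine code; returns the code length. */
size_t compile(const char *source, Instr *out) {
    Compiler c = { source, out, 0 };
    expr(&c);
    return c.len;
}

/* Executes the generated code, much as a CPU executes machine code. */
int run(const Instr *code, size_t len) {
    int stack[64];
    size_t sp = 0;
    for (size_t i = 0; i < len; ++i) {
        switch (code[i].op) {
        case PUSH: stack[sp++] = code[i].arg; break;
        case ADD:  sp--; stack[sp - 1] += stack[sp]; break;
        case MUL:  sp--; stack[sp - 1] *= stack[sp]; break;
        }
    }
    return stack[0];
}
```

For example, compiling `2+3*4` yields the five instructions PUSH 2, PUSH 3, PUSH 4, MUL, ADD, which evaluate to 14. Compiling `(2+3)*4` yields code that evaluates to 20.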

An example of the use of data structures is the B-tree, which allows fast searching in large data sets in databases and file systems.
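The C sketch below shows the search procedure that makes B-trees fast. The node layout and the tiny `ORDER` of 3 are assumptions chosen for readability. Each node holds sorted keys and one more child pointer than keys. Search visits only one node per level. Because a B-tree is balanced and, in practice, holds many keys per node, the number of levels (and hence of disk or page accesses) stays small even for very large data sets. Real implementations use much larger nodes and search within a node by binary search; insertion with node splitting is omitted here.

```c
#include <stdbool.h>
#include <stddef.h>

#define ORDER 3   /* assumption: at most ORDER - 1 = 2 keys per node */

typedef struct BNode {
    int nkeys;                     /* number of keys in use */
    int keys[ORDER - 1];           /* keys in ascending order */
    struct BNode *child[ORDER];    /* child[i]: keys between keys[i-1] and keys[i]; NULL in leaves */
} BNode;

/* Scans the sorted keys of the current node; if the key is not there,
   descends into the single child whose key range covers it. */
bool btree_contains(const BNode *node, int key) {
    while (node != NULL) {
        int i = 0;
        while (i < node->nkeys && key > node->keys[i])
            ++i;
        if (i < node->nkeys && key == node->keys[i])
            return true;
        node = node->child[i];     /* NULL in a leaf ends the search */
    }
    return false;
}
```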

Computer engineering

→ Main article: Computer engineering

Computer Engineering deals with the hardware-related fundamentals of computer science, such as microprocessor technology, computer architecture, embedded and real-time systems, computer networks including the associated system-related software, as well as the modelling and evaluation methods developed for this purpose.

Microprocessor technology, computer design process

Microprocessor technology is dominated by the rapid development of semiconductor technology. Feature sizes in the nanometer range make it possible to miniaturize highly complex circuits with several billion individual components. This complexity can only be mastered with sophisticated design tools and powerful hardware description languages. The path from the idea to the finished product leads through many stages, which are largely computer-aided and ensure a high degree of accuracy and freedom from errors. If hardware and software are designed together because of high performance requirements, this is referred to as hardware-software codesign.

Architectures

Computer architecture or system architecture is the field that researches concepts for the construction of computers or systems. In computer architecture, for example, the interaction of processors, main memory and control units (controllers) and peripherals is defined and improved. The research area is oriented both to the requirements of the software and to the possibilities that arise through the further development of integrated circuits. One approach is reconfigurable hardware such as FPGAs (Field Programmable Gate Arrays), whose circuit structure can be adapted to the respective requirements.

Based on the architecture of the sequentially operating von Neumann machine, today's computers usually consist of a processor (which itself can contain several processor cores, memory controllers and a whole hierarchy of cache memories), random access memory (RAM), and input/output interfaces to secondary storage (e.g. hard disk or SSD). Because of the many fields of application, a wide spectrum of processors is in use today. It ranges from simple microcontrollers, e.g. in household appliances, through particularly energy-efficient processors in mobile devices such as smartphones or tablet computers, to internally parallel high-performance processors in personal computers and servers. Parallel computers, in which computing operations can be carried out on several processors simultaneously, are gaining in importance. Progress in chip technology already makes it possible to place a large number of processor cores (currently on the order of 100 to 1000) on a single chip (multi-core processors, multi/manycore systems, "System-on-a-Chip" (SoCs)).

If the computer is integrated into a technical system and performs tasks such as control, regulation or monitoring that are largely invisible to the user, this is referred to as an embedded system. Embedded systems are used in a variety of everyday devices such as household appliances, vehicles, consumer electronics devices, mobile phones, but also in industrial systems, e.g. in process automation or medical technology. Since embedded computers are always and everywhere available, one also speaks of ubiquitous computing. Increasingly, these systems are networked, e.g. with the Internet ("Internet of Things"). Networks of interacting elements with physical input from and output to their environment are also called Cyber-Physical Systems. One example is wireless sensor networks for environmental monitoring.

Real-time systems are designed to respond to certain time-critical processes in the external world with an appropriate reaction speed. This requires a guarantee that the execution time of each response does not exceed its predefined deadline. Many embedded systems are also real-time systems.

Computer communication plays a central role in all multi-computer systems. This enables the electronic exchange of data between computers and thus represents the technical basis of the Internet. In addition to the development of routers, switches or firewalls, this also includes the development of the software components required to operate these devices. This includes in particular the definition and standardization of network protocols such as TCP, HTTP or SOAP. Protocols are the languages in which computers exchange information with each other.
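HTTP, whose messages are plain text with a prescribed syntax, shows what it means for a protocol to be a "language". The C sketch below (the function name `format_http_get` is illustrative) builds a minimal HTTP/1.1 GET request. The request consists of a request line, header lines each ending in CR LF, and an empty line that ends the header section. HTTP/1.1 requires the `Host` header; the `Connection: close` header is an optional choice made here. Sending the text over a TCP connection is outside the scope of the sketch.

```c
#include <stdio.h>

/* Writes a minimal HTTP/1.1 GET request into buf.
   Returns the length snprintf reports (>= size means it was truncated). */
int format_http_get(char *buf, size_t size, const char *host, const char *path) {
    return snprintf(buf, size,
                    "GET %s HTTP/1.1\r\n"      /* request line: method, target, version */
                    "Host: %s\r\n"             /* mandatory in HTTP/1.1 */
                    "Connection: close\r\n"    /* optional header */
                    "\r\n",                    /* empty line ends the headers */
                    path, host);
}
```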

In distributed systems, a large number of processors work together without shared memory. Usually, processes that communicate with each other via messages regulate the cooperation of individual, largely independent computers in a network (cluster). Keywords in this context are, for example, middleware, grid computing and cloud computing.

Modelling and evaluation

Given the general complexity of such systems, special modeling methods have been developed as a basis for evaluating the architectural approaches mentioned above, so that evaluations can be carried out before the system is actually built. Of particular importance is the modeling and evaluation of the resulting system performance, e.g. by means of benchmark programs. Methods developed for performance modeling include queueing models, Petri nets and special traffic-theory models. In many cases, computer simulation is also used, especially in processor development.
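The M/M/1 queue is a small example of a queueing model: Poisson arrivals at rate $\lambda$, exponentially distributed service times with rate $\mu$, and a single server. It has well-known closed-form steady-state results. Utilization is $\rho = \lambda/\mu$, the mean number of jobs in the system is $L = \rho/(1-\rho)$, and the mean response time is $W = 1/(\mu - \lambda)$, consistent with Little's law $L = \lambda W$. The C sketch below evaluates these formulas; the struct and function names are illustrative.

```c
#include <stdbool.h>

/* Steady-state metrics of an M/M/1 queue. */
typedef struct {
    double utilization;      /* rho = lambda / mu */
    double mean_in_system;   /* L = rho / (1 - rho) */
    double mean_response;    /* W = 1 / (mu - lambda); note L = lambda * W */
} MM1;

/* Returns false if the parameters are invalid or the queue is unstable
   (lambda >= mu), in which case the queue grows without bound. */
bool mm1_metrics(double lambda, double mu, MM1 *out) {
    if (lambda < 0.0 || mu <= 0.0 || lambda >= mu) return false;
    double rho = lambda / mu;
    out->utilization = rho;
    out->mean_in_system = rho / (1.0 - rho);
    out->mean_response = 1.0 / (mu - lambda);
    return true;
}
```

Take, for instance, a server that handles $\mu = 10$ requests per second and receives $\lambda = 8$ per second. It is 80% utilized, holds 4 requests on average, and has a mean response time of 0.5 s. Because $L$ grows like $1/(1-\rho)$, response times rise sharply as utilization approaches 100%. Such models yield insights of this kind before a system is built.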

In addition to performance, other system properties can also be studied through modeling. For example, the energy consumption of computer components is becoming an increasingly important consideration. Given the growing complexity of hardware and software, problems of reliability, fault diagnosis and fault tolerance are also of great importance, especially in safety-critical applications. Solution methods exist for these problems, mostly based on redundant hardware or software elements.
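Triple modular redundancy (TMR) is a classic redundancy technique. Three identical units compute the same result and a voter outputs the majority, so that a fault in any single unit is masked. The C sketch below (function names are illustrative) implements a bitwise majority voter. For fault diagnosis, it also reports which units disagree with the voted result.

```c
#include <stdint.h>

/* Bitwise majority vote: an output bit is 1 iff it is 1 in at least
   two of the three redundant results. */
uint32_t tmr_vote(uint32_t a, uint32_t b, uint32_t c) {
    return (a & b) | (a & c) | (b & c);
}

/* Fault diagnosis: returns a bitmask of the units (bit 0 = a, bit 1 = b,
   bit 2 = c) whose result differs from the voted result. */
unsigned tmr_faulty_units(uint32_t a, uint32_t b, uint32_t c) {
    uint32_t v = tmr_vote(a, b, c);
    return (unsigned)((a != v) | ((b != v) << 1) | ((c != v) << 2));
}
```

If two units fail in the same way, the voter outputs their wrong value: TMR masks single faults only.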

Relationships to other computer science fields and other disciplines

Computer engineering has close links to other areas of computer science and engineering. It builds on electronics and circuitry, with digital circuits in the foreground (digital technology). For the higher software layers, it provides the interfaces on which these layers in turn build. Particularly via embedded systems and real-time systems, there are close links to adjacent areas of electrical engineering and mechanical engineering such as control, regulation and automation technology as well as robotics.

Computer science in interdisciplinary sciences

Under the collective term of applied computer science "one summarizes the application of methods of core computer science in other sciences ...". A number of interdisciplinary subfields and research approaches have developed around computer science, some of which have become sciences in their own right. Examples:

Computational sciences

This interdisciplinary field deals with the computer-aided analysis, modelling and simulation of scientific problems and processes. Its subfields are distinguished by the natural science they address:

- Bioinformatics (also computational biology) is concerned with the informatics principles and applications of the storage, organization and analysis of biological data. The first pure bioinformatics applications were developed for DNA sequence analysis. This primarily involves the rapid detection of patterns in long DNA sequences and the solution of the problem of how to superimpose two or more similar sequences and align them against each other in such a way that the best possible match is achieved (sequence alignment). With the elucidation and far-reaching functional analysis of various complete genomes (e.g. the nematode Caenorhabditis elegans), the focus of bioinformatics work is shifting to questions of proteomics, such as the problem of protein folding and structure prediction, i.e. the question of secondary or tertiary structure for a given amino acid sequence.

- Biodiversity informatics involves the storage and processing of biodiversity information. While bioinformatics deals with nucleic acids and proteins, the objects of biodiversity informatics are taxa, biological collection evidence and observational data.

- Artificial life was established as an interdisciplinary research discipline in 1986. The simulation of natural life forms with software (soft artificial life) and hardware methods (hard artificial life) is one of the main goals of this discipline. Applications for artificial life today include synthetic biology, the health sector and medicine, ecology, autonomous robots, the transport and traffic sector, computer graphics, virtual societies and computer games.

- Chemoinformatics (also cheminformatics or chemiinformatics) is a branch of science that combines chemistry with computer science methods and vice versa. It deals with searching chemical space, which consists of virtual (in silico) or real molecules. The size of chemical space is estimated at about $10^{62}$ molecules, far more than the number of real molecules synthesized so far. Under certain circumstances, such computer methods therefore allow millions of molecules to be tested in silico without having to generate them explicitly by combinatorial chemistry or synthesis in the laboratory.
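The sequence alignment problem mentioned under bioinformatics is classically solved by dynamic programming. The C sketch below computes the optimal global alignment score with the Needleman-Wunsch algorithm. The scoring scheme (match +1, mismatch −1, gap −1) and the length limit `MAX_LEN` are simplifying assumptions; real tools use substitution matrices and affine gap penalties. The sketch fills an $(n+1) \times (m+1)$ table, so it takes $\mathcal{O}(nm)$ time.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_LEN  64     /* assumption: short sequences, fixed-size table */
#define MATCH     1     /* illustrative scoring scheme, not a biological one */
#define MISMATCH (-1)
#define GAP      (-1)

static int max3(int a, int b, int c) {
    int m = a > b ? a : b;
    return m > c ? m : c;
}

/* S[i][j] is the best score for aligning the first i symbols of x with the
   first j symbols of y. Stores the optimal score in *score; returns false
   if an input is too long. */
bool nw_score(const char *x, const char *y, int *score) {
    size_t n = strlen(x), m = strlen(y);
    if (n > MAX_LEN || m > MAX_LEN) return false;
    int S[MAX_LEN + 1][MAX_LEN + 1];
    for (size_t i = 0; i <= n; ++i) S[i][0] = (int)i * GAP;   /* x against gaps only */
    for (size_t j = 0; j <= m; ++j) S[0][j] = (int)j * GAP;   /* y against gaps only */
    for (size_t i = 1; i <= n; ++i)
        for (size_t j = 1; j <= m; ++j)
            S[i][j] = max3(S[i - 1][j - 1] + (x[i - 1] == y[j - 1] ? MATCH : MISMATCH),
                           S[i - 1][j] + GAP,     /* x[i-1] aligned to a gap */
                           S[i][j - 1] + GAP);    /* y[j-1] aligned to a gap */
    *score = S[n][m];
    return true;
}
```

For example, aligning `GAT` with `GT` scores 1 (G/G match, A against a gap, T/T match). Recovering the alignment itself would require a traceback through the table, which the sketch omits.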

Engineering informatics, mechanical engineering informatics

Engineering informatics, also known as computational engineering science, is an interdisciplinary field of study at the interface between the engineering sciences, mathematics and computer science. It is taught in departments of electrical engineering, mechanical engineering, process engineering and systems engineering.

The core of mechanical engineering informatics includes virtual product development (production informatics) by means of computer visualistics, as well as automation technology.

Business informatics, information management

→ Main article: Information Systems

Business information systems (also management information systems) is an "interface discipline" between computer science and economics, especially business administration. Because of these interfaces, it has developed into an independent science and can be studied at both business and computer science faculties. One focus of business information systems is the mapping of business processes and accounting in relational database systems and enterprise resource planning systems. The information engineering of information systems and information management play an important role in business information systems. The field was developed at the Furtwangen University of Applied Sciences as early as 1971. From 1974 onwards, the then TH Darmstadt, the Johannes Kepler University Linz and the Vienna University of Technology set up courses of study in Business Information Systems.

Socioinformatics

Socioinformatics is concerned with the effects of IT systems on society, e.g. how they support organizations and society in their organization, but also how society influences the development of socially embedded IT systems, be it as prosumers on collaborative platforms such as Wikipedia, or by means of legal restrictions to guarantee data security, for example.

Social Informatics

Social informatics is concerned on the one hand with IT operations in social organisations, and on the other hand with technology and informatics as an instrument of social work, such as Ambient Assisted Living.

Media Informatics

Media informatics focuses on the interface between humans and machines and combines computer science, psychology, ergonomics, media technology, media design and didactics.

Computational Linguistics

Computational linguistics investigates how natural language can be processed by computers. It is a subfield of artificial intelligence, but also an interface between applied linguistics and applied computer science. Related to this is the term cognitive science, which is a separate interdisciplinary branch of science that combines linguistics, computer science, philosophy, psychology and neurology, among others. Application areas of computational linguistics are speech recognition and synthesis, automatic translation into other languages and information extraction from texts.

Environmental informatics, geoinformatics

Environmental informatics deals with the interdisciplinary analysis and evaluation of environmental issues using computer science. The focus is on the use of simulation programs, geographic information systems (GIS) and database systems. Geoinformatics is the study of the nature and function of geoinformation, its provision in the form of geodata, and the applications based on it. It forms the scientific basis for geoinformation systems (GIS). Common to all applications of geoinformatics is the spatial reference and, in some cases, its mapping into Cartesian spatial or planar representations in the reference system.

Other computer science disciplines

Further interfaces between computer science and other disciplines exist in medical informatics, logistics informatics, nursing informatics, legal informatics, information management (administrative informatics, business informatics), architectural informatics (construction informatics), agricultural informatics, archaeoinformatics and sports informatics. They also exist in new interdisciplinary directions such as neuromorphic engineering. Collaboration with mathematics or electrical engineering is not usually called interdisciplinary because these fields are so closely related to computer science. Didactics of computer science is concerned with computer science teaching, especially in schools. Elementary computer science deals with teaching basic computer science concepts in preschool and elementary school.

Artificial intelligence

→ Main article: Artificial intelligence

Artificial intelligence (AI) is a large subfield of computer science with strong influences from logic, linguistics, neurophysiology and cognitive psychology. At the same time, the methodology of AI differs in part considerably from that of classical computer science. Instead of being given a complete description of the solution, the computer is left to find the solution itself. AI methods are used in expert systems, sensor technology and robotics.

The understanding of the term "artificial intelligence" often reflects the Enlightenment notion of man as a machine, which the so-called "strong AI" aims to imitate: to create an intelligence that can think and solve problems like humans and that is characterized by a form of consciousness or self-awareness as well as emotions.

This approach has been implemented in expert systems, which essentially collect, manage and apply a large number of rules on a given subject (hence "expert").
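Forward chaining can sketch the rule-based approach of expert systems. Starting from known facts, the system repeatedly fires every rule whose conditions are satisfied and adds its conclusion, until nothing new can be derived. The two-rule knowledge base in the C sketch below is a hypothetical toy, not real expert knowledge; practical expert systems may hold thousands of rules and use more efficient rule matching.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical facts, numbered 0 .. NFACTS-1. */
enum { HAS_FEATHERS, LAYS_EGGS, CAN_FLY, IS_BIRD, IS_FLYING_BIRD, NFACTS };

/* A rule: if both premises are known, the conclusion becomes known. */
typedef struct { int if1, if2, then; } Rule;

static const Rule kb[] = {
    { HAS_FEATHERS, LAYS_EGGS, IS_BIRD },         /* toy rule 1 */
    { IS_BIRD,      CAN_FLY,   IS_FLYING_BIRD },  /* toy rule 2 */
};

/* Forward chaining: fire applicable rules until no new fact is derived. */
void infer(bool known[NFACTS]) {
    bool changed = true;
    while (changed) {
        changed = false;
        for (size_t i = 0; i < sizeof kb / sizeof kb[0]; ++i) {
            const Rule *r = &kb[i];
            if (known[r->if1] && known[r->if2] && !known[r->then]) {
                known[r->then] = true;
                changed = true;
            }
        }
    }
}
```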

In contrast to strong AI, "weak AI" is concerned with mastering concrete application problems. Of particular interest are those applications for whose solution, according to general understanding, some form of "intelligence" seems to be necessary. Ultimately, weak AI is thus concerned with simulating intelligent behaviour using the tools of mathematics and computer science; it is not concerned with creating consciousness or with a deeper understanding of intelligence. An example from weak AI is fuzzy logic.
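Fuzzy logic replaces the two truth values false and true by degrees of truth between 0 and 1. The C sketch below uses a triangular membership function and the minimum, maximum and complement operators, a common textbook choice attributed to Zadeh. Other operator definitions exist, and all names here are illustrative.

```c
/* Degree (0..1) to which x belongs to a fuzzy set shaped as a triangle
   rising from a to a peak at b and falling back to zero at c. */
double membership_triangle(double x, double a, double b, double c) {
    if (x <= a || x >= c) return 0.0;        /* outside the fuzzy set */
    if (x <= b) return (x - a) / (b - a);    /* rising edge, 1.0 at the peak */
    return (c - x) / (c - b);                /* falling edge */
}

double fuzzy_and(double p, double q) { return p < q ? p : q; }   /* minimum */
double fuzzy_or(double p, double q)  { return p > q ? p : q; }   /* maximum */
double fuzzy_not(double p)           { return 1.0 - p; }         /* complement */
```

Suppose "warm" is modelled by `membership_triangle(t, 15, 25, 35)`, an assumed definition in °C. Then 20 °C is warm to degree 0.5. For a humidity degree of 0.8, "warm AND humid" evaluates to `fuzzy_and(0.5, 0.8)`, i.e. 0.5.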

Neural networks also belong to this category: since the early 1980s, the information architecture of the (human or animal) brain has been analysed under this term. Modelling in the form of artificial neural networks illustrates how complex pattern recognition can be performed by a very simple basic structure. At the same time, it becomes clear that this kind of learning is not based on deriving logically or linguistically formulable rules. Thus, for example, the special abilities of the human brain within the animal kingdom cannot be reduced to a rule- or language-based concept of "intelligence". The implications of these insights for AI research, but also for learning theory, didactics and other fields, are still under discussion.
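A single artificial neuron, the perceptron, shows how learning can arise from a very simple basic structure. The C sketch below trains one with the classic perceptron learning rule. Integer weights, a learning rate of 1 and the bias term are simplifying assumptions, and the names are illustrative. The learned weights are just numbers: nothing in them is a logically formulated rule.

```c
typedef struct { int w1, w2, bias; } Perceptron;

/* Threshold unit: fires (1) iff the weighted input sum exceeds zero. */
int perceptron_output(const Perceptron *p, int x1, int x2) {
    return p->w1 * x1 + p->w2 * x2 + p->bias > 0 ? 1 : 0;
}

/* Perceptron learning rule: after each misclassified sample, shift the
   weights towards the target. Returns the number of epochs needed to
   classify all n samples correctly, or -1 if max_epochs is exceeded. */
int perceptron_train(Perceptron *p, const int *x1, const int *x2,
                     const int *target, int n, int max_epochs) {
    for (int epoch = 1; epoch <= max_epochs; ++epoch) {
        int errors = 0;
        for (int i = 0; i < n; ++i) {
            int err = target[i] - perceptron_output(p, x1[i], x2[i]);
            if (err != 0) {
                p->w1 += err * x1[i];
                p->w2 += err * x2[i];
                p->bias += err;
                ++errors;
            }
        }
        if (errors == 0) return epoch;
    }
    return -1;
}
```

Trained on the truth table of logical AND, the perceptron converges to correct weights within a few epochs. On XOR it never converges, because no single straight line separates XOR's outputs. Multi-layer networks overcome this limitation.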

While strong AI has so far failed to answer its philosophical questions, progress has been made in weak AI.

Informatics and Society

→ Main article: Computer science and society

"Informatics and Society" (IuG) is a subfield of the science of informatics and researches the role of informatics on the way to the information society. The interactions of computer science investigated in this context encompass a wide variety of aspects. Starting from historical, social, cultural questions, this concerns economic, political, ecological, ethical, didactic and of course technical aspects. The emerging globally networked information society is seen as a central challenge for computer science, in which it plays a defining role as a basic technical science and must reflect this. IuG is characterized by the fact that an interdisciplinary approach is necessary, especially with the humanities, but also, for example, with law.

A Kohonen map (self-organizing map) during learning

Home Router

Range of effects of technical computer science

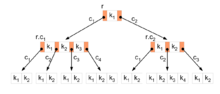

Sketch of a B-tree

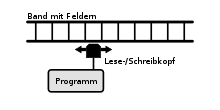

1-tape Turing machine

Pushdown automaton

A DFA, given as a state transition diagram

Alan Turing memorial statue in Sackville Park in Manchester

See also

Portal: Computer science - Overview of Wikipedia content on the topic Computer science.

- List of important persons for computer science

- Society for Computer Science, Austrian Computer Society, Swiss Computer Science Society

- Computer Science Studies

Questions and Answers

Q: What is computer science?

A: Computer science is the study of how to manipulate, manage, transform, and encode information.

Q: What are some areas of computer science?

A: There are many different areas in computer science, including artificial intelligence, algorithms, computer architecture, databases, networks, programming languages, and software engineering.

Q: Why is mathematics important in computer science?

A: Mathematics is important in computer science because it provides the computational skills necessary to solve complex problems and develop algorithms.

Q: What skills are required to work with computers?

A: In order to work with computers, a person will often need mathematics, science, and logic skills in order to design, program, and maintain computer systems.

Q: What is the role of logic in computer science?

A: Logic is important in computer science because it helps to ensure that computer programs and systems operate correctly and efficiently.

Q: What are some examples of special machines used by computer scientists?

A: Some areas of computer science require special machines, such as supercomputers, quantum computers, and robots.

Q: How is information transformed in computer science?

A: In computer science, information can be manipulated, managed, transformed, and encoded through various techniques and processes, such as algorithms, data structures, and programming languages.
