Computer memory


This article explains computer technology; for the figurative meaning in information psychology, see short memory capacity.

The working memory or main memory of a computer (also core, main store, primary memory, or RAM for random-access memory) is the memory that holds the programs, or parts of programs, that are currently being executed, together with the data they require. Main memory is part of the computer's central unit, together with the processor. Since the processor accesses main memory directly, the performance and size of main memory significantly influence the performance of the entire computing system.

Working memory is characterized by its access time (or access speed), the associated data transfer rate, and its capacity. The access time is the delay until requested data can be read. The data transfer rate specifies the amount of data that can be read per unit of time. Separate figures may be given for read and write operations. Main memory size is written in one of two notations, depending on the number base used: either in base 10 (decimal prefix, SI notation; 1 kByte or kB = 10^3 bytes = 1000 bytes) or in base 2 (binary prefix, IEC notation; 1 KiB = 2^10 bytes = 1024 bytes). Because main memory is structured and addressed in binary (byte-addressed in 8-bit units, word-addressed in 16-bit units, double-word-addressed in 32-bit units, and so on), the latter is the more common form, and it also avoids fractional values.
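The difference between the two notations can be illustrated with a short Python sketch. The function names and the selection of prefixes are chosen here for illustration only; the factors themselves follow the SI and IEC definitions given above.

```python
# Decimal (SI) prefixes are powers of 10, binary (IEC) prefixes are powers of 2.
SI_PREFIXES = {"kB": 10**3, "MB": 10**6, "GB": 10**9}
IEC_PREFIXES = {"KiB": 2**10, "MiB": 2**20, "GiB": 2**30}


def to_bytes(value, unit):
    """Convert a size such as (8, "GiB") into a number of bytes."""
    factor = {**SI_PREFIXES, **IEC_PREFIXES}[unit]
    return value * factor


def gap_percent(si_unit, iec_unit):
    """How much larger the binary unit is than the decimal unit, in percent."""
    return (to_bytes(1, iec_unit) / to_bytes(1, si_unit) - 1) * 100


if __name__ == "__main__":
    print(to_bytes(1, "kB"))                   # 1000
    print(to_bytes(1, "KiB"))                  # 1024
    print(to_bytes(8, "GiB"))                  # 8589934592
    print(round(gap_percent("GB", "GiB"), 1))  # 7.4
```

The gap between the two notations grows with each prefix step: it is 2.4 percent between kB and KiB, but already about 7.4 percent between GB and GiB.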

Main memory that is addressed via the processor's address bus, or that is integrated directly into the processor, is referred to as physical memory. More modern processors and operating systems can use virtual memory management to provide more working memory than is physically present, by moving parts of the address space to other storage media (for example a swap file, page file or swap space). This additional memory is called virtual memory. To speed up memory access, whether physical or virtual, additional buffer memories (caches) are used today.


Modern DDR4 SDRAM module with heatspreader, usually installed in gaming or desktop PCs

Basics

The working memory of a computer is an area structured by addresses (like a table), in which each address holds a binary word of fixed size. Because addresses are binary, working memory practically always has a "binary" size (a power of 2), since otherwise parts of the address range would remain unused.
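Why sizes tend to be powers of 2 follows directly from the address width. The sketch below is only an illustration: the function names are made up for this example, and the 48,000-word memory is a hypothetical figure.

```python
def addressable_words(address_bits):
    """Number of distinct addresses that an address of this width can select."""
    return 2 ** address_bits


def unused_addresses(address_bits, installed_words):
    """Addresses that exist but have no memory word behind them."""
    return addressable_words(address_bits) - installed_words


def is_power_of_two(n):
    """True if n is a 'binary' size such as 1024 or 65536."""
    return n > 0 and (n & (n - 1)) == 0


if __name__ == "__main__":
    print(addressable_words(16))         # 65536
    print(addressable_words(32))         # 4294967296
    # A hypothetical 48,000-word memory behind a 16-bit address:
    print(unused_addresses(16, 48_000))  # 17536
    print(is_power_of_two(48_000))       # False
    print(is_power_of_two(65_536))       # True
```

With 16 address bits, only a memory of exactly 65,536 words uses every address; any smaller size leaves part of the address range empty.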

The main memory of modern computers is volatile: all data is lost when the power supply is switched off. The main reason is the DRAM technology used. Available alternatives such as MRAM are still too slow for use as main memory. For this reason, computers also contain permanent storage in the form of hard disks or SSDs, on which the operating system, application programs and files are retained when the power is switched off.

The most common form of main memory in computers is the memory module, and several types of RAM must be distinguished. In the 1980s, memory in the form of ZIP, SIPP or DIP modules was still common; in the 1990s, SIMMs with FPM or EDO RAM predominated. Today, computers primarily use DIMMs with, for example, SDRAM, DDR SDRAM, DDR2 SDRAM, DDR3 SDRAM or DDR4 SDRAM.

1 MiB of memory in a 286 in the form of ZIP modules

Different types of main memory (from left to right) SIMM 1992, SDRAM 1997, DDR-SDRAM 2001, DDR2-SDRAM 2008

History

The first computers had no working memory, only a few registers, which were built with the same technology as the arithmetic unit, i.e. tubes or relays. Programs were hardwired ("plugged in") or stored on other media, such as punched tape or punched cards, and were executed directly after being read.

"In 2nd generation computing systems, drum memories served as the main memory" (Dworatschek). In the early days, experiments were also carried out with rather exotic approaches, such as delay-line memories in mercury baths or in glass rod spirals (fed with ultrasonic waves). Later, magnetic core memories were introduced, which stored information in small ferrite cores. The cores were strung in a cross-shaped matrix, with one address line and one word line crossing in the middle of each core. The memory was non-volatile, but reading a core destroyed its information, so the drive logic immediately wrote it back. As long as the memory was neither written to nor read from, no current flowed. Core memory is several orders of magnitude bulkier and more expensive to manufacture than modern semiconductor memory.

Typical mainframes of the mid-1960s were equipped with 32 to 64 kilobytes of main memory (for example, the IBM 360-20 or 360-30); those of the late 1970s (for example, the Telefunken TR 440) had 192,000 words of 52 bits each (48 bits net), or over 1 megabyte.
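The "over 1 megabyte" figure for the TR 440 can be checked with a little arithmetic. The sketch below assumes 8-bit bytes purely as a unit of conversion (the machine itself is described in words, not bytes); the word count and word widths are those given in the text.

```python
WORDS = 192_000  # number of words, as given in the text
GROSS_BITS = 52  # full word width
NET_BITS = 48    # usable (net) bits per word


def total_bytes(words, bits_per_word):
    """Total capacity expressed in 8-bit bytes (conversion unit assumed here)."""
    return words * bits_per_word // 8


if __name__ == "__main__":
    gross = total_bytes(WORDS, GROSS_BITS)
    net = total_bytes(WORDS, NET_BITS)
    print(gross)          # 1248000
    print(net)            # 1152000
    print(net > 10**6)    # True: more than 1 MB in decimal notation
    print(net > 2**20)    # True: more than 1 MiB in binary notation
```

Even counting only the 48 net bits per word, the capacity exceeds one megabyte in both the decimal and the binary notation.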

Taken as a whole, the core memory provided enough space, in addition to the operating system, to first load the program to be executed from an external medium into main memory and to hold all of its data. In this model, programs and data reside in the same memory from the processor's point of view; this introduced the Von Neumann architecture, which is the most common today.

With the introduction of microelectronics, working memory was increasingly implemented with integrated circuits (chips). Each bit was stored in a bistable switch (flip-flop), which requires at least two transistors, or up to six with control logic, and consumes a relatively large chip area. Such memories draw power continuously. Typical sizes were integrated circuits (ICs) of 1 Kibit, with eight ICs addressed together. Access times were a few hundred nanoseconds, faster than the cycle time of processors clocked at around one megahertz. On the one hand, this allowed the introduction of processors with very few registers, such as the MOS Technology 6502 or the TMS9900 from Texas Instruments, which performed most of their calculations in main memory. On the other hand, it made it possible to build home computers whose video logic used part of the main memory as screen memory and could access it in parallel with the processor.

At the end of the 1970s, dynamic random-access memories (DRAM) were developed that store each bit in a capacitor and require only one additional field-effect transistor per bit. They can be built very small and require very little power. However, the capacitor slowly loses its charge, so the information has to be rewritten at intervals of a few milliseconds. This is done by external logic that periodically reads the memory and writes it back (refresh). Thanks to higher integration in the 1980s, this refresh logic could be built cheaply and integrated into the processor. Typical sizes in the mid-1980s were 64 Kibit per IC, with eight chips addressed together.
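What "eight chips addressed together" means can be sketched in Python. The model below assumes that each IC is one bit wide, so that chip i supplies bit i of every byte; the text does not state the chip organisation, and the class names are invented for this illustration.

```python
class BitChip:
    """A memory IC modelled as a row of one-bit cells (assumed 1-bit-wide chip)."""

    def __init__(self, bits):
        self.cells = [0] * bits


class Bank:
    """Eight chips that receive the same address; chip i holds bit i of each byte."""

    def __init__(self, bits_per_chip, chips=8):
        self.chips = [BitChip(bits_per_chip) for _ in range(chips)]

    def capacity_bytes(self):
        # Every address selects one cell in each chip, so eight chips yield one byte.
        return len(self.chips[0].cells) * len(self.chips) // 8

    def write(self, address, value):
        # Spread the bits of the byte across the chips.
        for i, chip in enumerate(self.chips):
            chip.cells[address] = (value >> i) & 1

    def read(self, address):
        # Collect one bit from each chip and reassemble the byte.
        return sum(chip.cells[address] << i for i, chip in enumerate(self.chips))


if __name__ == "__main__":
    bank = Bank(64 * 1024)        # eight ICs of 64 Kibit each
    print(bank.capacity_bytes())  # 65536
    bank.write(5, 0xA7)
    print(hex(bank.read(5)))      # 0xa7
```

Under this assumption, eight 64 Kibit chips form 64 KiB of byte-addressable memory, and eight 1 Kibit chips form 1 KiB.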

The access times of dynamic RAMs were likewise a few hundred nanoseconds for inexpensive designs and have changed little since then, whereas sizes have grown to a few Gbit per chip. Processors are now clocked in the gigahertz range rather than the megahertz range. To reduce the average access time, caches are therefore used, and both the clock rate and the width of the connection between main memory and processor have been increased (see Front Side Bus).
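The effect of faster clocks on memory access, and the benefit of a cache, can be estimated with a simple model. All figures below (100 ns memory access, 1 ns cache access, 95 percent hit rate, 3 GHz clock) are hypothetical values chosen for illustration, and the weighted-average formula is a common simplification, not a description of any particular system.

```python
def cycles_per_access(access_time_ns, clock_mhz):
    """How many processor clock cycles pass during one memory access."""
    return access_time_ns * clock_mhz / 1000


def average_access_time(hit_rate, cache_ns, memory_ns):
    """Simplified average: hits are served by the cache, misses by main memory."""
    return hit_rate * cache_ns + (1 - hit_rate) * memory_ns


if __name__ == "__main__":
    # The same 100 ns access at 1 MHz and at 3 GHz (3000 MHz):
    print(cycles_per_access(100, 1))     # 0.1
    print(cycles_per_access(100, 3000))  # 300.0
    # Hypothetical cache: 1 ns access time, 95 percent of accesses are hits.
    print(round(average_access_time(0.95, 1, 100), 2))  # 5.95
```

In this model, a memory access that fits comfortably into one cycle at 1 MHz costs hundreds of cycles at 3 GHz, while the cache brings the average access time down from 100 ns to about 6 ns.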

In June 2012, it was announced that a new, smaller and more powerful design for main memory was to be developed, the so-called Hybrid Memory Cube (HMC), which uses a stack of several dies. The Hybrid Memory Cube Consortium was founded specifically for this purpose; its members include ARM, Hewlett-Packard and Hynix.

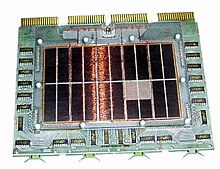

Magnetic core memory element, around 1971, capacity 16 KiB

Working memory in the form of an IC on an SDRAM module

Questions and Answers

Q: What is computer memory?

A: Computer memory is a temporary storage area that holds data and instructions for the Central Processing Unit (CPU) to access.

Q: How does a program run?

A: Before a program can run, it must be loaded from storage into the memory so that the CPU has direct access to it.

Q: What is binary digital electronics?

A: In binary digital electronics, transistors switch electricity on and off in a computer, creating two states: on or off, zero or one.

Q: What are bits and bytes?

A: A single on/off setting in the computer's memory is called a binary digit or bit. A group of eight bits is called a byte.

Q: Where did the words bit and byte come from?

A: The words bit and byte were made up by computer scientists - "bit" comes from combining "bi" from binary with "t" from digit, while "byte" was changed from "bite" to avoid confusion.

Q: What is a nibble?

A: A nibble is half of a byte and consists of four bits. It was given this name because it was thought of as half of a bite.
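As a small illustration, a byte can be split into its two nibbles with a shift and a mask. The function names below are chosen for this example only.

```python
def split_nibbles(byte):
    """Return the (high, low) four-bit halves of an eight-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("a byte must be between 0 and 255")
    return byte >> 4, byte & 0x0F


def join_nibbles(high, low):
    """Combine two four-bit values back into one byte."""
    return (high << 4) | low


if __name__ == "__main__":
    print(format(0xA7, "08b"))   # 10100111
    print(split_nibbles(0xA7))   # (10, 7)
    print(join_nibbles(10, 7))   # 167
```

Each nibble corresponds to exactly one hexadecimal digit, which is why the byte 0xA7 splits into the values 10 (A) and 7.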

Q: Who came up with the word nibble?

A: The word nibble was created by computer scientists when they needed an appropriate term for half of a byte.
