Binomial distribution

The binomial distribution is one of the most important discrete probability distributions.

It describes the number of successes in a series of similar and independent experiments, each of which has exactly two possible outcomes ("success" or "failure"). Such series of experiments are also called Bernoulli processes.

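If $p$ is the probability of success in a single trial and $n$ is the number of trials, then $B(k \mid p, n)$ denotes the probability of obtaining exactly $k$ successes (see the section Definition).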

The binomial distribution and the Bernoulli trial can be illustrated with a Galton board, a mechanical apparatus into which balls are dropped. Each ball falls randomly into one of several compartments, and the distribution of the balls across the compartments follows a binomial distribution. Depending on the construction, different parameters $n$ and $p$ are possible.

Although the binomial distribution was known long before, the term was first used in 1911 in a book by George Udny Yule.

[Figure: binomial distributions for several parameter values; the caption refers to the probability that a ball lands in a given compartment of a Galton board with eight levels.]

Examples

The probability of rolling a number greater than 2 with a normal die is $p = 4/6 = 2/3$; the probability that this does not happen is $q = 1 - p = 1/3$.

The process described by the binomial distribution is often illustrated by a so-called urn model. Suppose an urn contains 6 balls, 2 of them black and the other 4 white. Ten times in a row, draw one ball, note its color, and put the ball back. In one interpretation of this process, drawing a white ball is understood as a "positive event" with probability $p = 4/6 = 2/3$, and drawing a black ball as a "negative event" with probability $q = 1/3$.
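The urn experiment can be imitated with a short simulation. The following is only a sketch under the assumptions stated in the text (4 white and 2 black balls, 10 draws with replacement); the function name `count_white_draws`, the seed and the number of repetitions are chosen here for illustration.

```python
import random

def count_white_draws(n_draws, n_white, n_black, rng):
    """Draw n_draws balls with replacement and count the white ones."""
    urn = ["white"] * n_white + ["black"] * n_black
    return sum(rng.choice(urn) == "white" for _ in range(n_draws))

rng = random.Random(12345)  # fixed seed so that the run is reproducible
runs = 20_000               # hypothetical number of repetitions
counts = [count_white_draws(10, n_white=4, n_black=2, rng=rng) for _ in range(runs)]

mean_white = sum(counts) / runs
print(f"average number of white balls in 10 draws: {mean_white:.3f}")
print(f"n * p = {10 * 4 / 6:.3f}")
```

The simulated average should lie close to $n \cdot p = 10 \cdot 2/3$, the expected number of "positive events".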

Definition

Probability function, (cumulative) distribution function, properties

The discrete probability distribution with the probability function

$$B(k \mid p, n) = \binom{n}{k} p^{k} (1-p)^{n-k}, \qquad k = 0, 1, \dotsc, n,$$

is called the binomial distribution for the parameters $n \in \mathbb{N}$ (number of trials) and $p \in [0, 1]$ (success probability).

Note: This formula uses the convention $0^0 = 1$, so that the cases $p = 0$ and $p = 1$ are covered as well.

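The above formula can be understood like this: in a total of $n$ trials we need exactly $k$ successes, which has probability $p^k$, and consequently exactly $n-k$ failures, which has probability $(1-p)^{n-k}$. However, the $k$ successes can occur at any of the $n$ trials, so we still have to multiply by the number $\binom{n}{k}$ of $k$-element subsets of an $n$-element set.

The failure probability $1-p$, complementary to the success probability $p$, is often abbreviated as $q$.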

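As necessary for a probability distribution, the probabilities for all possible values $k$ sum to 1. This follows from the binomial theorem:

$$\sum_{k=0}^{n} \binom{n}{k} p^{k} (1-p)^{n-k} = \bigl(p + (1-p)\bigr)^{n} = 1.$$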

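A random variable $X$ distributed according to this probability function is called binomially distributed with the parameters $n$ and $p$. Its distribution function is

$$F_X(x) = \operatorname{P}(X \le x) = \sum_{k=0}^{\lfloor x \rfloor} \binom{n}{k} p^{k} (1-p)^{n-k},$$

where $\lfloor x \rfloor$ denotes the floor function.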
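The probability function and the distribution function can be written down almost literally in code. The following sketch uses only the Python standard library; the names `binom_pmf` and `binom_cdf` are chosen here for illustration.

```python
from math import comb, floor

def binom_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    if k < 0 or k > n:
        return 0.0
    # Python evaluates 0.0 ** 0 as 1.0, which matches the convention 0^0 = 1.
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def binom_cdf(x, n, p):
    """Probability of at most x successes; x may be any real number."""
    return sum(binom_pmf(k, n, p) for k in range(0, min(floor(x), n) + 1))

print(sum(binom_pmf(k, 5, 0.2) for k in range(6)))  # total probability
print(binom_cdf(2.7, 5, 0.2))                       # P(X <= 2) for n = 5, p = 0.2
```

Summing the probability function over all $k$ gives 1 up to rounding, as required for a probability distribution.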

Other common notations of the cumulative binomial distribution are

Derivation as Laplace probability

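Experiment scheme: An urn contains $N$ balls, of which $M$ are black and $N-M$ are white. The probability of drawing a black ball is therefore $p = M/N$. A ball is drawn at random $n$ times in succession; each time its color is noted and the ball is put back.

We calculate the number of possibilities in which exactly $k$ black balls are drawn, and from this the so-called Laplace probability (the number of favorable outcomes divided by the total number of equally likely outcomes).

For each of the $n$ draws there are $N$ possibilities, so there are $N^n$ possible sequences of draws in total. For exactly $k$ of the $n$ drawn balls to be black, there are $M$ possibilities for each black ball and $N-M$ possibilities for each white ball, and the $k$ black balls can be distributed over the $n$ draws in $\binom{n}{k}$ ways. So there are

$$\binom{n}{k} M^{k} (N-M)^{n-k}$$

cases where exactly $k$ black balls are drawn. The probability of finding exactly $k$ black balls among the $n$ draws is therefore

$$\frac{\binom{n}{k} M^{k} (N-M)^{n-k}}{N^{n}} = \binom{n}{k} p^{k} (1-p)^{n-k}.$$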
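The counting argument can be checked by brute force for a small urn. The sketch below assumes an urn with `N` balls of which `M` are black and `n` draws with replacement; the concrete values are hypothetical and kept small so that all $N^n$ sequences can be enumerated.

```python
from itertools import product
from math import comb

N, M, n = 3, 1, 4  # hypothetical urn: 3 balls, 1 of them black, 4 draws
balls = ["black"] * M + ["white"] * (N - M)

# Count the sequences of n draws (with replacement) that contain k black balls.
favorable = [0] * (n + 1)
for sequence in product(range(N), repeat=n):
    k = sum(balls[i] == "black" for i in sequence)
    favorable[k] += 1

for k in range(n + 1):
    by_formula = comb(n, k) * M**k * (N - M) ** (n - k)
    print(k, favorable[k], by_formula, favorable[k] / N**n)
```

The enumerated counts agree with $\binom{n}{k} M^k (N-M)^{n-k}$, and dividing by $N^n$ gives the Laplace probability.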

Properties

Symmetry

- The binomial distribution is symmetric in the special cases $p = 0$, $p = 0.5$ and $p = 1$, and asymmetric otherwise.

- The binomial distribution has the property $B(k \mid p, n) = B(n-k \mid 1-p, n)$.

Expected value

The binomial distribution has the expected value $\operatorname{E}(X) = np$.

Proof

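The expected value $\mu$ can be computed directly from the definition $\mu = \sum_{k=0}^{n} k \, B(k \mid p, n)$ and the binomial theorem:

$$\mu = \sum_{k=0}^{n} k \binom{n}{k} p^{k} (1-p)^{n-k} = np \sum_{k=1}^{n} \binom{n-1}{k-1} p^{k-1} (1-p)^{(n-1)-(k-1)} = np \bigl(p + (1-p)\bigr)^{n-1} = np.$$

Alternatively, use that a binomially distributed random variable $X$ can be written as a sum of $n$ independent Bernoulli distributed random variables $X_i$ with $\operatorname{E}(X_i) = p$. By the linearity of the expected value,

$$\operatorname{E}(X) = \operatorname{E}(X_1 + \dotsb + X_n) = \operatorname{E}(X_1) + \dotsb + \operatorname{E}(X_n) = np.$$

Alternatively, one can also give the following proof using the binomial theorem: differentiating both sides of the equation

$$(a+b)^{n} = \sum_{k=0}^{n} \binom{n}{k} a^{k} b^{n-k}$$

with respect to $a$ gives

$$n (a+b)^{n-1} = \sum_{k=0}^{n} k \binom{n}{k} a^{k-1} b^{n-k},$$

so

$$n a (a+b)^{n-1} = \sum_{k=0}^{n} k \binom{n}{k} a^{k} b^{n-k}.$$

With $a = p$ and $b = 1-p$, the desired result follows.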

Variance

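The binomial distribution has variance $\operatorname{Var}(X) = np(1-p)$.

Proof

Let $X$ be a binomially distributed random variable with the parameters $n$ and $p$. The variance can be determined directly from the shift theorem $\operatorname{Var}(X) = \operatorname{E}(X^2) - (\operatorname{E}(X))^2$ or, alternatively, from Bienaymé's equation applied to the variance of independent random variables, considering that the identical individual processes $X_i$ each follow a Bernoulli distribution with $\operatorname{Var}(X_i) = p(1-p)$:

$$\operatorname{Var}(X) = \operatorname{Var}(X_1 + \dotsb + X_n) = \operatorname{Var}(X_1) + \dotsb + \operatorname{Var}(X_n) = n \operatorname{Var}(X_1) = np(1-p).$$

The second equality holds because the individual experiments are independent, so the individual variables are uncorrelated.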

Coefficient of variation

From the expected value and variance one obtains the coefficient of variation

$$\frac{\sqrt{\operatorname{Var}(X)}}{\operatorname{E}(X)} = \sqrt{\frac{1-p}{np}}.$$

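Skewness

The skewness is

$$\operatorname{v}(X) = \frac{1-2p}{\sqrt{np(1-p)}}.$$

Kurtosis

The kurtosis can also be written in closed form as

$$\beta_2 = 3 + \frac{1 - 6pq}{npq}, \qquad q = 1 - p.$$

Thus the excess is

$$\gamma_2 = \frac{1 - 6pq}{npq}.$$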
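The standard closed forms for expected value, variance, skewness and excess ($np$, $npq$, $(1-2p)/\sqrt{npq}$ and $(1-6pq)/(npq)$ with $q = 1-p$) can be cross-checked by summing directly over the probability function. This is a numerical sanity check for one hypothetical parameter pair, not a proof; the helper names are illustrative.

```python
from math import comb, sqrt

def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def central_moment(order, n, p):
    """Central moment of the given order, summed over the probability function."""
    mean = sum(k * binom_pmf(k, n, p) for k in range(n + 1))
    return sum((k - mean) ** order * binom_pmf(k, n, p) for k in range(n + 1))

n, p = 12, 0.3  # hypothetical parameters
q = 1 - p
mean = sum(k * binom_pmf(k, n, p) for k in range(n + 1))
variance = central_moment(2, n, p)
skewness = central_moment(3, n, p) / variance**1.5
excess = central_moment(4, n, p) / variance**2 - 3

print("mean    ", mean, n * p)
print("variance", variance, n * p * q)
print("skewness", skewness, (1 - 2 * p) / sqrt(n * p * q))
print("excess  ", excess, (1 - 6 * p * q) / (n * p * q))
```

Each line prints the numerically summed value next to the closed-form value; the two should agree up to rounding.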

Mode

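The mode, i.e. the value with the maximum probability, is $k = \lfloor np + p \rfloor$ for $p < 1$ and $k = n$ for $p = 1$. If $np + p$ is a natural number, then $k = np + p - 1$ is also a mode. If the expected value is a natural number, the expected value is equal to the mode.

Proof

Let $0 < p < 1$ without loss of generality. We consider the quotient

$$\alpha_k := \frac{B(k+1 \mid p, n)}{B(k \mid p, n)} = \frac{n-k}{k+1} \cdot \frac{p}{1-p}.$$

Now $\alpha_k > 1$ if $k < np + p - 1$, and $\alpha_k < 1$ if $k > np + p - 1$. So the probabilities increase as long as $k < np + p - 1$ and decrease once $k > np + p - 1$.

And only in the case $np + p - 1 \in \mathbb{N}$ does the quotient take the value 1, i.e. $B(np + p - 1 \mid p, n) = B(np + p \mid p, n)$.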

Median

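It is not possible to give a general formula for the median of the binomial distribution. Therefore, different cases have to be considered, each of which provides a suitable median:

- If $np$ is a natural number, then the expected value, median, and mode agree and are equal to $np$.

- A median $m$ lies in the interval $\lfloor np \rfloor \le m \le \lceil np \rceil$. Here, $\lfloor \cdot \rfloor$ denotes the floor (rounding down) function and $\lceil \cdot \rceil$ the ceiling (rounding up) function.

- A median $m$ cannot deviate too much from the expected value: $|m - np| \le \min\{\ln 2, \max\{p, 1-p\}\}$.

- The median is unique and coincides with $m = \operatorname{round}(np)$ if either $p \le 1 - \ln 2$ or $p \ge \ln 2$ or $|m - np| \le \min\{p, 1-p\}$ (except when $p = 1/2$ and $n$ is odd).

- If $p = 1/2$ and $n$ is odd, then every number $m$ in the interval $\tfrac{1}{2}(n-1) \le m \le \tfrac{1}{2}(n+1)$ is a median of the binomial distribution with the parameters $p$ and $n$. If $p = 1/2$ and $n$ is even, then $m = n/2$ is the unique median.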
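Because no general formula exists, a median is usually found by accumulating probabilities. The sketch below returns the smallest integer $m$ with $\operatorname{P}(X \le m) \ge 1/2$ and compares it with the standard bounds $\lfloor np \rfloor$ and $\lceil np \rceil$ for a few hypothetical parameter pairs; the function names are illustrative.

```python
from math import ceil, comb, floor

def binom_cdf(m, n, p):
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(m + 1))

def smallest_median(n, p):
    """Smallest integer m with P(X <= m) >= 1/2."""
    for m in range(n + 1):
        if binom_cdf(m, n, p) >= 0.5:
            return m
    return n

cases = [(10, 0.3), (7, 0.45), (25, 0.8), (9, 0.5)]  # hypothetical parameters
medians = {(n, p): smallest_median(n, p) for n, p in cases}
for (n, p), m in medians.items():
    print(f"n={n}, p={p}: median {m}, bounds {floor(n * p)}..{ceil(n * p)}")
```

For $p = 1/2$ and odd $n$ the function reports only the lower end of the interval of medians.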

Cumulants

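Analogous to the Bernoulli distribution, the cumulant generating function is

$$K_X(t) = n \ln\bigl(p e^{t} + q\bigr), \qquad q = 1 - p.$$

Thus, the first cumulants are $\kappa_1 = np$ and $\kappa_2 = npq$, and the recursion

$$\kappa_{k+1} = p(1-p) \frac{d\kappa_k}{dp}$$

holds.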

Characteristic function

The characteristic function has the form

$$\varphi_X(s) = \bigl((1-p) + p e^{\mathrm{i}s}\bigr)^{n}.$$

Probability generating function

For the probability generating function we get

$$G_X(s) = \bigl(ps + (1-p)\bigr)^{n}.$$

Moment generating function

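The moment generating function of the binomial distribution is

$$m_X(s) = \operatorname{E}\bigl(e^{sX}\bigr) = \sum_{k=0}^{n} e^{sk} \binom{n}{k} p^{k} (1-p)^{n-k} = \bigl(p e^{s} + (1-p)\bigr)^{n}.$$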

Sum of binomial distributed random variables

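For the sum $Z = X + Y$ of two independent binomially distributed random variables $X$ and $Y$ with the parameters $n_1$, $p$ and $n_2$, $p$, the individual probabilities follow from the Vandermonde identity:

$$\begin{aligned}\operatorname{P}(Z=k) &= \sum_{i=0}^{k} \left[\binom{n_1}{i} p^{i} (1-p)^{n_1-i}\right] \left[\binom{n_2}{k-i} p^{k-i} (1-p)^{n_2-k+i}\right] \\ &= \binom{n_1+n_2}{k} p^{k} (1-p)^{n_1+n_2-k} \qquad (k = 0, 1, \dotsc, n_1+n_2),\end{aligned}$$

thus again a binomially distributed random variable, but with the parameters $n_1 + n_2$ and $p$.

Thus, the binomial distribution is reproductive for fixed $p$.

If the sum $Z = X + Y$ is known, each of the random variables $X$ and $Y$ follows a hypergeometric distribution under this condition. For $X$, the conditional probability is

$$\operatorname{P}(X = \ell \mid Z = k) = \frac{\operatorname{P}(X = \ell) \operatorname{P}(Y = k - \ell)}{\operatorname{P}(Z = k)} = \frac{\binom{n_1}{\ell} \binom{n_2}{k-\ell}}{\binom{n_1+n_2}{k}}.$$

This represents a hypergeometric distribution.

In general: If the $m$ random variables $X_i$ are stochastically independent and binomially distributed with the parameters $n_i$ and $p$, then the sum $X_1 + X_2 + \dotsb + X_m$ is also binomially distributed, with the parameters $n_1 + n_2 + \dotsb + n_m$ and $p$.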
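The reproductive property can be checked numerically by convolving two probability functions with the same success probability. The parameter values below are hypothetical, and the helper names are chosen for illustration.

```python
from math import comb

def binom_pmf(k, n, p):
    if k < 0 or k > n:
        return 0.0
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def pmf_of_sum(k, n1, n2, p):
    """P(X + Y = k) for independent X ~ B(n1, p) and Y ~ B(n2, p), by convolution."""
    return sum(binom_pmf(i, n1, p) * binom_pmf(k - i, n2, p) for i in range(k + 1))

n1, n2, p = 4, 7, 0.35  # hypothetical parameters
largest_gap = max(
    abs(pmf_of_sum(k, n1, n2, p) - binom_pmf(k, n1 + n2, p))
    for k in range(n1 + n2 + 1)
)
print(f"largest deviation from B(k | p, n1 + n2): {largest_gap:.2e}")
```

The deviation is at the level of floating-point rounding, in line with the statement that the sum is again binomially distributed with the parameters $n_1 + n_2$ and $p$.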

Relationship to other distributions

Relationship to Bernoulli distribution

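A special case of the binomial distribution for $n = 1$ is the Bernoulli distribution. The sum of independent and identically Bernoulli distributed random variables therefore follows the binomial distribution.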

Relationship to the generalized binomial distribution

The binomial distribution is a special case of the generalized binomial distribution with $p_i = p$ for all $i \in \{1, \dotsc, n\}$.

Transition to normal distribution

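According to the de Moivre-Laplace theorem, the binomial distribution converges to a normal distribution in the limiting case $n \to \infty$; that is, the normal distribution can be used as an approximation of the binomial distribution when $n$ is sufficiently large and $p$ is neither too close to 0 nor too close to 1.

It holds $\mu = np$ and $\sigma^2 = npq$ with $q = 1 - p$. Inserting these into the distribution function $\Phi$ of the standard normal distribution gives

$$B(k \mid p, n) \approx \Phi\!\left(\frac{k + 0.5 - np}{\sqrt{npq}}\right) - \Phi\!\left(\frac{k - 0.5 - np}{\sqrt{npq}}\right) \approx \frac{1}{\sqrt{2\pi npq}} \exp\!\left(-\frac{(k - np)^2}{2npq}\right).$$

As can be seen, the result is thus nothing but the function value of the normal distribution density for $x = k$, $\mu = np$ and $\sigma^2 = npq$.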
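To get a feeling for the quality of the normal approximation, the exact probabilities can be compared with the difference of two values of the standard normal distribution function (the usual continuity correction of $\pm 0.5$). The sketch below assumes hypothetical parameters $n = 100$, $p = 0.4$ and builds $\Phi$ from `math.erf`.

```python
from math import comb, erf, sqrt

def binom_pmf(k, n, p):
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def std_normal_cdf(x):
    """Distribution function of the standard normal distribution."""
    return 0.5 * (1 + erf(x / sqrt(2)))

def normal_approx(k, n, p):
    """Approximation of P(X = k) with continuity correction."""
    mu, sigma = n * p, sqrt(n * p * (1 - p))
    return std_normal_cdf((k + 0.5 - mu) / sigma) - std_normal_cdf((k - 0.5 - mu) / sigma)

n, p = 100, 0.4  # hypothetical parameters
max_error = max(abs(binom_pmf(k, n, p) - normal_approx(k, n, p)) for k in range(n + 1))
print(f"largest absolute error over all k: {max_error:.5f}")
```

For these parameters the largest absolute error is small compared with the largest probability (about 0.08); for small $n$ or $p$ near 0 or 1 the agreement gets worse.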

Transition to Poisson distribution

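An asymptotically asymmetric binomial distribution whose expected value $np$ converges to a constant $\lambda$ for large $n$ and small $p$ can be approximated by the Poisson distribution. The value $\lambda$ is then the expected value of all the binomial distributions considered in the limit as well as of the resulting Poisson distribution.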

A rule of thumb is that this approximation is useful when

The Poisson distribution is therefore the limiting distribution of the binomial distribution for large $n$ and small $p$, with $np = \lambda$ held fixed.

Relationship to geometric distribution

The number of failures until a success occurs for the first time is described by the geometric distribution.

Relationship to the negative binomial distribution

The negative binomial distribution, on the other hand, describes the probability distribution of the number of trials required to achieve a given number of successes in a Bernoulli process.

Relationship to hypergeometric distribution

In the binomial distribution, the selected samples are returned to the population, so they can be selected again at a later time. In contrast, if the samples are not returned to the population, the hypergeometric distribution is used. The two distributions merge when the size $N$ of the population is large compared with the sample size $n$.

Relationship to multinomial distribution

The binomial distribution is a special case of the multinomial distribution.

Relationship to Rademacher distribution

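If $Y$ is a binomially distributed random variable with the parameters $n$ and $p = 1/2$, then $Y$ can be represented as a scaled sum of $n$ independent Rademacher distributed random variables $X_1, \dotsc, X_n$:

$$Y = \frac{1}{2}\left(n + \sum_{i=1}^{n} X_i\right).$$

This is used in particular for the symmetric random walk on $\mathbb{Z}$.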

Relationship to Panjer distribution

The binomial distribution is a special case of the Panjer distribution, which combines the binomial, negative binomial and Poisson distributions in one distribution class.

Relationship to beta distribution

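For many applications it is necessary to evaluate the distribution function

$$\sum_{i=0}^{k} B(i \mid p, n)$$

concretely (for example, for statistical tests or for confidence intervals).

The following relationship to the beta distribution helps here (for $k < n$):

$$\sum_{i=0}^{k} \binom{n}{i} p^{i} (1-p)^{n-i} = \operatorname{Beta}(1-p; \, n-k, \, k+1).$$

For integer positive parameters $a$ and $b$, this is

$$\operatorname{Beta}(x; a, b) = \frac{(a+b-1)!}{(a-1)! \, (b-1)!} \int_{0}^{x} u^{a-1} (1-u)^{b-1} \, du.$$

To prove the equation

$$\sum_{i=0}^{k} \binom{n}{i} p^{i} (1-p)^{n-i} = \frac{n!}{(n-k-1)! \, k!} \int_{0}^{1-p} u^{n-k-1} (1-u)^{k} \, du,$$

you can proceed as follows:

- The left and right sides match for $p = 0$ (both sides are equal to 1).

- The derivatives with respect to $p$ of the left and right sides of the equation agree: both are equal to $-\frac{n!}{(n-k-1)! \, k!} \, p^{k} (1-p)^{n-k-1}$.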
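The relationship between the cumulative binomial distribution and the regularized incomplete beta function, $\operatorname{P}(X \le k) = I_{1-p}(n-k, k+1)$, can be checked numerically. The sketch below evaluates the integral with a composite Simpson rule (a choice made here for simplicity, not a recommendation for production code) for hypothetical values $n = 10$, $k = 3$, $p = 0.3$.

```python
from math import comb, factorial

def binom_cdf(k, n, p):
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def beta_cdf_integer(x, a, b, steps=2000):
    """Regularized incomplete beta function for positive integers a and b,
    evaluated with the composite Simpson rule (steps must be even)."""
    h = x / steps

    def integrand(u):
        return u ** (a - 1) * (1 - u) ** (b - 1)

    total = integrand(0.0) + integrand(x)
    for j in range(1, steps):
        total += (4 if j % 2 else 2) * integrand(j * h)
    integral = total * h / 3
    return factorial(a + b - 1) / (factorial(a - 1) * factorial(b - 1)) * integral

n, k, p = 10, 3, 0.3  # hypothetical parameters
left = binom_cdf(k, n, p)
right = beta_cdf_integer(1 - p, n - k, k + 1)
print(left, right)
```

Both values agree to many decimal places, which is what the identity predicts.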

Relationship to beta binomial distribution

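A binomial distribution whose parameter $p$ is itself beta-distributed is called a beta-binomial distribution. It is a mixture distribution.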

Relationship to the Pólya distribution

The binomial distribution is a special case of the Pólya distribution (choose $c = 0$).

Examples

Symmetric binomial distribution (p = 1/2)

[Figures: binomial distributions for p = 0.5 and n = 4, 16, 64; the same with the mean value subtracted; the same scaled with the standard deviation.]

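This case occurs for the $n$-fold toss of a fair coin (probability of heads equal to that of tails, i.e. 1/2). The first figure shows the binomial distribution for $p = 0.5$ and different values of $n$ as a function of $k$. These binomial distributions are mirror-symmetric about the value $k = n/2$.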

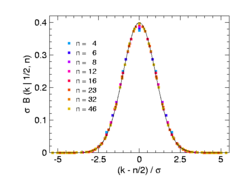

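This is illustrated in the second figure. The width of the distribution grows in proportion to the standard deviation $\sigma = \sqrt{n}/2$. The function value at $k = n/2$, i.e. the maximum of the curve, decreases in proportion to $1/\sigma$.

Accordingly, binomial distributions with different $n$ can be scaled onto one another by dividing the abscissa $k - n/2$ by $\sigma$ and multiplying the ordinate by $\sigma$ (third figure).

The adjacent graph shows rescaled binomial distributions again, now for other values of $n$ and in a plot that shows more clearly that all function values converge to a common curve as $n$ increases. Applying Stirling's formula to the binomial coefficients shows that this curve is a Gaussian bell curve:

$$f(x) = \frac{1}{\sqrt{2\pi}} e^{-x^2/2}.$$

This is the probability density of the standard normal distribution $\mathcal{N}(0, 1)$.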

The second graph on the right shows the same data in a semi-logarithmic plot. This is recommended if you want to check whether rare events that deviate from the expected value by several standard deviations also follow a binomial or normal distribution.

Pulling balls

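There are 80 balls in a container, 16 of which are yellow. Five times in a row, a ball is removed and then put back. Because the ball is put back, the probability of drawing a yellow ball is the same for every draw, namely 16/80 = 1/5. The value $B(3 \mid 0.2; 5)$ gives the probability that exactly 3 of the 5 balls drawn are yellow:

$$B(3 \mid 0.2; 5) = \binom{5}{3} \cdot 0.2^{3} \cdot 0.8^{2} = 10 \cdot 0.008 \cdot 0.64 = 0.0512.$$

So in about 5% of the cases you draw exactly 3 yellow balls.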

Table: $B(k \mid 0.2; 5)$

| k | Probability in % |
|---|---|
| 0 | 32.768 |
| 1 | 40.96 |
| 2 | 20.48 |
| 3 | 5.12 |
| 4 | 0.64 |
| 5 | 0.032 |
| ∑ | 100 |
| Expected value | 1 |
| Variance | 0.8 |
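The values for the ball example ($n = 5$ draws, success probability $p = 0.2$) can be reproduced with a few lines of code. This is a minimal sketch; the variable names are chosen for illustration.

```python
from math import comb

n, p = 5, 0.2  # 5 draws with replacement, 16 of 80 balls are yellow

probabilities = [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]
expected_value = sum(k * pk for k, pk in enumerate(probabilities))
variance = sum((k - expected_value) ** 2 * pk for k, pk in enumerate(probabilities))

for k, pk in enumerate(probabilities):
    print(f"k = {k}: {100 * pk:.3f} %")
print(f"sum            : {100 * sum(probabilities):.3f} %")
print(f"expected value : {expected_value:.3f}")
print(f"variance       : {variance:.3f}")
```

The expected value and variance computed from the probabilities agree with $np = 1$ and $np(1-p) = 0.8$.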

Number of people with birthday at the weekend

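The probability that a person has a birthday on a weekend this year is (for simplicity) 2/7. There are 10 people in a room. The value $B(k \mid 2/7; 10)$ gives, in the model of the binomial distribution, the probability that exactly $k$ of those present have a birthday on a weekend this year.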

Table: $B(k \mid 2/7; 10)$

| k | Probability in % (rounded) |
|---|---|
| 0 | 3.46 |
| 1 | 13.83 |
| 2 | 24.89 |
| 3 | 26.55 |
| 4 | 18.59 |
| 5 | 8.92 |
| 6 | 2.97 |
| 7 | 0.6797 |
| 8 | 0.1020 |
| 9 | 0.009063 |
| 10 | 0.0003625 |
| ∑ | 100 |
| Expected value | 2.86 |
| Variance | 2.04 |

Common birthday in the year

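253 people have come together. The value $B(k \mid 1/365; 253)$ gives the probability that exactly $k$ of those present have their birthday on a randomly chosen day of the year (leap years are ignored).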

Table: $B(k \mid 1/365; 253)$

| k | Probability in % (rounded) |
|---|---|
| 0 | 49.95 |
| 1 | 34.72 |
| 2 | 12.02 |
| 3 | 2.76 |
| 4 | 0.47 |

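Thus, the probability that "anyone" of these 253 people, i.e., one or more people, has a birthday on that day is $1 - B(0 \mid 1/365; 253) \approx 50.05\,\%$.

For 252 persons, the probability is $1 - B(0 \mid 1/365; 252) \approx 49.91\,\%$. So 253 is the smallest number of people for which this probability exceeds 50%.

The direct calculation of the binomial distribution can be difficult due to the large factorials. An approximation via the Poisson distribution is permissible here, because $n$ is large and $p$ is small. With the parameter $\lambda = np = 253/365$, the following values result: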

Table: $P_{253/365}(k)$

| k | Probability in % (rounded) |
|---|---|
| 0 | 50 |
| 1 | 34.66 |
| 2 | 12.01 |
| 3 | 2.78 |
| 4 | 0.48 |
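The binomial and Poisson values for the birthday example can be computed side by side. The sketch below uses $n = 253$, $p = 1/365$ and $\lambda = np$ as in the text; Python's integer arithmetic avoids the problem of large factorials for the small $k$ considered here.

```python
from math import comb, exp, factorial

n, p = 253, 1 / 365
lam = n * p  # Poisson parameter, lambda = n * p

def binom_pmf(k):
    return comb(n, k) * p**k * (1 - p) ** (n - k)

def poisson_pmf(k):
    return exp(-lam) * lam**k / factorial(k)

for k in range(5):
    print(f"k = {k}: binomial {100 * binom_pmf(k):6.2f} %, Poisson {100 * poisson_pmf(k):6.2f} %")

# Probability that at least one person has a birthday on the chosen day.
at_least_one_253 = 1 - (1 - p) ** 253
at_least_one_252 = 1 - (1 - p) ** 252
print(f"253 people: {100 * at_least_one_253:.2f} %, 252 people: {100 * at_least_one_252:.2f} %")
```

The two columns differ only in the second decimal place of the percentages, which illustrates why the Poisson approximation is acceptable for these parameters.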

Confidence interval for a probability

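In an opinion poll among $n$ people, $k$ people state that they will vote for party A. A 95% confidence interval for the unknown proportion of voters who vote for party A in the total electorate is to be determined.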

A solution to the problem without recourse to the normal distribution can be found in the article Confidence Interval for the Success Probability of the Binomial Distribution.
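One common way to obtain such an interval with recourse to the normal distribution is the Wald interval $\hat{p} \pm z \sqrt{\hat{p}(1-\hat{p})/n}$ with $\hat{p} = k/n$. The sketch below assumes this technique; the poll figures ($n = 1000$, $k = 400$) and the function name `wald_interval` are hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def wald_interval(k, n, level=0.95):
    """Normal-approximation (Wald) confidence interval for a success probability."""
    p_hat = k / n
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for level = 0.95
    half_width = z * sqrt(p_hat * (1 - p_hat) / n)
    return max(0.0, p_hat - half_width), min(1.0, p_hat + half_width)

lower, upper = wald_interval(k=400, n=1000)  # hypothetical poll result
print(f"95% confidence interval: [{lower:.4f}, {upper:.4f}]")
```

The Wald interval is known to be unreliable for small $n$ or for $\hat{p}$ near 0 or 1, which is one reason to prefer methods that work directly with the binomial distribution in those cases.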

Utilization model

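The following formula can be used to calculate the probability that $k$ of $n$ people simultaneously perform an activity that takes on average $m$ minutes per hour:

$$\operatorname{P}(X = k) = \binom{n}{k} \left(\frac{m}{60}\right)^{k} \left(1 - \frac{m}{60}\right)^{n-k}.$$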

Statistical error of class frequency in histograms

The display of independent measurement results in a histogram leads to the grouping of the measured values into classes.

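The probability for $n_i$ entries in class $i$ is given by the binomial distribution

$$B(n_i \mid p_i; n) \quad \text{with} \quad n = \sum_i n_i \quad \text{and} \quad p_i = \frac{n_i}{n}.$$

Expected value and variance of $n_i$ are then

$$\operatorname{E}(n_i) = n p_i = n_i \quad \text{and} \quad \operatorname{Var}(n_i) = n p_i (1 - p_i) = n_i \left(1 - \frac{n_i}{n}\right).$$

Thus, the statistical error of the number of entries in class $i$ is

$$\sigma(n_i) = \sqrt{n_i \left(1 - \frac{n_i}{n}\right)}.$$

When the number of classes is large, $p_i$ becomes small and $\sigma(n_i) \approx \sqrt{n_i}$.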

For example, the statistical accuracy of Monte Carlo simulations can be determined.
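As an illustration, the binomial error $\sqrt{n_i (1 - n_i/n)}$ of each class and the simpler estimate $\sqrt{n_i}$ can be computed for a small histogram. The class counts below are hypothetical.

```python
from math import sqrt

counts = [3, 12, 30, 41, 10, 4]  # hypothetical numbers of entries per class
n = sum(counts)                  # total number of measurements

binomial_errors = [sqrt(c * (1 - c / n)) for c in counts]
simple_errors = [sqrt(c) for c in counts]

for c, err, simple in zip(counts, binomial_errors, simple_errors):
    print(f"entries {c:3d}: binomial error {err:.2f}, sqrt estimate {simple:.2f}")
```

For classes that hold only a small share of all entries the two estimates nearly coincide; for heavily populated classes the binomial error is noticeably smaller.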

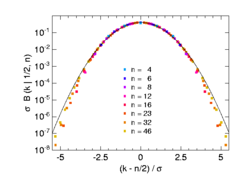

Figure: Binomial distributions with p = 0.5 (shifted by -n/2 and rescaled) for n = 4, 6, 8, 12, 16, 23, 32, 46.

Figure: The same data in a semi-logarithmic plot.

Random numbers

Random numbers for the binomial distribution are usually generated using the inversion method.

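Alternatively, one can exploit the fact that the sum of Bernoulli distributed random variables is binomially distributed. To do this, one generates $n$ Bernoulli distributed random numbers and adds them up; the result is a binomially distributed random number.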
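Both approaches can be sketched in a few lines. The inversion variant below walks through the cumulative probabilities until they exceed a uniform random number; the second variant adds up Bernoulli draws. Function names, seed and sample size are chosen here for illustration, and neither variant is tuned for speed.

```python
import random
from math import comb

def binomial_by_inversion(n, p, rng):
    """Smallest k whose cumulative probability exceeds a uniform random number."""
    u = rng.random()
    cumulative = 0.0
    for k in range(n + 1):
        cumulative += comb(n, k) * p**k * (1 - p) ** (n - k)
        if u < cumulative:
            return k
    return n  # guards against rounding when u is extremely close to 1

def binomial_by_bernoulli_sum(n, p, rng):
    """Sum of n independent Bernoulli(p) random numbers."""
    return sum(rng.random() < p for _ in range(n))

rng = random.Random(2024)  # fixed seed so that the run is reproducible
samples_inv = [binomial_by_inversion(10, 0.3, rng) for _ in range(20_000)]
samples_sum = [binomial_by_bernoulli_sum(10, 0.3, rng) for _ in range(20_000)]

mean_inv = sum(samples_inv) / len(samples_inv)
mean_sum = sum(samples_sum) / len(samples_sum)
print(f"sample means: {mean_inv:.3f} (inversion), {mean_sum:.3f} (Bernoulli sum)")
```

Both sample means should lie close to $np = 3$. The inversion method needs one uniform random number per draw, the Bernoulli sum needs $n$.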
