Transpose

In mathematics, a matrix (plural: matrices) is a rectangular arrangement (table) of elements, usually mathematical objects such as numbers. Matrices can be combined according to fixed rules, in particular by adding them or multiplying them together.

Matrices are a key concept in linear algebra and appear in almost all areas of mathematics. They give a clear representation of relationships in which linear combinations play a role, and thereby simplify both calculation and reasoning. In particular, they are used to represent linear mappings and to describe and solve systems of linear equations. The term matrix was introduced in 1850 by James Joseph Sylvester.

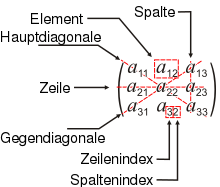

An arrangement, as in the adjacent figure, of

Designations

Terms and first properties

Notation

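Matrices are conventionally written by arranging the elements in rows and columns between a large opening and a large closing bracket. Round brackets are the usual choice, but square brackets are also used. For example,

$$
\begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{pmatrix}
\quad \text{and} \quad
\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \end{bmatrix}
$$

denote matrices with two rows and three columns. Matrices are usually denoted by capital letters (sometimes in bold or, in handwriting, underlined once or twice), preferably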

Elements of the matrix

The elements of the matrix are also called entries or components of the matrix. They originate from a set

A given element is identified by two indices. The element in the first row and the first column is usually denoted $a_{11}$; in general, $a_{ij}$ denotes the element in row $i$ and column $j$.

Individual rows and columns are often referred to as row or column vectors. Example:

For row and column vectors of a matrix that stand alone, the index that does not vary is occasionally omitted. Sometimes column vectors are written as transposed row vectors for a more compact representation, thus:

Type

The type of a matrix is determined by the number of its rows and columns. A matrix with $m$ rows and $n$ columns is called an $m \times n$ matrix.
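As a minimal sketch of the idea of a matrix type, the following Python snippet stores a matrix as a list of rows and reads off its number of rows and columns. The helper names `shape` and `entry` and the sample values are assumptions made for illustration only; they do not come from the article.

```python
# Hypothetical 2 x 3 matrix, stored as a list of rows.
A = [
    [1, 2, 3],
    [4, 5, 6],
]


def shape(matrix):
    """Return (m, n): the number of rows and the number of columns."""
    m = len(matrix)
    n = len(matrix[0]) if m else 0
    # All rows must have the same length, otherwise the table is not rectangular.
    if any(len(row) != n for row in matrix):
        raise ValueError("rows have different lengths")
    return m, n


def entry(matrix, i, j):
    """Return the entry in row i and column j, counting from 1 as in the notation."""
    return matrix[i - 1][j - 1]


print(shape(A))        # (2, 3)
print(entry(A, 1, 1))  # 1
print(entry(A, 2, 3))  # 6
```

The 1-based indices in `entry` follow the mathematical convention, whereas Python lists themselves are indexed from 0.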

A matrix consisting of only one column or only one row is usually considered a vector. A vector with

Formal representation

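A matrix is a doubly indexed family. Formally, it is a function

$$
A \colon \{1, \dots, m\} \times \{1, \dots, n\} \to K, \quad (i, j) \mapsto a_{ij},
$$

which assigns to each index pair $(i, j)$ the entry $a_{ij}$ as its function value. Here $K$ denotes the set from which the entries are taken.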
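The view of a matrix as a function on index pairs can be mirrored quite directly in code. The sketch below uses a Python dictionary whose keys are the pairs (row index, column index); the type 2 by 3 and the rule used to fill in the entries are arbitrary choices for illustration.

```python
from itertools import product

m, n = 2, 3  # hypothetical type: 2 rows, 3 columns

# The index set {1, ..., m} x {1, ..., n}.
index_pairs = list(product(range(1, m + 1), range(1, n + 1)))

# A doubly indexed family: each pair (i, j) is assigned exactly one entry.
# The rule 10 * i + j is an arbitrary choice made for this example.
A = {(i, j): 10 * i + j for (i, j) in index_pairs}

print(A[(1, 1)])  # 11
print(A[(2, 3)])  # 23
print(len(A))     # 6
```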

The set

Addition and multiplication

Elementary arithmetic operations are defined on the space of matrices.

Matrix addition

→ Main article: Matrix addition

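Two matrices can be added if they are of the same type, that is, if they have the same number of rows and the same number of columns. The sum of two $m \times n$ matrices $A = (a_{ij})$ and $B = (b_{ij})$ is computed componentwise:

$$
A + B = (a_{ij} + b_{ij})_{i = 1, \dots, m;\ j = 1, \dots, n}
$$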

Calculation example:

In linear algebra, the entries of the matrices are usually elements of a field, such as the real or complex numbers. In this case, matrix addition is associative and commutative, and it has a neutral element, the zero matrix. In general, however, matrix addition has these properties only if the entries are elements of an algebraic structure that has them.
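Entry-by-entry addition can be sketched in a few lines of Python. The function name `mat_add` and the sample matrices are assumptions for illustration; matrices are represented as lists of rows with integer entries.

```python
def mat_add(A, B):
    """Add two matrices of the same type entry by entry."""
    same_type = len(A) == len(B) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(A, B)
    )
    if not same_type:
        raise ValueError("matrices must have the same number of rows and columns")
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


A = [[1, -3, 2], [1, 2, 7]]
B = [[0, 3, 5], [2, 1, -1]]
ZERO = [[0, 0, 0], [0, 0, 0]]  # zero matrix of the same type

print(mat_add(A, B))                   # [[1, 0, 7], [3, 3, 6]]
print(mat_add(A, B) == mat_add(B, A))  # True: addition is commutative here
print(mat_add(A, ZERO) == A)           # True: the zero matrix is neutral
```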

Scalar multiplication

→ Main article: Scalar multiplication

A matrix is multiplied by a scalar by multiplying each entry of the matrix by the scalar:

$$
\lambda \cdot A = (\lambda \cdot a_{ij})
$$

Calculation example:

Scalar multiplication must not be confused with the scalar product. For scalar multiplication to be defined, the scalar $\lambda$ and the entries of the matrix must belong to the same ring.
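A minimal Python sketch of scalar multiplication, assuming integer entries and a matrix stored as a list of rows; the name `scalar_mul` and the sample values are illustrative only.

```python
def scalar_mul(lam, A):
    """Multiply every entry of the matrix A by the scalar lam."""
    return [[lam * a for a in row] for row in A]


A = [[1, -3, 2], [1, 2, 7]]

print(scalar_mul(5, A))  # [[5, -15, 10], [5, 10, 35]]
```

The result has the same type as the input matrix; only the entries are scaled.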

Matrix multiplication

→ Main article: Matrix multiplication

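Two matrices can be multiplied if the number of columns of the left matrix is equal to the number of rows of the right matrix. The product of an $l \times m$ matrix $A = (a_{ij})$ and an $m \times n$ matrix $B = (b_{jk})$ is an $l \times n$ matrix $C = (c_{ik})$ whose entries are

$$
c_{ik} = \sum_{j=1}^{m} a_{ij} \cdot b_{jk}
$$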
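The compatibility condition and the row-by-column rule can be sketched in Python as follows. The function name `mat_mul` and the sample matrices are assumptions for illustration; this is a straightforward textbook implementation, not an optimized one.

```python
def mat_mul(A, B):
    """Multiply A (l rows, m columns) by B (m rows, n columns)."""
    l, m = len(A), len(A[0])
    if len(B) != m:
        raise ValueError("columns of the left matrix must equal rows of the right matrix")
    n = len(B[0])
    # Entry (i, k) of the product is the sum over j of A[i][j] * B[j][k].
    return [
        [sum(A[i][j] * B[j][k] for j in range(m)) for k in range(n)]
        for i in range(l)
    ]


A = [[1, 2, 3], [4, 5, 6]]       # 2 x 3
B = [[6, -1], [3, 2], [0, -3]]   # 3 x 2

print(mat_mul(A, B))  # [[12, -6], [39, -12]]
```

The product of a 2 by 3 and a 3 by 2 matrix is a 2 by 2 matrix: the result takes its row count from the left factor and its column count from the right factor.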

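Matrix multiplication is not commutative, i.e., in general $B \cdot A \neq A \cdot B$. It is, however, associative:

$$
(A \cdot B) \cdot C = A \cdot (B \cdot C)
$$

Therefore, a chain of matrix multiplications can be parenthesized in different ways without changing the result. Finding a parenthesization that requires the minimum number of elementary arithmetic operations is an optimization problem. Moreover, matrix addition and matrix multiplication satisfy both distributive laws:

$$
(A + B) \cdot C = A \cdot C + B \cdot C
$$

for all $l \times m$ matrices $A, B$ and all $m \times n$ matrices $C$, and

$$
A \cdot (B + C) = A \cdot B + A \cdot C
$$

for all $l \times m$ matrices $A$ and all $m \times n$ matrices $B, C$.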
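The properties of matrix multiplication discussed above can be checked numerically on small examples. The sketch below assumes integer entries, so all comparisons are exact; the helper names, the sample matrices, and the dimensions used in the cost comparison are illustrative assumptions. The cost model simply counts the scalar multiplications of the schoolbook method.

```python
def mat_add(A, B):
    """Entry-by-entry sum of two matrices of the same type."""
    return [[a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def mat_mul(A, B):
    """Row-by-column product; columns of A must equal rows of B."""
    m = len(B)
    return [
        [sum(row[j] * B[j][k] for j in range(m)) for k in range(len(B[0]))]
        for row in A
    ]


A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 3]]

# Not commutative: A * B and B * A differ for these matrices.
print(mat_mul(A, B) == mat_mul(B, A))  # False

# Both parenthesizations of a chain give the same product.
print(mat_mul(mat_mul(A, B), C) == mat_mul(A, mat_mul(B, C)))  # True

# Both distributive laws.
print(mat_mul(mat_add(A, B), C) == mat_add(mat_mul(A, C), mat_mul(B, C)))  # True
print(mat_mul(A, mat_add(B, C)) == mat_add(mat_mul(A, B), mat_mul(A, C)))  # True


def cost(p, q, r):
    """Scalar multiplications needed for a (p x q) times (q x r) product."""
    return p * q * r


# Chain of a 10 x 100, a 100 x 5 and a 5 x 50 matrix.
left_first = cost(10, 100, 5) + cost(10, 5, 50)      # (X * Y) * Z
right_first = cost(100, 5, 50) + cost(10, 100, 50)   # X * (Y * Z)
print(left_first, right_first)  # 7500 75000
```

Although both parenthesizations yield the same matrix, the operation counts differ by a factor of ten in this example, which is why the choice of parenthesization is treated as an optimization problem.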

Square matrices
